5 Things To Not Do with vSphere

I'll admit it: I love the flexibility that VMware vSphere virtualization provides. You can do nearly anything on this platform, and at times that can actually be a bad thing. I recently recorded a podcast with Greg Stuart, who blogs at vdestination.com, and we observed this very point. We agreed that not all virtualized environments are created equal, and it is very rare, if not impossible, to see two environments that are the same.

This raises the question: How can virtualized environments be so different? And because of that, what are we doing that is right in one environment but wrong in another? I've come up with a list of things you should NOT do with your vSphere environment, even if you can!

1. Don't avoid DNS.

Have you checked out my popular post from last year, "10 Post-Installation Tweaks to Hyper-V"? My #1 post-installation tweak there is that you should get DNS right. In terms of what NOT to do, the first no-no is using hosts files. Sure, it may work, but luckily vSphere 5.1 runs nslookup queries against a DNS server and reports the status. No faking it now! Get DNS correct.
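If you want to sanity-check name resolution yourself before vSphere does it for you, here is a minimal sketch in Python. It is not how vSphere performs its own check; it simply does a forward lookup followed by a reverse lookup with the standard `socket` module and complains if the two don't agree. The function name `check_dns` and the idea of passing in the resolver functions are my own assumptions for illustration. One caveat: the operating system resolver also reads the local hosts file, so run this from a machine that has no hosts-file entries for the names you are testing.

```python
import socket


def check_dns(hostname, resolve=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return (ok, detail) after a forward and a reverse lookup of hostname.

    The resolve/reverse parameters default to the OS resolver; they are
    injectable only so the function can be exercised without a network.
    """
    try:
        ip = resolve(hostname)
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        return False, f"forward lookup failed: {exc}"
    try:
        name = reverse(ip)[0]  # first element is the primary host name
    except OSError as exc:  # socket.herror is a subclass of OSError
        return False, f"reverse lookup of {ip} failed: {exc}"
    # Compare case-insensitively and ignore a trailing dot on either name.
    if name.lower().rstrip(".") != hostname.lower().rstrip("."):
        return False, f"{ip} resolves back to {name}, not {hostname}"
    return True, f"{hostname} <-> {ip}"
```

Calling `check_dns("esxi01.lab.example.com")` (a made-up host name) returns a pass/fail flag and a short explanation. Loop it over every host and your vCenter Server name.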

2. Don't stack networks all on top of each other.

Just because you can doesn't mean you should. This is especially the case for network switches. Now, I'll be honest: I do stack many networks on top of each other in the lab, but that is the lab. Production is different, and there I've always put in as much separation as possible. This can be as simple as multiple VLANs, or as sophisticated as management traffic on a separate VLAN and interfaces just for vmkernel.

Storage interfaces can be treated the same way: different VLANs and, ideally, different interfaces. Fig. 1 shows how NOT to deploy it (from my lab!).

Figure 1. This virtual switch has management and iSCSI storage traffic (on vmkernel) and guest VM networking on the same physical interface and TCP/IP segment.

Also, please don't do as I have (again, in the lab) and assign only one physical adapter to the virtual switch for your production workloads. That somewhat defeats the purpose of virtualization abstraction!
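To make the two complaints above concrete, here is a rough Python sketch that audits a hand-written description of a virtual switch. It does not talk to vCenter or any VMware API; the `PortGroup` and `VSwitch` classes, the traffic labels, and the rule set are all hypothetical and only model what I described: mixed traffic types on one VLAN, and a switch with a single uplink.

```python
from dataclasses import dataclass, field


@dataclass
class PortGroup:
    name: str
    traffic: str  # assumed labels: "management", "storage", or "vm"
    vlan: int     # 0 is used here to mean untagged


@dataclass
class VSwitch:
    name: str
    uplinks: list
    port_groups: list = field(default_factory=list)


def audit(vswitch):
    """Return a list of warnings for one virtual switch description."""
    warnings = []
    # A single physical adapter means no redundancy for anything on the switch.
    if len(vswitch.uplinks) < 2:
        warnings.append(
            f"{vswitch.name}: only {len(vswitch.uplinks)} uplink(s), no redundancy"
        )
    # Collect the kinds of traffic that share each VLAN.
    by_vlan = {}
    for pg in vswitch.port_groups:
        by_vlan.setdefault(pg.vlan, set()).add(pg.traffic)
    for vlan, kinds in sorted(by_vlan.items()):
        if len(kinds) > 1:
            warnings.append(
                f"{vswitch.name}: VLAN {vlan} carries mixed traffic: "
                + ", ".join(sorted(kinds))
            )
    return warnings
```

Fed a switch like my lab one (one uplink, with management, iSCSI and VM port groups all untagged), `audit` returns two warnings. A switch with two uplinks and one traffic type per VLAN returns an empty list.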

3. Don't avoid updating hosts. And VM hardware. And VMware Tools.

vSphere provides a great way to update all of the components of your virtualized infrastructure: vSphere Update Manager. In the case of a vSphere host, if you need to go from vSphere 4.1 to 5.1, no problem. If you need to put in the latest hotfixes for vSphere 5.0, no problem there either; Update Manager makes host management very easy.

The same goes for virtual machines; they need attention, too. Update Manager is a great way to manage upgrades in sequence for VM hardware levels. You don't have any hardware version 4 VMs lying around out there now, do you? Along with the hardware version, you can also manage the VMware Tools installation and upgrade process. The tool is there, so use it.
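If you keep an inventory export around, a few lines of Python can tell you which VMs are overdue. This is only a sketch over made-up data: the dictionary keys (`name`, `hw`, `tools_current`) and the threshold of hardware version 8 are my assumptions, and the `vmx-NN` strings follow the usual way hardware versions are written. Update Manager remains the tool that actually does the upgrades.

```python
def hardware_version(vmx):
    """Turn a version string such as 'vmx-07' into the integer 7."""
    return int(vmx.lower().replace("vmx-", ""))


def needs_attention(inventory, minimum_hw=8):
    """Return (name, reasons) for VMs with old hardware or stale VMware Tools.

    inventory is a list of dicts with the hypothetical keys
    'name', 'hw' (e.g. 'vmx-08') and 'tools_current' (bool).
    """
    flagged = []
    for vm in inventory:
        reasons = []
        if hardware_version(vm["hw"]) < minimum_hw:
            reasons.append(f"hardware {vm['hw']} is below version {minimum_hw}")
        if not vm["tools_current"]:
            reasons.append("VMware Tools out of date")
        if reasons:
            flagged.append((vm["name"], reasons))
    return flagged
```

Pick `minimum_hw` to match the oldest host version you still run; anything the function returns is a candidate for an Update Manager baseline.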

4. Don't overcomplicate DRS configuration.

If you have already done this, you have probably learned not to do it again. It's like touching a hot coal in a fire: you may do it once, but you quickly learn not to repeat it. Unnecessary tweaking of DRS resource provisioning can cripple a VM's performance, especially if you start messing with the share values assigned to VMs or resource pools. For the mass of VMware admins out there, simply don't do it. Even I don't do this.
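Here is a back-of-the-envelope illustration of how share values can backfire. It is a deliberately simplified model, not the DRS algorithm: it assumes full contention, ignores reservations, limits and actual demand, and just divides capacity among sibling resource pools in proportion to their shares. The pool names, share values, VM counts and the 12,000 MHz figure are all invented for the example.

```python
def split_by_shares(capacity_mhz, shares):
    """Divide capacity among siblings in proportion to their share values."""
    total = sum(shares.values())
    return {name: capacity_mhz * value / total for name, value in shares.items()}


def per_vm(capacity_mhz, pools):
    """Return the CPU each VM ends up with under full contention.

    pools maps a pool name to (shares, number_of_vms); every VM inside a
    pool is assumed to get an equal slice of that pool's entitlement.
    """
    by_pool = split_by_shares(
        capacity_mhz, {name: shares for name, (shares, _) in pools.items()}
    )
    return {name: by_pool[name] / pools[name][1] for name in pools}


# Hypothetical cluster: "prod" has twice the shares of "test" but four times the VMs.
pools = {"prod": (8000, 8), "test": (4000, 2)}
result = per_vm(12000, pools)
print(result)  # {'prod': 1000.0, 'test': 2000.0}
```

In this toy case the "high priority" pool wins twice the CPU, yet each production VM ends up with half of what a test VM gets, because the pool's slice is spread over more machines. That is the kind of surprise that makes me leave these numbers alone.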

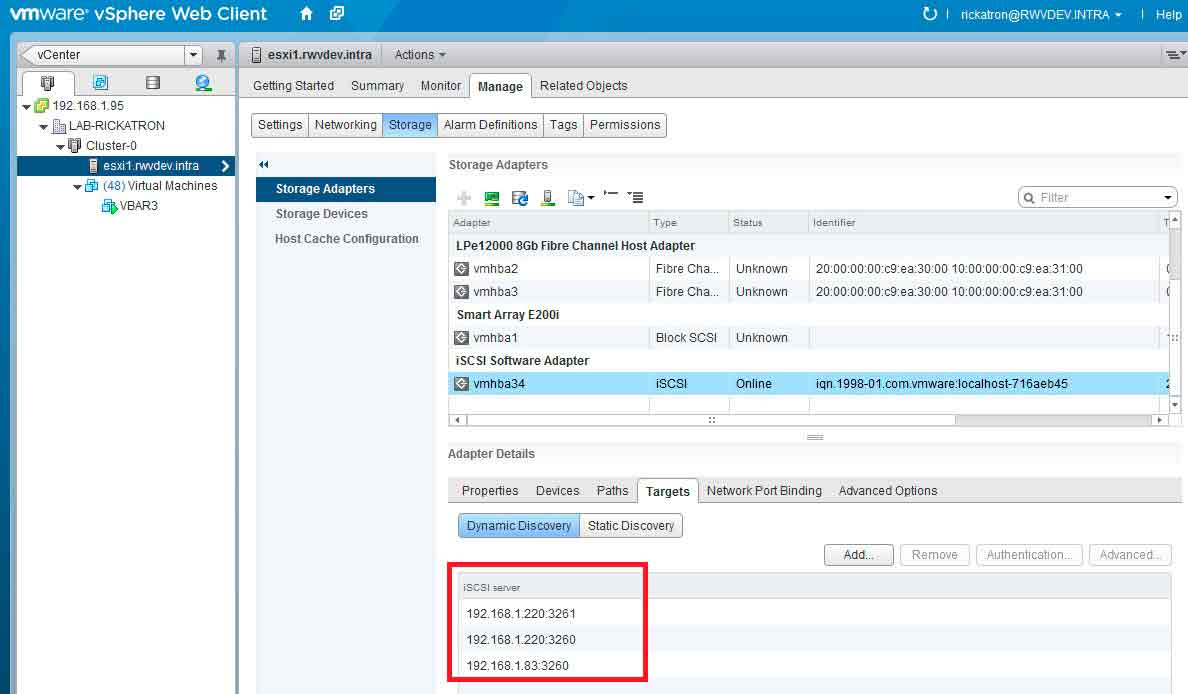

5. Don't leave old storage configurations in vmkernel.

I'll admit that I'm not a good housekeeper. In fact, the best evidence of this is, again, my lab environment. I'm much better behaved in production, so trust me on this! But one thing that drives me crazy is old storage configuration entries in the iSCSI target discovery section of the storage adapter configuration. Again, back to my lab. Anyone see the problem here? I'm going to bet that one of those two entries for the same IP address is wrong!

Figure 2. The iSCSI target discovery section of the storage adapter configuration in my lab, with two entries for the same IP address.
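Spotting this by eye gets harder as the list grows, so here is a small Python sketch that does the housekeeping check for you. It works on a plain list of `address:port` strings that you would copy out of the discovery tab yourself; the function name, the input format and the sample addresses are hypothetical, and it handles IPv4 addresses only. Entries without a port are assumed to use 3260, the default iSCSI port.

```python
from collections import defaultdict


def duplicate_targets(entries):
    """Return {ip: [ports]} for every IP address listed more than once."""
    by_ip = defaultdict(list)
    for entry in entries:
        # Split "address:port"; an entry without a port gets the iSCSI default.
        ip, _, port = entry.partition(":")
        by_ip[ip.strip()].append(port.strip() or "3260")
    return {ip: ports for ip, ports in by_ip.items() if len(ports) > 1}
```

For a list such as `["192.168.1.50:3260", "192.168.1.51", "192.168.1.50:3261"]`, the function reports 192.168.1.50 with both ports, which is your cue to work out which entry is stale and remove it.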

Hopefully you all can give me a pass; after all, this is my lab. But the fact is, we find ourselves doing these things from time to time in a production capacity.

What configuration practices do you find yourselves constantly telling people NOT to do in their (or your own!) VMware environment? Share your comments here.

Posted by Rick Vanover on 04/10/2013 at 3:32 PM