How-To

Introduction to Docker, Part 2: Repos and Resource Consumption

After introducing the basics, Tom Fenton looks at the ephemeral nature of container instances and how to use networking and persistent storage with them.

In the first article of my Docker series, I covered the basics of Docker containers: how to install Docker, and how to run, start and access a Docker instance. In that article, I ran two different Linux distros on an Ubuntu host and accessed them from the command line.

In this article, I will look at the ephemeral nature of container instances and how to use networking and persistent storage with them. But first, we will look at one of the features that really made Docker take off: the public repository for Docker images called Docker Hub.

Docker Hub

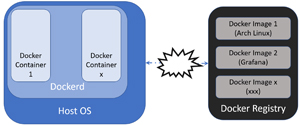

Containers allow you to run OSes and applications in isolation from one another; that is, they run in a sandboxed environment without interference from the base OS or other container instances. Dockerd is the daemon (process) that runs on the host OS and manages the Docker containers, and it can be accessed via a command-line interface (CLI) called docker. I used various Docker commands in my previous article.


Docker containers and images are entities that are used to assemble an application in Docker. The container is the environment that a Docker image runs in. An image is a read-only template that is used to store and ship applications. This leads us to Docker Registry.

The real power of Docker lies in its ability to use a registry as a repository for images. By using a registry, you can store images that you have built and share them with other Docker environments. There are public registries, which anyone can pull images from, as well as private registries, which have limited access. The main public registry for Docker is known as Docker Hub.
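To make the registry idea concrete, here is a minimal sketch of how a short image name is commonly expanded into a full reference that names the registry, the repository, and the tag. The function name expand_image_ref and the host registry.example.com:5000 are hypothetical, and the rules are a simplification of Docker's behavior (digests are not handled): names without a registry host default to docker.io, single-word names are placed under library/, and a missing tag defaults to latest.

```shell
# Hypothetical helper: expand a short image name into registry/repository:tag.
# Simplified sketch of Docker's default naming rules; digests are not handled.
expand_image_ref() {
    ref="$1"
    registry="docker.io"
    rest="$ref"
    case "$ref" in
        */*)
            first="${ref%%/*}"
            # The first path component counts as a registry host only if it
            # contains a dot or a colon, or is exactly "localhost".
            case "$first" in
                *.*|*:*|localhost)
                    registry="$first"
                    rest="${ref#*/}"
                    ;;
            esac
            ;;
    esac
    # Single-word names on Docker Hub live under the "library" namespace.
    if [ "$registry" = "docker.io" ]; then
        case "$rest" in
            */*) ;;
            *) rest="library/$rest" ;;
        esac
    fi
    # Add the default tag when the last path component has none.
    case "${rest##*/}" in
        *:*) ;;
        *) rest="$rest:latest" ;;
    esac
    echo "$registry/$rest"
}

expand_image_ref alpine                                   # docker.io/library/alpine:latest
expand_image_ref nouchka/sqlite3                          # docker.io/nouchka/sqlite3:latest
expand_image_ref registry.example.com:5000/team/app:1.0   # unchanged
```

The last call illustrates how an image held in a private registry would be named: the registry host simply becomes the first part of the image name.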

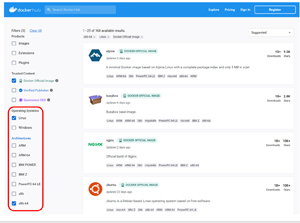

From Docker Hub, you can search for images that contain the OSes and applications that you want to download and run. When searching for images, you can filter by OS and architecture, and you can limit the results to "official" and "verified" images.


The above screenshot shows the results of my search for Alpine Linux, a very small Linux image. The Alpine image is only 8MB and requires only a 130MB disk.

Using the Docker Hub web site is a convenient way to search for a Docker image, but you can also search for images from the command line. The basic syntax is docker search <image name>. I entered docker search SQlite to search for an SQLite image.


Both SQLite and SQLite-adjacent products, such as management tools, were displayed. Besides the image name and description, the output has columns for "STARS" (how many people liked the image), "OFFICIAL" (whether the image was built from a trusted source), and "AUTOMATED" (whether the image was built automatically from a GitHub or Bitbucket repository).

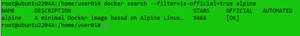

You can filter the search results on image attributes. To search for official Alpine Linux images, I entered:

docker search --filter=is-official=true alpine
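Filters can be combined, and the output can be trimmed with a format template. The sketch below assumes a Docker CLI recent enough to support --format and --limit on docker search; the wrapper name search_official, the star threshold, and the limit of 10 are illustrative choices only.

```shell
# Hypothetical wrapper: list official images that match a search term and have
# at least a given number of stars, printing only the name and star count.
search_official() {
    term="$1"
    min_stars="${2:-0}"
    docker search \
        --filter is-official=true \
        --filter "stars=$min_stars" \
        --limit 10 \
        --format '{{.Name}} {{.StarCount}}' \
        "$term"
}
```

Calling search_official alpine 100 would then list only official Alpine-related images with 100 or more stars.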


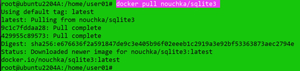

If you want to pull an image down to your local machine without running it, you can enter:

docker pull nouchka/sqlite3
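Because pulling contacts the registry, a script may want to skip the download when the image is already stored locally. A minimal sketch, assuming that docker image inspect returns a non-zero exit status for an image that is not present; the helper name pull_if_missing is hypothetical.

```shell
# Hypothetical helper: pull an image only when it is not already stored locally.
pull_if_missing() {
    image="$1"
    if docker image inspect "$image" >/dev/null 2>&1; then
        echo "$image is already present locally"
    else
        docker pull "$image"
    fi
}
```

For example, pull_if_missing nouchka/sqlite3 downloads the image on the first call and only prints a message on later calls.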


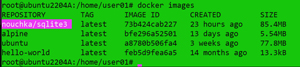

The docker images command will display your local images.


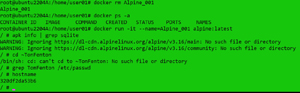

Once I found the image on Docker Hub, I verified that Docker was running, listed the images currently on my system, listed all instances (running or stopped), downloaded and deployed the latest Alpine Linux image under the name Alpine_001, listed the images and instances again, and then attached to a shell on the instance, all by entering:

systemctl status docker

docker image ls

docker ps -a

docker run -itd --name=Alpine_001 alpine:latest

docker image ls

docker ps -a

docker attach Alpine_001
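A caveat when using docker attach: it connects to the container's main process, so typing exit in that shell ends the process and stops the container. The default key sequence for detaching without stopping is Ctrl-P followed by Ctrl-Q. As a hedged alternative, the sketch below uses docker exec to start a second shell that can be exited safely. The helper name open_shell is hypothetical, and it assumes the image ships /bin/sh, as the alpine image does.

```shell
# Hypothetical helper: open an extra shell in a container, but only if the
# container is currently running.
open_shell() {
    name="$1"
    running="$(docker inspect --format '{{.State.Running}}' "$name" 2>/dev/null)"
    if [ "$running" = "true" ]; then
        docker exec -it "$name" /bin/sh
    else
        echo "container $name is not running" >&2
        return 1
    fi
}
```

Running open_shell Alpine_001 gives a shell in the instance; exiting it leaves Alpine_001 running.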


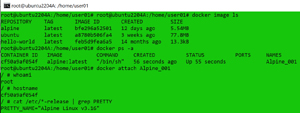

Once I was in the instance, I looked at my username, the hostname of the system, and the name of the distro, as well as listed the IP address of the system and pinged my local router (10.0.0.1), by entering:

whoami

hostname

cat /etc/*-release | grep PRETTY

ip a

ping 10.0.0.1


While the IP address of the instance is different from that of the host system, it can still ping the local router.
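The instance has its own address because, by default, Docker attaches containers to a private bridge network on the host and routes their outbound traffic through the host. The address can also be read from the host without entering the instance. A minimal sketch, with container_ip as a hypothetical helper name:

```shell
# Hypothetical helper: print the IP address Docker assigned to a container
# on each network it is attached to.
container_ip() {
    docker inspect \
        --format '{{range .NetworkSettings.Networks}}{{.IPAddress}} {{end}}' \
        "$1"
}
```

Running container_ip Alpine_001 on the host should report the same address that ip a shows inside the instance.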

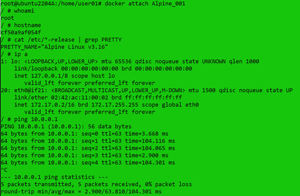

Ephemeral Nature of Containers

Containers are designed to be relatively short lived; when they are killed and removed, any information stored in them is lost. To explore the ephemeral nature of containers, I created a new user in an Alpine instance, verified that the user had a home directory, created a file in the instance, and installed an application in it (SQLite) by entering:

adduser TomFenton

cd ~TomFenton

pwd

apk info | grep sqlite

apk add sqlite

apk info | grep sqlite


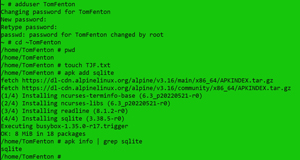

When I checked the instance, I found that SQLite was installed, and the user was there. I then exited the instance, killed it, removed it, and recreated it by entering:

docker kill Alpine_001

docker rm Alpine_001

docker ps -a

docker run -it --name=Alpine_001 alpine


When I went into the new instance, I found that SQLite was not installed, and the user was gone.
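The same experiment can be condensed into a non-interactive sketch. It assumes the alpine image; the container name Scratch_001, the marker file path, and the function name ephemeral_demo are all hypothetical. It uses docker rm -f, which kills and removes a container in one step.

```shell
# Hypothetical helper: show that a file written inside a container does not
# survive removing and recreating that container.
ephemeral_demo() {
    name="${1:-Scratch_001}"      # container name (illustrative)
    marker="/root/marker.txt"     # file created inside the container (illustrative)

    docker run -itd --name="$name" alpine >/dev/null
    docker exec "$name" touch "$marker"
    docker exec "$name" test -e "$marker" && echo "before: marker exists"

    # Kill and remove the container, then start a fresh one from the same image.
    docker rm -f "$name" >/dev/null
    docker run -itd --name="$name" alpine >/dev/null
    docker exec "$name" test -e "$marker" || echo "after: marker is gone"

    # Clean up the second container as well.
    docker rm -f "$name" >/dev/null
}
```

The second container starts from the unchanged, read-only image, which is why nothing written to the first one carries over.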

For many use cases, having non-persistent data on a system is not an issue; however, for other applications, such as databases, persistent data storage is needed. I will show how you can have persistent data storage in a later article, but first let's look at the resource (CPU and RAM) usage of a Docker instance.

Resource Tax of Containers

Although container instances are very lightweight, they do consume resources. To get a better idea of their resource usage, I stopped Docker from automatically starting, rebooted my system, and then worked through a series of steps: starting Docker, starting an Alpine Linux instance, starting an Ubuntu instance, starting a second Ubuntu instance, stopping all the instances, removing the containers, and stopping dockerd. I waited five minutes between steps, and at each step I measured the CPU and RAM usage on the host system with vmstat and the instances' resource usage with the docker stats command. To do this, I created the following script:

#!/bin/bash

SleepGetStats () {

sleep 300

echo "starting next step" >> LSTATS.out

echo "starting next step" >> DSTATS.out

vmstat -t -S M >> LSTATS.out

docker stats --no-stream >> DSTATS.out

}

##

## Start of Run

rm -f LSTATS.out

rm -f DSTATS.out

SleepGetStats

##Start Dockerd

systemctl start docker

SleepGetStats

##Start Alpine Linux instance

docker run -itd --name=Alpine_001 alpine

SleepGetStats

##Start Ubuntu instance

docker run -itd --name=Ubuntu_001 ubuntu

SleepGetStats

##Start another Ubuntu instance

docker run -itd --name=Ubuntu_002 ubuntu

SleepGetStats

##Stop all the instances

docker stop $(docker ps -a -q)

SleepGetStats

##Remove all the containers

docker rm $(docker ps --filter status=exited -q)

SleepGetStats

##Stop dockerd

systemctl stop docker

SleepGetStats

##Display output files

cat LSTATS.out

cat DSTATS.out
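Since LSTATS.out accumulates vmstat headers along with the data rows, a small filter helps pull out the columns of interest. The sketch below assumes the default procps vmstat layout, in which free memory is the 4th column and user and system CPU are the 13th and 14th; the function name summarize_vmstat is hypothetical.

```shell
# Hypothetical helper: for each vmstat data row in the given file, print the
# step number, free memory, user CPU and system CPU.
summarize_vmstat() {
    awk '
        /^starting next step/ { step++; next }        # marker written by the script
        $1 ~ /^[0-9]+$/ { print step, $4, $13, $14 }  # data rows start with a number
    ' "$1"
}
```

Running summarize_vmstat LSTATS.out prints one line per step. Keep in mind that a single vmstat invocation reports CPU figures averaged since boot; running vmstat 1 2 and reading the last row gives a current sample instead.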

Below is a table of the output from the host OS (vmstat):

| State | CPU User | CPU System | Free RAM (MB) |
| --- | --- | --- | --- |
| Base OS | 0 | 0 | 181 |
| Dockerd running | 0 | 0 | 176 |
| Alpine Linux | 0 | 0 | 165 |
| Ubuntu_001 | 0 | 0 | 147 |
| Ubuntu_002 | 0 | 0 | 161 |
| All instances killed | 0 | 0 | 151 |
| Dockerd stopped | 0 | 0 | 163 |
| Docker containers removed | 0 | 0 | 187 |

Stats from Docker stats:

| State | CPU % | Free RAM (MB) |
| --- | --- | --- |
| Base OS | N/A | N/A |
| Dockerd running | N/A | N/A |
| Alpine Linux | 0 | 0 |
| Ubuntu_001 | 0 | 0 |
| Ubuntu_002 | 0 | 0 |
| All instances killed | N/A | N/A |
| Dockerd stopped | N/A | N/A |
| Docker containers removed | N/A | N/A |
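The docker stats output can also be narrowed to the columns of interest with a format template, which makes a log file such as DSTATS.out easier to scan. A minimal sketch; the helper name container_usage and the exact line layout are illustrative.

```shell
# Hypothetical helper: one compact line per running container showing its
# name, CPU percentage and memory usage.
container_usage() {
    docker stats --no-stream \
        --format '{{.Name}}: cpu={{.CPUPerc}} mem={{.MemUsage}}'
}
```

When no containers are running, the command prints nothing, which corresponds to the N/A rows in the table.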

The graph below shows the host usage while my testing was taking place. This confirmed that the system was idle and that the instances I was running did not increase the CPU usage; in the worst case, free memory on the system dropped by only 34MB. Note that the instances I started were idle and not running any applications.
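The 34MB figure follows from the vmstat table: it is the gap between the free RAM on the base OS and the lowest reading recorded during the run. A short sketch of that arithmetic, with the readings copied from the table and the variable names chosen for illustration:

```shell
# Free RAM readings (MB) copied from the vmstat table, in the order listed.
readings="181 176 165 147 161 151 163 187"
baseline=181   # the "Base OS" row

# Find the lowest reading seen during the run.
lowest=$baseline
for value in $readings; do
    if [ "$value" -lt "$lowest" ]; then
        lowest=$value
    fi
done

echo "lowest free RAM: ${lowest} MB"                # 147 MB (Ubuntu_001 row)
echo "worst-case drop: $((baseline - lowest)) MB"   # 34 MB
```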


Conclusion

Below is a summary list of Docker commands used in this article:

| Command | Notes |
| --- | --- |
| docker run -it ubuntu bash | Download the Docker image (ubuntu) from the public repository if needed and run it in interactive mode (-i) using a pseudo-TTY (-t) |
| docker search SQlite | Search the repository for an SQLite image |
| docker search --filter=is-official=true alpine | Only search for official alpine images |
| docker pull nouchka/sqlite3 | Pull down an image to the local system |
| docker images | List the images that are on your local system |
| docker attach Alpine_001 | Attach to the standard input, output, and error streams of a running instance |
| docker kill Alpine_001 | Kill a running instance |
| docker rm Alpine_001 | Remove an instance |
| docker stats | Display resource usage of docker instances |

In this article, I discussed how Docker runs OSes and applications in isolation from each other under the management of dockerd, which can be accessed via a CLI called docker. Docker templates are called images and can be stored in private or public repositories called registries. The most popular public registry is Docker Hub. By default, Docker instances are non-persistent, and any information stored in them is lost when the instance is killed and removed.

As demonstrated in this article, the resource usage of Docker images and instances is very light. When an instance was running in the background, I found the CPU usage to be imperceptible. In the next articles in this series, I will cover networking and persistent storage with Docker.

Update: Part 3 (networking) is now available here.