New Copilot+ PCs Leverage Azure LLMs and Built-In SLMs

Microsoft has brought its small language model (SLM) technology, working together with cloud-based large language models (LLMs), to the device level with the introduction of Copilot+ PCs, which pair powerful processors with multiple state-of-the-art AI models.

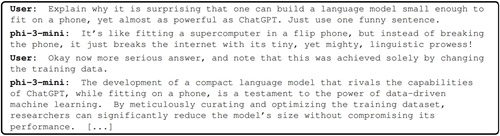

We earlier reported on the company's move to smaller AI models with last month's introduction of phi-3-mini, a 3.8 billion parameter model. By comparison, large models can boast hundreds of billions of parameters. One advantage of going small is that phi-3-mini can run on devices as small as phones.

Phi-3-Mini Explains Itself (source: Microsoft).

[Click on image for larger view.] Phi-3-Mini Explains Itself (source: Microsoft).

Now, SLMs are being used in conjunction with Azure-hosted LLMs to power new AI experiences on Copilot+ PCs, which are specially designed to take advantage of advanced AI. The PCs were introduced today, ahead of the company's Build 2024 developer conference, which kicks off tomorrow. The SLMs apparently are built into the devices and run locally, though the company didn't state this explicitly. Dell, for example, referenced "on-device AI" in its announcement of new Copilot+ PCs.

Copilot+ PCs (source: Microsoft).

[Click on image for larger view.] Copilot+ PCs (source: Microsoft).

In addition to the AI models, the first Copilot+ PCs are powered by Qualcomm's Snapdragon X Elite and Snapdragon X Plus processors, which are said to be up to 20 times more powerful and up to 100 times more efficient at running AI workloads.

"We introduced an all-new system architecture to bring the power of the CPU, GPU, and now a new high performance Neural Processing Unit (NPU) together," the company said. "Connected to and enhanced by the large language models (LLMs) running in our Azure Cloud in concert with small language models (SLMs), Copilot+ PCs can now achieve a level of performance never seen before."

A separate announcement provided details on the all-new Surface Pro and Surface Laptop, explaining features such as:

- Recall: "You can find what you've seen on your PC using the clues you remember."

- Cocreator in Paint: "You can use your ink strokes and words to describe the image you want to create making it easier to create, refine and evolve your ideas in near real time."

- Live Captions: "You can turn audio that passes through your PC into a consistent English captions experience in real time on your PC."

Third-party apps whose developers tap the NPU for AI workloads also offer new experiences:

- DaVinci Resolve: "Effortlessly apply visual effects to objects and people using NPU-accelerated Magic Mask in DaVinci Resolve Studio."

- Cephable: "Stay in your flow with faster, more responsive adaptive input controls, like head movement or facial expressions via the new NPU-powered camera pipeline in Cephable."

- CapCut: "Remove the background from any video clip in a snap using Auto Cutout running on the NPU in CapCut."

A natural fit for AI, the Azure cloud figured prominently in Microsoft's announcements.

"This first wave of Copilot+ PCs is just the beginning," the company said. "Over the past year, we have seen an incredible pace of innovation of AI in the cloud with Copilot allowing us to do things that we never dreamed possible. Now, we begin a new chapter with AI innovation on the device. We have completely reimagined the entirety of the PC -- from silicon to the operating system, the application layer to the cloud -- with AI at the center, marking the most significant change to the Windows platform in decades."

Stay tuned for more AI news, with a healthy dose of Azure, at the Build 2024 conference, which runs through May 23.

About the Author

David Ramel is an editor and writer at Converge 360.