In-Depth

AI in 2025: Multimodal, Small and Agentic

If your organization hasn't started an AI adoption journey, it might already be falling behind. 2024 may have been a banner year for AI in the enterprise, but 2025 is promising even more improvements in capabilities, accessibility, privacy, security and more -- and they're going to affect every single part of your organization's IT infrastructure, whether you're ready for them or not.

To help organizations get ready, our AI expert Brien Posey weighed in with his views on the top AI trends going into 2025. He sees the transformative technology getting a huge boost from multimodal capabilities, along with smaller, more targeted large language models (LLMs) and autonomous agents able to do things on their own, even taking over computer operations.

Posey, a 22-time Microsoft MVP and well-known industry writer and speaker, shared his views last week in an online, half-day tech summit presented by Virtualization & Cloud Review titled "2025 AI Trend Watch Summit: Top Innovations To Watch, Adopt (and Avoid)," now available for replay.

"AI has seen tremendous gains over the last few years, but it's also seen some really spectacular failures", said Posey in the opening session of the three-hour event sponsored by Mimecast, Cohesity and Okta.

"AI has seen tremendous gains over the last few years, but it's also seen some really spectacular failures."

"AI has seen tremendous gains over the last few years, but it's also seen some really spectacular failures."

Brien Posey, 22-time Microsoft MVP and freelance technology author

So he was on hand to talk about some of those gains, some of the huge innovation coming, and also those epic fails in a presentation titled "The State of AI for Business Today: Top Trends, Notable Developments and the Biggest Flops."

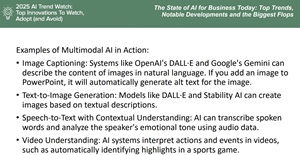

Multimodal AI

The No. 1 game-changer evolving now is multimodal AI, where advanced systems take in various forms of input, including text, images, audio and video, and generate responsive content that is more contextually rich and accurate, adding yet another human-like capability.

Multimodal Examples (source: Brien Posey).

"I consider this to be the biggest trend to watch going forward," Posey said. "I think this one is going to be super important."

The importance comes in a PC-based AI system that can accept text, video, images, typed information, sensor data, and pretty much anything else that you can think of, and bring all of that together to form a sort of cohesive understanding of the topic at hand.

"Now, multimodal input has existed for quite a while, but the thing about past systems is that they've essentially been completely disparate systems that have been bolted together," he explained. "So you might have a text processor and an image processor, and they just happen to be jammed together in one product. That isn't really what we're talking about here. What we're talking about is combining input from different types of sources and allowing the system to gain a richer understanding of the world around it and of the situation at hand by looking at all of those different sources as one. So multimodal AI is going to be extremely powerful, and I think it's really going to be the basis of what we see AI achieving over the next decade. I honestly believe that."

He went on to discuss multimodal AI in the context of Google's Gemini AI system and others and how it can be used in industries like retail, especially with virtual assistants.

Posey reiterated the importance of multimodal AI in response to an audience question about what he saw as the most important AI technological advancement coming in the next 10 years. "I think it's absolutely got to be multimodal input, being able to cohesively ingest input from multiple sources and make sense out of all of that. That's going to be huge."

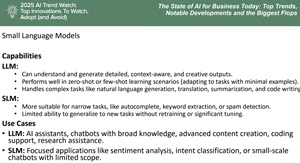

Going Small

Smaller LLMs, as opposed to larger, jack-of-all-trades systems trained on huge volumes of data, were Posey's second AI trend for 2025. "Small language models, on the other hand, are a lot more suitable for really specific tasks," he explained.

Small LLMs (source: Brien Posey).

"So for example, if you've ever used word and it suggests the next few words that it thinks you're going to type, that's likely done with a small language model. Also, if you're sending someone a text message on your phone, and it provides suggestions down at the bottom of the screen of words that you can tap so that you don't have to type those words -- that also tends to be done using a small language model. Small language models are also good for keyword extraction and for spam detection. So, now, small language models have their strengths, but they also have their weaknesses. They have a very limited abilities to generalize new tasks. Typically, if you try to get a small language model to do something new, you're going to have to either do a lot of retraining, or you're going to have to significantly rebuild the application."

As for the use cases they are well suited to, he mentioned things like sentiment analysis, intent classification and small-scale chatbots with limited scopes.

"So for example, if I've got a retail site and customers are leaving reviews. I don't have to read through every single review. I can use a small language model to gain customer sentiment from the reviews that have been left. So why use a small language model? Why not just use a large language model for everything? Because clearly, a large language model can handle most tasks that you throw at it.

"Well, not every task is going to require a large language model, and one of the benefits to using a small language model is that they can be trained a whole lot more quickly and easily and on much smaller data sets than a large language model, and the training process tends to be a lot faster. Also, small language models have a relatively modest hardware requirement, so some of the small language models out there can even run on edge devices, such as a PC and you may not even necessarily need internet connectivity. I've even seen small language models that can run on a Raspberry Pi device.

"Also small language models tend to have lower latency than large language models and faster response times, because if you think about it, by definition, a small language model is dealing with a much smaller data set, so it only stands for reason that it's going to be able to produce outputs a lot more quickly than a large language model. So as a best practice, if a task can be handled by a small language model, then it's probably going to be better in most cases, to use a small language model than to use a large language model."

Agentic AI

"So another turn to watch is AI agents," said Posey, who had previously discussed them in the context of Microsoft 365 Copilot capabilities such as the facilitator agent and self-help agents for IT and for HR, along with a translation agent that Microsoft is creating that to allow teams to translate audio from one spoken language to another. Coming up, however, are many more agents from companies other than Microsoft used for a huge variety of tasks across a number of different fields.

AI Agents (source: Brien Posey).

"Well, for starters, we have creative AI agents, things that can write articles or marketing copy or scripts," he said. "These same types of agents that perform creative work might also compose music or create artwork or produce or enhance video content, and I've already shown you quite a few examples of AI products that you can buy today that can do these sorts of things. There are also industrial AI agents, so AI can be used for predictive maintenance of equipment. So let's suppose that you've got some piece of industrial equipment and it produces massive log files showing everything that's going on with this equipment. Well, a specialized AI agent may be able to analyze those log files and find out if there were any entries in the log files that might indicate that there's a failure that's going to be happening at some point in the time, and then recommend that you perform some sort of maintenance on that piece of equipment before it has a chance to break down.""

Posey isn't alone in his enthusiasm for agentic AI, as research firm Gartner recently predicted the tech would be one of the top 10 strategic technology trends for 2025.

2025 Gartner Top 10 Strategic Technology Trends (source: Gartner).

"Also, industrial AI agents may be able to optimize production lines and help you to figure out ways of cutting out inefficiencies, and they might be able to help monitor conditions within a smart factory," Posey continued. "Also, you may be able to use AI agents for retail, things like generating personalized recommendations, predicting inventory requirements by looking at past sales patterns and current sales trends and analyzing customer feedback."

Posey's insights on other important trends -- along with some spectacular AI failures -- were just one part of last week's half-day summit, with expert Howard M. Cohen also presenting, along with third-party tooling discussed by Mimecast, Cohesity and Okta.

While we can't go into Howard's presentation here, he summed up his thoughts on agentic AI in a brand-new article on sister publication Pure AI titled "Welcome to the Agentic World."

One important aspect of attending such presentations live is the ability to ask questions of the presenters, a rare chance for expert one-on-one advice from the front lines. With that in mind, some upcoming events being presented by Virtualization & Cloud Review and sister publications in the next week include: