Next week is the big event. VMworld will return once again to San Francisco and connect the entire virtualization community together. This will be my third trip to VMworld, and I'm quite excited. While I am saving my snarky post about the five things I dislike most about VMworld for later this week, here are five things that I like best about VMworld:

- Community. I don't exactly know why or how, but virtualization "just gets" the whole technology community thing. Virtualization is unique among the greater IT landscape in that we have a passionate blog network, podcasts, events such as VMworld, colorful Twitter personalities and a common desire to tie all of these social media strategies together. Having these entities manifest themselves at a big event, such as VMworld, is a real treat.

- Partners. VMworld does a good job of effectively bringing every partner into one room in the Solutions Exchange pavilion. This is a good way to get a feel for what's out there when the Internet isn't enough.

- Sessions. Most sessions are presented by leaders in their field, and I've enjoyed almost every one I've ever attended. Here's a little secret about the sessions: VMware is very stringent about quality assurance for the presentations. Sure, there is a "what you can and cannot say" aspect to it, but there is also a very professional and effective communication strategy.

- Variety. As I have stated before, and as anyone who has attended will say, VMworld is not like a training class for a specific product. There is no "deliverable" that you walk away with, but what you do experience is a sampling of every aspect of your infrastructure (beyond virtualization). This can come in the form of conversations with people in similar situations. Everyone walks away with something positive from VMworld.

- Fun. VMworld has fun as part of the agenda. This can be the VMworld party, which will have INXS performing this year, vendor parties or even exhibit floor swag. I'm not one to take much notice of swag, but every year someone will provide some form of promotional material that is pretty cool. I've amassed a boomerang, ocarina, water bottles, T-shirt apparel for any occasion and an endless score of USB flash drives.

VMworld is what you make of it, and you can benefit from all of the above as an attendee. If you will be there, I encourage you to go out and take it all in! I'll be there, and if you want to meet me be sure to stop by a Tweetup (or SmackUp) for the Virtumania podcast crew. It's Sept. 1 at 1 p.m. at the Veeam Booth (#413). Register for the event at Twtvite.com.

What do you like most about VMworld? Share your comments below.

Posted by Rick Vanover on 08/24/2010 at 12:47 PM

I believe virtualization moves in cycles of sorts. When we got started with virtualization, we were focused on squeezing in enough memory to consolidate the workloads we wanted. Then new processors came out with more cores and better architectures, and we architected around them. Then we zeroed in on storage.

With storage, we definitely need to be aware of IOPs and datastore latency. But how much? You can turn to tools such as VKernel's free StorageVIEW to identify latency problems. But where do you really look for the latency? Do you look at the storage controller (if it provides this information), at the hypervisor with a tool like StorageVIEW, or in the operating system or application? Your mileage may vary with each method, so you may want to gather the data from each vantage point; your results surely won't be exactly the same all of the time.

IOPs are something that can give administrators headaches. Basically, each drive is capable of a certain IOP rate, and the rates of all the drives in a pool are added together, whatever the technology may be. So, if you have 12 spinning drives, each capable of 150 IOPs, that collection of disk resources can deliver up to 12 × 150 = 1,800 IOPs. Many other factors are involved, and I recommend reading this post by Scott Drummonds on how IOPs impact virtualized workloads, as well as other material on the Pivot Point blog.
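The pooled-IOPs arithmetic can be sketched in a few lines of Python. This is a minimal illustration that treats a pool's capability as the simple sum of per-drive ratings; the function names are hypothetical, and the sketch deliberately ignores the many other factors involved (RAID write penalties and caching among them), so treat its output as a raw ceiling rather than a sizing answer.

```python
import math


def pooled_iops(drive_count: int, iops_per_drive: int) -> int:
    """Raw pooled IOPs: every drive's rating added together (ignores RAID, cache)."""
    return drive_count * iops_per_drive


def drives_for_target(target_iops: int, iops_per_drive: int) -> int:
    """Fewest drives whose raw pooled IOPs meets a target."""
    return math.ceil(target_iops / iops_per_drive)


print(pooled_iops(12, 150))          # 1800, the example above
print(drives_for_target(2000, 150))  # 14, since 13 drives reach only 1950
```

Working backward from a target is often the more useful direction, since it shows how quickly the spindle count grows when an application owner finally does hand over an IOPs number.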

For the day-to-day administrator concerned with monitoring the run mode of a virtualized infrastructure, do you look at this all day long? I'll admit that I jump into the datastore latency tools when there is a problem. My biggest issue is that application owners can't give me information about the IOPs of their applications. Getting information about read and write behavior is also a challenge.

How frequently do you monitor these two measures and how do you do it? Share your comments here.

Posted by Rick Vanover on 08/19/2010 at 12:47 PM

For virtualization administrators, there are a lot of free things out there today. But it becomes harder to see how much you can actually do with all of this free stuff. Citrix takes a different approach, however. I have long thought that XenServer, the Citrix offering for virtualizing server infrastructures, has been the best of the free offerings from Microsoft, VMware and Citrix. I'll go out on a limb and say XenServer is easier to set up than Hyper-V; you can flame me later on that.

The free stuff topic is one of the most passionate topics I deal with as a blogger and in my community interaction. I regularly receive e-mails from and talk with administrators who want to get started with virtualization (I volunteered free help!), yet don't have the resources that other organizations have. Inquiries frequently come from non-profit, educational or government installations that are very small and are run by people who have to balance everything on their own. In these situations where I volunteer help, the question usually ends up being: Which free product should I use?

When it comes to server virtualization for most datacenter consolidation projects, I usually end up recommending Hyper-V or XenServer simply because of the features offered. VMware isn't even in the conversation other than me saying, "I really know how to do it this way, but you can get a lot more out of the free offering from either of these two."

Citrix continues this trend with the release candidate of XenClient. The XenClient Express product is a type 1 client hypervisor for laptops. It was released in May and the second release candidate will be out later in 2010.

Recently, I had a chance to chat with Simon Crosby on the Virtumania podcast, episode 23. Simon gave a great overview of some XenClient use cases as well as how each use case can roll in a number of different technologies. Simon also shed some light on the frequently confusing Microsoft relationship with Citrix. Their relationship has been unique for over a decade, and I've settled on calling it "co-opetition" between the two software giants.

What is clear is that Citrix is still a player in the virtualization space. Whether through new innovations such as XenClient, a strong XenServer offering or the robust HDX display protocol, Citrix will drop in solutions across the stack. For me, it means I get some lab time with XenClient.

Have you tinkered with or read about XenClient? If so, share your comments here.

Posted by Rick Vanover on 08/17/2010 at 12:47 PM

The old saying that history repeats itself does indeed manifest itself in the IT realm. Recently, I read Brandon Riley's Virtual Insanity post where he asks: Is VMware the Novell of Virtualization?

Virtual Insanity is a blog I read frequently. Regular contributors include a number of VMware and EMC employees. Now that the transparency topic is out of the way, let's talk about the idea that VMware is acting like Novell did years ago, when NetWare was pitted against Windows NT. Back in the mid-1990s, when I was mulling a move to Windows NT for file servers, a consultant working with me asked, "Why would you replace your file server with an inferior product?"

That was so true then, technically speaking. NetWare 3 and 4 back in the day offered file server functionality that still to this day is not matched by the Microsoft offering. Don't believe me? Look up the NetWare flag command and see if you can do all of that with a Windows file system.

When it comes to virtualization, will Hyper-V or some other virtualization platform supersede VMware, which currently has the superior product? My opinion is that this will not be the case.

VMware innovates at a rate never seen from NetWare, the fallen hero, or from other products that may have "lost" the battle with Microsoft. I will concede that innovation isn't enough, but I see the difference-maker as the greater ecosystem of infrastructure technologies, which are moving at this same rate. Whether it be cloud solutions, virtual desktops, server virtualization or virtualized applications, the entire catalog becomes a set of solutions that make technology decisions easy for administrators. Couple that with the superior technology, and I think the case is made that VMware will not share Novell's fate.

Where do you stand on the fate of VMware, given the history of NetWare? Share your comments here.

Posted by Rick Vanover on 08/11/2010 at 12:47 PM

One of the most defining community aspects of virtualization is manifested annually at VMworld. This year in San Francisco, I am particularly intrigued about what is coming, partly because I am still somewhat surprised and perplexed that vSphere 4.1 was released so close to the upcoming VMworld conferences.

I am perplexed because VMware has historically strived to have the conferences center around a major announcement. Since vSphere 4.1 was recently released, the major announcements will presumably be about "something else." The enticement is definitely enhanced with social media sites such as Twitter. VMware CTO Steve Herrod recently tweeted, "Most announcements I will have ever done in keynote!" Coming into this conference, I don't have any direct information about what the announcements may be, which piques my curiosity.

What value do announcements have anyway? At VMworld 2008, VMware announced and previewed vSphere, yet we patiently held on until May 2009 for the release. An announcement lacks immediate relevance for most of the virtualization community, but it definitely helps shape future decisions for infrastructure administrators. Larger, more rigid organizations may keep a "minus 1" level of version currency. Other organizations may work aggressively to engage in pilot programs and stay on the cutting edge of the technologies. The organizational tech climate will truly vary from customer to customer among VMworld attendees. What I don't want to do with announcements, or more specifically the anticipation of announcements, is set my expectations too high.

Make no mistake, I look forward to VMworld more than any other event that I participate in. What will VMworld be for me this year? Hopefully fun, informative and community-rich! Hope to see you there!

Posted by Rick Vanover on 08/10/2010 at 12:47 PM

One of the changes in the recent vSphere 4.1 release is the introduction of per-VM pricing for advanced features. My initial reaction to this is negative, as I'd like my VM deployments to be elastic, able to expand and contract features without cost increases. Grumbling aside, I decided it was important to chew on the details of per-VM pricing for vSphere.

The most important detail to note is that per-VM pricing applies only to virtual machines managed or monitored by vCenter AppSpeed, CapacityIQ, Chargeback and Site Recovery Manager. Traditional VMs that are not monitored or managed by these add-ins are not (yet) in the scope of per-VM pricing. Other vSphere products not included in per-VM pricing are vCenter Heartbeat, Lab Manager, Lifecycle Manager and vCenter Server.

For the products that do offer per-VM pricing, licenses will be sold in packs of 25 virtual machines. Personally, I think this unit is too large. If pricing is per VM, I think the unit needs to come down, perhaps to 5 VMs, with the 25-VM unit also available. Likewise, a 100-VM licensing unit might front-load the licensing better than a 25-VM chunk of licenses. Here's how it breaks down per 25-pack of per-VM licenses:

- vCenter AppSpeed: $3,750

- vCenter CapacityIQ (per-VM pricing available late 2010/early 2011): $1,875

- vCenter Chargeback: $1,250

- vCenter Site Recovery Manager: $11,250

(VMware states that "VMware vCenter CapacityIQ will be offered in a per VM model at the end of 2010/early 2011, and you can continue buying per processor licenses for vCenter CapacityIQ until then.")

Each product has a different per-VM cost, but that is expected as they each bring something different to the infrastructure administrator. Breaking each of these down to a per-VM price, the 25 packs equate to the following:

- AppSpeed: $150 per VM

- CapacityIQ: $75 per VM

- Chargeback: $50 per VM

- Site Recovery Manager: $450 per VM
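The per-VM figures are simply each 25-pack price divided by 25. The sketch below reproduces that division and, to illustrate why a 25-VM unit feels large, rounds a VM count up to whole packs. It assumes licenses can only be bought in full 25-packs, as described above, and uses the list prices quoted there; the function names are hypothetical.

```python
import math

PACK_SIZE = 25  # per-VM licenses are sold in 25-VM packs

# List price per 25-pack, in USD, as quoted above
PACK_PRICES = {
    "AppSpeed": 3750,
    "CapacityIQ": 1875,
    "Chargeback": 1250,
    "Site Recovery Manager": 11250,
}


def price_per_vm(pack_price: int, pack_size: int = PACK_SIZE) -> float:
    """Nominal per-VM price when a full pack is used."""
    return pack_price / pack_size


def license_cost(product: str, vm_count: int) -> int:
    """Cost to cover vm_count VMs, rounding up to whole packs."""
    packs = math.ceil(vm_count / PACK_SIZE)
    return packs * PACK_PRICES[product]


for name, price in PACK_PRICES.items():
    print(f"{name}: ${price_per_vm(price):.0f} per VM")

# 30 protected VMs still require two 25-packs of Site Recovery Manager
print(license_cost("Site Recovery Manager", 30))  # 22500
```

With 30 VMs, the effective Site Recovery Manager cost works out to $750 per VM rather than $450, which is the kind of gap a smaller licensing unit would close.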

To be fair, I don't think these prices are too bad. Compare them with your costs for operating systems, systems management software packages or other key infrastructure items, and you'll notice they're right in line. The issue I have is that most organizations still do not have a well-defined model for allocating infrastructure costs, and for those organizations, solving that will be the bigger challenge.

What concerns me is whether these products, or future products that introduce per-VM pricing, will justify the "new" expense. Each of the VMs now subject to per-VM pricing ran fine yesterday, so why is VMware asking for more money? On the per-VM pricing sheet, VMware explains that current customers will soon receive details on their specific situations. Simply speaking, though, if I am a vCenter Site Recovery Manager customer today, I've already invested what I need to provide that environment. If the SnS renewal for Site Recovery Manager comes due and these workloads are now in the per-VM model, I foresee a conversation where VMware might ask for more money.

Like all administrators, I just hate going back to the well for more money. I just hope this doesn't become the case.

Posted by Rick Vanover on 08/05/2010 at 12:47 PM

One of the most contentious issues that can come up in virtualization circles is the debate on whether to use blades or chassis servers for virtualization hosts. The use of blades did come up in a recent Virtumania podcast discussion. While I've worked with blades over the years, most of my virtualization practice has been with rack-mount chassis servers.

After discussing blades with a good mix of experts, I can say that blades are not for everybody and that my concerns are not unique. Blades have a marginally higher cost of entry, meaning that as many as six blades may be required before the hardware purchase becomes more attractive than the same number of chassis servers. The other concern I've always had with blades is that they effectively enlarge the failure domain to include another component, the blade chassis. Lastly, I don't find myself space-constrained, so why would I use blades?
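The cost-of-entry argument boils down to a break-even calculation: a blade purchase carries the fixed cost of the enclosure, so blades only win once enough of them share it. Here is a minimal sketch, assuming a single enclosure and flat per-unit prices. Every price in the example is hypothetical, not a vendor quote, and the model leaves out networking, power and management differences, so it illustrates the shape of the argument rather than reproducing the "six blades" figure.

```python
from typing import Optional


def blade_break_even(enclosure_cost: float, blade_cost: float,
                     rack_server_cost: float, max_servers: int = 32) -> Optional[int]:
    """Smallest server count n where one enclosure plus n blades costs no more
    than n rack-mount servers; None if that never happens up to max_servers."""
    for n in range(1, max_servers + 1):
        if enclosure_cost + n * blade_cost <= n * rack_server_cost:
            return n
    return None


# Hypothetical prices, for illustration only (not vendor quotes)
print(blade_break_even(enclosure_cost=10_000, blade_cost=4_000, rack_server_cost=6_000))  # 5
```

If a blade costs as much as a rack server, the enclosure is never paid back and the function returns None.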

Yet, it turns out that blades are advancing quite well with today's infrastructure. In fact, they're progressing more swiftly than my beloved rack-mount chassis server. Today's blades offer superior management and provisioning, ultra-fast interconnects and CPU and memory capacity on par with their full-size server counterparts. A common interconnect between blades is 10 Gigabit Ethernet, which, in virtualization circles, will lead the way for ultra-fast virtual machine migrations and can make Ethernet storage protocols more attractive.

So, why not just go for blades? Well, that depends. Too often, other infrastructure components are at different phases of their lifecycles. The biggest culprit right now is 10 Gigabit Ethernet; Cisco's Unified Computing System, for example, requires a 10 Gigabit uplink. I think that once this networking technology becomes more affordable, we'll see the biggest obstacle to blades removed. Blades can also allow administrators to take advantage of additional orchestration components that are not available on traditional servers. The Hitachi Data Systems Unified Compute Platform blade infrastructure allows the blades to be pooled together to function as one logical symmetric multiprocessing server.

Blades bring the features; that is for sure. Which platform do you use, and why? Are you considering changing to blades? Share your comments here.

Posted by Rick Vanover on 08/03/2010 at 12:47 PM

Regardless of where we sit on the VMware/Citrix/Microsoft virtualization debate, there is one indisputable fact: Microsoft has the largest partner ecosystem. Its size will give the company a certain amount of market share once virtualization solutions start to emerge. In episode 13 of the Virtumania podcast, Gartner research director Chris Wolf stated that betting against Microsoft in the long run has not usually proven successful.

Recently, I was briefed by VM6 Software on its VMex solution, which can deliver a complete infrastructure solution in a simple fashion. VMex does this by using commodity servers and networking equipment to deliver virtualization hosts, management tools, a VDI solution and a shared storage solution, all through a single interface. The VMex solution combines all of these roles on two standard Windows Server 2008 R2 servers and manages them centrally.

Figure 1. The VMex interface, showing features in the Enterprise edition.

What caught my eye in the demo is that the tree view is incredibly intuitive. I'll admit that I am a details guy, favoring the ability to turn knobs and dials across the components of the infrastructure. VMex takes all that away, and for the small business or remote branch, I see this as a perfect solution. It's easy to set up all of the necessary components of a virtualized infrastructure: shared storage, virtual machines, virtual desktops, management and networking. In the higher-end editions of VMex, administrators can easily pool servers together. There is a host-to-host management network that coordinates activities between the two servers, as well as a storage network between the hosts.

The Enterprise edition of VMex has additional features (see Fig. 1). The VMex Light product is a single server solution that starts at $1,000 and goes up from there. VM6 wouldn't provide a pricing structure for the enterprise solution that offers migration and multiple site configurations. More information on VMex can be found here.

Would you go for Microsoft virtualization in a box? Share your comments here.

Posted by Rick Vanover on 07/29/2010 at 12:47 PM

A lot of chatter on vSphere 4.1 is flying through the blogosphere, and rightfully so. Aside from rolling lots of features into the enterprise-class virtualization suite, VMware is rolling in a number of requirement changes as well.

When VMware released vSphere, we saw for the first time that ESX and ESXi had hard requirements for x64 processors. It was really to be expected, as enterprise virtualization would not be well served on equipment that cannot support x64 at this time. I encourage each administrator to read through the vSphere Compatibility Matrix (PDF here) as well as the Hardware Compatibility Guide.

One observation is that many of the infrastructure components are being updated, rather significantly, when going from vSphere 4.0 to 4.1. Here is a list of some of the requirements that may catch administrators off guard:

- vCenter Server must be installed on an x64 system. This means that the days of Windows Server 2003 x86 installs as vCenter Servers are over. The best bet, of course, is to install it on Windows Server 2008 R2, which is available only in 64-bit editions.

- There are a number of database engine landmines to navigate. For example, according to the compatibility matrix, the vCenter Update Manager database can run on SQL Server 2005 Standard Edition with SP2, but not on the 64-bit edition of SQL Server 2005 SE with SP2. An infrastructure administrator trying to get ahead of the curve by implementing a 64-bit database server or cluster may get stuck here. The vCenter Server database works on both of those SQL Server 2005 platforms, however.

- Windows 2000 cannot run the vSphere Client. This isn't much of a roadblock and was somewhat expected, as the platform has now fallen out of Microsoft extended support.
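For administrators planning the move, the three requirement changes above can be turned into a quick pre-upgrade check against an inventory. This is a hypothetical sketch: the `Component` record, the role names and the inventory are invented for illustration, and the rules encode only the three items listed above, not the full vSphere Compatibility Matrix, which remains the authority.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Component:
    role: str                        # hypothetical: "vcenter_server", "vum_database" or "vsphere_client"
    os: str                          # operating system name, e.g. "Windows Server 2003"
    arch: str                        # "x86" or "x64"; for vum_database, the SQL Server build
    db_engine: Optional[str] = None  # e.g. "SQL Server 2005 SE SP2"


def upgrade_blockers(components: List[Component]) -> List[str]:
    """Flag only the three vSphere 4.1 requirement changes discussed above."""
    blockers = []
    for c in components:
        if c.role == "vcenter_server" and c.arch != "x64":
            blockers.append(f"vCenter Server on {c.os} ({c.arch}) must move to an x64 system")
        if (c.role == "vum_database" and c.db_engine == "SQL Server 2005 SE SP2"
                and c.arch == "x64"):
            blockers.append("Update Manager database on 64-bit SQL Server 2005 SE SP2 is not supported")
        if c.role == "vsphere_client" and c.os == "Windows 2000":
            blockers.append(f"vSphere Client cannot run on {c.os}")
    return blockers


# Hypothetical inventory for illustration
inventory = [
    Component("vcenter_server", "Windows Server 2003", "x86"),
    Component("vum_database", "Windows Server 2003 x64", "x64", "SQL Server 2005 SE SP2"),
    Component("vsphere_client", "Windows 2000", "x86"),
]

for blocker in upgrade_blockers(inventory):
    print(blocker)
```

Every entry in this inventory trips one rule, which is the scenario where a fresh install starts to look easier than an upgrade.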

While I don't think x86 is entirely dead, it is quickly disappearing from the server side of the infrastructure footprint. ISV support of x64 server applications is an entirely different, emotionally charged discussion, but the end of the line will likely come when x86 clients fall off the roadmap. VMware is nudging us along swiftly, and rightfully so at this point in technology. For many administrators, this may be the release where the process is more of a new install than an over-the-top upgrade.

How do the vSphere 4.1 requirements impact your infrastructure configuration? Share your comments here.

Posted by Rick Vanover on 07/27/2010 at 12:47 PM

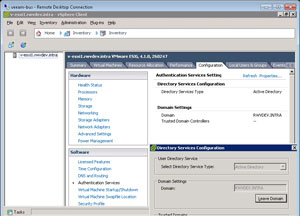

One of the most lauded features of vSphere 4.1 is the built-in Active Directory authentication engine for ESXi hosts. It's very easy to configure, and it works for both free ESXi installations and hosts managed by vCenter. The Active Directory-integrated security is configured in the Authentication Services section of each host (see Fig. 1).

Easy as it is to configure, I still recommend reading Maish's post on the Technodrone on how to do it.

So, while configuration is easy enough, I have confirmed with Microsoft that it's not that easy to manage from a licensing perspective. As it turns out, enabling Active Directory-integrated security to the ESXi host creates a computer account for each host. It's actually similar to what XenServer did in the 5.5 release, when Citrix added Active Directory authentication.

Using Active Directory-integrated security in this configuration can impact your licensing responsibility if device client access licenses (CALs) are used. I reached out to Microsoft to clarify how this applies. The good news is that there is no server licensing impact for the integrated authentication feature. A Microsoft spokesperson provided this language to clarify server licensing:

All Windows Server licenses are assigned to either the server itself or a processor within the server. The licensing or physical deployment of any other product like another company's hypervisor or management tools, in no way impacts the licensing of our server products

For CALs, it is a different story. Basically, if User CALs are the licensing mechanism, there is no licensing cost for an ESXi host with Active Directory authentication. There is a cost if you're using Device CALs. Microsoft provided this language to clarify how CALs apply to this configuration:

The answer is "no" if a customer is using "User CALs" since then they can access any server from any device. If the customer is using "Device CALs" then each device used to access the server would need to be licensed with a CAL. So "yes".

The "yes" and "no" references are answers to the questions I had presented to Microsoft.

Figure 1. Configuring Authentication Services on an ESXi host.

With any licensing topic, I recommend that you consult your Microsoft licensing professional about your configuration. You can find a good overview comparing device vs. user CALs on Microsoft's site here. All in all, I don't think the licensing issue is a big deal, and most organizations can address it via a regular true-up of their Enterprise Agreements with Microsoft.

Does this licensing note impact your infrastructure administration practice? If so, share your comments here.

Posted by Rick Vanover on 07/22/2010 at 12:47 PM

As we review more details of VMware's vSphere 4.1 update, we find more nuggets to latch onto that have real use cases.

One feature that I am somewhat mixed on is the vStorage APIs for Array Integration (VAAI). I'm not questioning whether it is a good feature; my question is how much of the storage ecosystem will take advantage of this amazing feature. As of its release, only a handful of products support VAAI, and they are from the usual suspects: NetApp, Hitachi Data Systems, EMC and 3Par. While these are familiar players in enterprise storage, many VMware installations use other products that do not yet support VAAI. I hope that we see the larger storage ecosystem embrace VAAI support.

What does VAAI do? Quite simply, it offloads to the storage array some of the largest non-compute workloads in which an ESXi host interacts with storage. According to this VAAI KB article on the VMware Web site, VAAI supports these three operations:

- Atomic Test & Set (ATS), which is used during creation of files on the VMFS volume

- Clone Blocks/Full Copy/XCOPY, which is used to copy data

- Zero Blocks/Write Same, which is used to zero-out disk regions

With these operations, VAAI pushes work that is best handled by the storage processor down to the storage hardware. Hu Yoshida, VP and CTO at Hitachi Data Systems, took particular interest in the VAAI feature in his recent blog post, where he described VAAI as a transforming aspect of the datacenter that enables more performance for the virtualized infrastructure. Other storage vendors are touting their VAAI integration as well, including some that previewed VAAI last year, as NetApp did at VMworld 2009.
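To see why offloading matters, here is a deliberately simplified model of how much data crosses the link between an ESXi host and the array for two of the operations listed above. It is a toy sketch, not how ESXi or any array implements VAAI: it counts only data payload, treats each offloaded operation as a single command, and ignores command overhead and per-command size limits. The 40 GiB disk and the 512-byte block size are assumptions for illustration.

```python
GiB = 1024 ** 3


def copy_payload_bytes(size_bytes: int, offloaded: bool) -> int:
    """Data bytes crossing the host-array link to clone a region.

    Host-based copy: the host reads the data, then writes it back (2x size).
    Offloaded Full Copy/XCOPY: the array copies internally; only a command
    crosses the link, modeled here as zero data bytes.
    """
    return 0 if offloaded else 2 * size_bytes


def zero_payload_bytes(size_bytes: int, offloaded: bool, block_size: int = 512) -> int:
    """Data bytes crossing the link to zero a region.

    Host-based: every zero is written over the link.
    Offloaded Write Same: one block of zeros is sent and the array repeats
    it across the range, modeled here as a single command.
    """
    return block_size if offloaded else size_bytes


# Cloning and zeroing a hypothetical 40 GiB virtual disk
print(copy_payload_bytes(40 * GiB, offloaded=False) // GiB)  # 80
print(copy_payload_bytes(40 * GiB, offloaded=True))          # 0
print(zero_payload_bytes(40 * GiB, offloaded=False) // GiB)  # 40
print(zero_payload_bytes(40 * GiB, offloaded=True))          # 512
```

Even in this crude form, the model shows why cloning and zeroing are the operations worth offloading: without VAAI, their cost scales with the size of the disk and lands on the host and the storage fabric.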

While the current inventory of storage vendors that support VAAI will surely grow, I hope to see wide adoption across the VMware Hardware Compatibility List. Ideally, VAAI support would be a requirement for storage partners, ensuring a consistent experience across all products. While I think that is quite the wish-list item, administrators can turn the tables and make it a requirement for new storage products they purchase.

What does VAAI do for you with vSphere 4.1? Share your comments here.

Posted by Rick Vanover on 07/20/2010 at 12:47 PM

Virtualization Review Editor Bruce Hoard points out in this post that VMware has trimmed costs on some of the vSphere 4.1 suite's offerings. It helps SMBs and is a huge step in the right direction.

I want to focus specifically on the Essentials Plus Kit offering of vSphere, which has the following features:

- ESXi host memory up to 256 GB

- Up to 6 cores per processor

- Up to 3 servers with up to 2 sockets

- Thin provisioning of VMDK files

- VMware Update Manager

- vStorage APIs for Data Protection and Data Recovery

- vMotion and High Availability

This offering, again for up to six sockets across three servers, is $2,995. It's not priced per processor like other offerings we may be familiar with, and does not include VMware’s Support and Subscription (SnS).
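Because the kit is priced as a flat bundle rather than per processor, it helps to work out what it costs per socket when fully used. Here is a quick sketch using only the limits listed above. It assumes all three servers are two-socket machines with six-core processors, and it leaves SnS out, since the kit price excludes it.

```python
# Essentials Plus Kit limits, as listed above
KIT_PRICE_USD = 2995     # flat bundle price, excludes SnS
MAX_SERVERS = 3
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 6

max_sockets = MAX_SERVERS * SOCKETS_PER_SERVER   # 6 sockets
max_cores = max_sockets * CORES_PER_SOCKET        # 36 cores
price_per_socket = KIT_PRICE_USD / max_sockets    # effective price if fully used

print(max_sockets, max_cores, round(price_per_socket, 2))  # 6 36 499.17
```

A partly populated deployment, such as two single-socket servers, spreads the same $2,995 over fewer sockets and raises the effective per-socket price accordingly.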

At that price, even without SnS, it's perfect for smaller datacenters, especially for organizations that have a mix of small and large datacenters. The smaller datacenters may be remote and have limited staff, yet administrators want to deliver the same functionality and availability that we have come to know and love with larger vSphere installations.

Notably missing from the Essentials Plus Kit compared to 'traditional' vSphere administration are DRS, FT and Storage vMotion. While the kit does include vMotion, virtual machine migrations will be manual rather than invoked automatically by vCenter. For many environments dealing with smaller, remote datacenters, I see this as a perfect fit.

The lack of Storage vMotion likely is not much of an issue either, as chances are there are only one or two LUNs in place for smaller environments.

Does this new offering make a big difference in provisioning virtualized servers for smaller environments in your organization? I think it does. Share your comments here.

Posted by Rick Vanover on 07/15/2010 at 12:47 PM