One of vSphere's premier features is the Distributed Resource Scheduler (DRS). DRS is applied to clusters, but what about exceptions that you may want to implement? Sure, you can use DRS rules, but you will quickly realize that there is still room for improvement in applying your management requirements to the infrastructure.

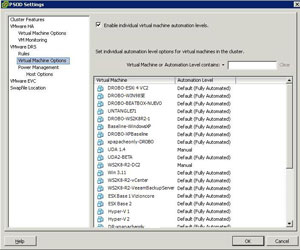

Among the easiest ways to add a level of granularity to a cluster is to implement a per-virtual machine DRS configuration. The primary use case for an individual virtual machine DRS automation level setting is to keep a specific VM from migrating away from a designated host. Other use cases can include allowing development workloads, but not production systems, to migrate freely. You can find this option in the properties of the DRS cluster in the virtual machine options section (see Fig. 1).

Likewise, you can configure the entire cluster, as well as specific hosts, to be eligible or ineligible for Distributed Power Management, which powers down and resumes hosts based on whether they are under-utilized relative to the DRS configuration level.

Individual virtual machine DRS automation level settings coupled with separation (or keep together) rules will allow you to craft somewhat more specific settings for your requirements. Of course, we never seem to be satisfied in how specific we can make our requirements for infrastructure provisioning.

Figure 1. Allowing specific virtual machine DRS rules can keep a virtual machine from migration events, such as vMotion.

I've never really liked the DRS rules, as they don't allow multi-select for a single separation or keep-together assignment without multiple rule configurations. For example, I want to keep virtual machine A on a separate host from virtual machines B through Z. You can easily keep them all separate, but that doesn't address the preference I have.
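To make the rule-count problem concrete, here is a minimal Python sketch. It does not call any vSphere API; the VM names and the `pairwise_separation_rules` helper are hypothetical and exist only for illustration. It assumes that a single separation rule keeps all of its members apart from one another, so isolating A from B through Z without also separating B through Z from each other takes one two-member rule per pairing.

```python
from string import ascii_uppercase


def pairwise_separation_rules(isolated_vm, other_vms):
    """Return one two-member separation rule per (isolated_vm, other) pair.

    Each rule is a plain dict; nothing is sent to vCenter.
    """
    return [
        {"name": f"separate-{isolated_vm}-{other}", "vms": (isolated_vm, other)}
        for other in other_vms
    ]


# Hypothetical inventory: VM "A" must avoid the hosts running "B" through "Z".
others = [letter for letter in ascii_uppercase if letter != "A"]
rules = pairwise_separation_rules("A", others)

print(len(rules))        # 25 rules for a single preference
print(rules[0]["name"])  # separate-A-B
```

Under that assumption, one simple preference turns into 25 separate rules to create and maintain, which is the administrative overhead described above.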

The best available option to address some of the groupings and pairings is to create a vApp. The vApp is an organizational feature of vSphere that allows pre-configured startup order, memory and CPU configuration limits, IP addressing as well as documentation for application owner information. The vApp doesn't become a manageable object like a VM for separation and user-defined DRS automation levels, however.

Have you ever used the DRS automation level on a per-virtual machine basis? If so, share your usage experience here.

Posted by Rick Vanover on 05/06/2010 at 12:47 PM (4 comments)

While the larger technology ecosystem has fully adopted server virtualization, there still are countless line of business applications that have blanket statements about not supporting virtualization. Infrastructure teams across the land have adopted a virtualization-first infrastructure deployment strategy, yet occasionally applications come up that aren't supported in a virtual environment.

The ironic part here is that if you ask why, you could be quite entertained by the answers received. I've heard everything from vendors simply not understanding virtualization to things such as a customer trying to run everything on VMware Workstation or VMware Server. But the key takeaway is that most of the time, you will not get a substantive reason as to why a line of business application is not supported as a virtual machine.

In an earlier part of my career, I worked for a company that provided line of business software to an industrial customer base. Sure, we had the statement that our software was working as close to real-time as you will find in the x86 space, but customers wanted virtualization. We had a two-fold approach to see if virtualization was a fit. The first was to make sure the hardware requirements could be met, as there may have been custom devices in use via an accessory card that would have stopped virtualization as a possibility. For the rest of the installation base, if all communication could be delivered via Ethernet we'd give it a try, but reserve the right to reproduce on physical. The reproduce on physical support statement is a nice exit for the software vendors to really dodge a core issue or root cause resolution for a virtualized environment.

In 2010, I see plenty of software that I know would be a fine candidate for virtualization -- yet the vendor doesn't entertain the idea at all. The most egregious example is a distributed application that may have a number of application and Web servers with a single database server. The database server may only have a database engine, such as Microsoft SQL Server, installed and no custom configurations or software. Yet the statement stands that virtualization isn't supported. Internally, I advocate against these types of software titles to the application owners. The best ammunition I have in this case is support and availability. It comes down to being able to manage it twice as well on a virtual machine at half the cost. The application owners understand virtualization in terms like that, and I've been successful in helping steer them towards titles that offer the same functionality and embrace virtualization.

I don't go against vendor support statements in my virtualization practice; however, I've surely been tempted. I don't know if I'll ever get a 100 percent virtual footprint. Yet I do measure my infrastructure's virtualization footprint in terms of "eligible systems." So, I may offer a statement like "100 percent of eligible systems are virtualized in this location."

How do you deal with software vendors that offer the cold shoulder to virtualization? Share your comments below.

Posted by Rick Vanover on 05/03/2010 at 12:47 PM (1 comment)

Virtualization is a technology that can apply to almost any organization in a number of ways. The strategy that the small and medium business may use for virtualization would differ greatly from that of a large enterprise. Regardless of size, scope and virtualization technologies in use, there are over-arching principles that guide how the technology is to be implemented.

It is easy to get hooked on the cool factor of virtualization, but we also must not forget the business side. In my experience, it is very important to be able to communicate a cost model and a return on investment analysis for your technology direction. Delivering these end products to management, a technology steering committee or your external clients will be a critical gauge to the success of current and future technologies. Virtualization was among the first technologies that made these tasks quite easy.

The server consolidation approach is an easy cost model to make by outlining the costs for a single server's operating system environment (OSE) in the physical world, compared to that of the virtual world. It is important to distinguish between a cost model and chargeback, as few organizations do formal chargeback at the as-used level for processor, memory and disk utilization. While it seems attractive to have the electric company approach in billing for as-used slices of infrastructure, most organizations prefer fixed price OSE models for budget harmony. To be fair, there are use cases for as-used chargeback; I just haven't been in those circles.

So what does a cost model look like? What is the deliverable? This can be a one-page spreadsheet with all of the costs it takes to build a virtual infrastructure or it can be an elaborate breakdown of many scenarios. I tend to prefer the one-page spreadsheet that has the upfront costs simply divided by a target consolidation ratio. This will simply say what it takes to provide infrastructure for a pool of OSEs. You can then divide this to show what it may look like in the 10:1, 15:1 or higher consolidation ratios.
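As a rough illustration of the one-page approach, the arithmetic can be sketched in a few lines of Python. The dollar figure, host count and the `cost_per_ose` helper are hypothetical placeholders rather than numbers from any real deployment; substitute your own upfront costs.

```python
def cost_per_ose(upfront_cost, hosts, consolidation_ratio):
    """Upfront cost divided by the number of OSEs the host pool can hold."""
    total_oses = hosts * consolidation_ratio
    return upfront_cost / total_oses


# Hypothetical inputs: 4 hosts and 200,000 in total upfront cost
# (servers, storage, licensing and so on).
UPFRONT_COST = 200_000
HOSTS = 4

for ratio in (10, 15, 20):
    per_ose = cost_per_ose(UPFRONT_COST, HOSTS, ratio)
    print(f"{ratio}:1 consolidation -> {per_ose:,.2f} per OSE")
```

The same upfront spend is simply spread over more OSEs as the ratio climbs, which is why the target consolidation ratio is the single most sensitive input in a model like this.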

The next natural step is to deliver an ROI on virtualization technology. Virtualization for the data center (specifically server consolidation) is one of the easier ROI cases to make. Many organizations have not had a formal requirement to document an ROI, but it may be a good idea to do so. It will increase the likelihood that future technologies will work with virtualization, as well as increase your political capital within the organization.

For an ROI model, you can simply compare the cost model of the alternative (physical servers) with the cost model of a virtualized infrastructure. For most situations, there will be a break-even point in costs. It's important to not over-engineer the virtualization footprint from the start yet set realistic consolidation goals.
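A minimal sketch of the break-even comparison follows, assuming a deliberately simple model: the virtual infrastructure has one fixed upfront cost plus a small incremental cost per OSE, while the physical alternative costs a flat amount per server. The `break_even_oses` helper and all of the figures are hypothetical.

```python
import math


def break_even_oses(virtual_upfront, virtual_cost_per_ose, physical_cost_per_server):
    """Smallest OSE count at which the virtual cost model is no more
    expensive than buying one physical server per OSE.

    Returns None if virtualization never breaks even under these inputs.
    """
    saving_per_ose = physical_cost_per_server - virtual_cost_per_ose
    if saving_per_ose <= 0:
        return None
    return math.ceil(virtual_upfront / saving_per_ose)


# Hypothetical figures: 200,000 upfront, 500 per additional virtual OSE,
# and 6,000 per physical server.
print(break_even_oses(200_000, 500, 6_000))  # 37
```

With these placeholder numbers, the virtual infrastructure becomes the cheaper option once it hosts 37 OSEs. A real model would also account for power, cooling, support contracts and hardware refresh cycles.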

An effective cost model and ROI can be created easily with organizations that are already using virtualization to some extent. For organizations that are new to virtualization, it is important to adequately plan for capacity when considering migrating to a consolidated infrastructure. Check out my comparative report of three popular capacity planning tools to help make sure you don't under- or over-provision your virtual environment from the start.

How do you go about the business side of virtualization? Do you use formal cost models and ROI analyses in your virtualization practice? Share your comments here.

Posted by Rick Vanover on 03/29/2010 at 12:47 PM (0 comments)

Storage and related administration practices are among the most critical factors to a successful virtualization implementation. One of the vSphere features that you may overlook is the paravirtual SCSI (PVSCSI) adapter. The PVSCSI adapter allows for higher-performing disk access and relieves load on the hypervisor CPU. PVSCSI works best for virtual workloads that require high amounts of I/O.

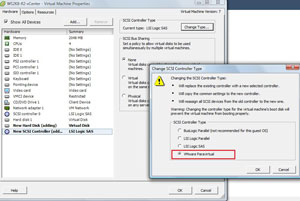

Configuring the PVSCSI adapter is slightly less than intuitive, primarily because it is not a default option when creating a virtual machine. This is for good reason, as the adapter is not supported as a boot volume within a virtual machine. PVSCSI is supported on Red Hat Linux 5, Windows Server 2008 and Windows Server 2003 for use with non-boot virtual disk files.

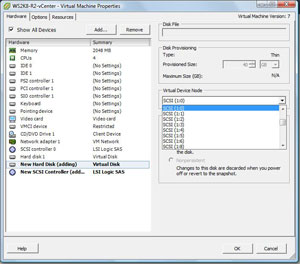

To configure the adapter, first review VMware KB 1010398 and make sure it is going to work for your configuration. The first step is to add another virtual disk file. During this step, you'll be asked which virtual device node to use. For non-boot volumes on most virtual machines, select a position at SCSI 1:0 or higher, which places the disk outside the first controller (see Fig. 1).

Figure 1. The virtual device node outside of the first controller is key to using a PVSCSI adapter.

Selecting the higher value will cause the subsequent controller to be added to the virtual machine's inventory. Once the hard drive and additional controller have been added, the controller can be changed to the PVSCSI type (see Fig. 2).

Figure 2. Changing the type on the controller will enable the PVSCSI high-performance adapter.

At that point the guest is ready to use the PVSCSI adapter for the virtual drives that were added. I've been using the PVSCSI adapter and can say that it makes a measurable impact in performance on the guest virtual machine, so I will be using it in applicable workloads. The supported operating systems are listed above, but it is important to note that the PVSCSI adapter is not supported for use with a virtual machine configured for the Fault Tolerant (FT) availability feature until vSphere Update 1.

That is cool, but tell me something I don't know about vSphere features!

As a side note to the current vSphere features, it is also a good time to talk about future vSphere features. I had the honor once again to be a guest on the Virtumania podcast, where we received some insight into future vSphere features. In episode 4, special guest Chad Sakac of EMC explained some upcoming vSphere features, among them offloading certain I/O-intensive functions, like deploying a virtual machine, to storage controllers. If you have ever spoken with Chad, you know that the information he gives takes some time to absorb and set in. I recommend you check out episode 4 now.

Posted by Rick Vanover on 03/25/2010 at 12:47 PM (3 comments)

Last week, Microsoft announced a number of new virtualization technologies targeted at the desktop space. The additions are primarily focused on virtualized desktops, VDI and application virtualization. Somewhat of a footnote to all of this is that a new addition, dynamic memory, will apply to all Hyper-V virtualization, not just the desktop technologies.

Dynamic memory was featured at one point in the Hyper-V R2 product, but has since been rescinded as of the current offering. Its whereabouts has been a hot topic on VMware employee Eric Gray's vcritical blog and made rounds in the blogosphere.

The dynamic memory feature will apply to all Hyper-V workloads. It will work with a basic principle of a starting and a maximum allocation. The simple practice is to make the starting allocation the base requirement for the OS in question -- 1 GB for Windows 7, for example -- which is counter to what VMware's virtualization memory management technologies provide.

I had someone explain dynamic memory's benefits to me and the simplified version is that dynamic memory is an extension of the hot-add feature to allow more memory to be assigned to a virtual machine. Cool, right? Well, maybe for some and only if we think about it really hard. Consider the guest operating systems that support hot-add of memory. Doing some quick checking around, I end up with Windows Server 2008 (most versions), Windows 7 and Windows Server 2003 (Datacenter and Enterprise only).

The main thing that the enhanced dynamic memory will address is the lack of hot remove, which is a good thing. Basically, it is easy to do a hot-add, but what if you want to step it down after the need has passed? This is where the R2 features will kick in and reclaim the memory. I don't want to call dynamic memory a balloon driver, but it will automagically mark large blocks of memory as unavailable, which in turn will allow that memory to be reclaimed by the host.

One fundamental truth to take away is that Hyper-V will never allocate more than the physical memory amount. No disk swapping will take place in lieu of direct memory allocation.

Given that we don't have public betas yet, a great deal of detail still needs to be hammered out. Make no mistake: Microsoft's Hyper-V virtualization takes an important step in the right direction with the dynamic memory feature. It is not feature-for-feature on par with VMware's memory management technologies, but this increased feature set has sparked my interest.

Stay tuned for more on this. I and others will surely have more to say about dynamic memory.

Posted by Rick Vanover on 03/23/2010 at 12:47 PM (1 comment)

I have mentioned before that in my home lab, I use a DroboPro device that functions as an iSCSI target. It's a great, cost-friendly piece of shared storage that can be used with VMware systems. Recently, my DroboPro device failed, entering a series of reboots of the controller. I'll spare you the support experience, but the end result is that the company sent me a new chassis.

The Drobo series of devices supports a transplant of the drive set to another controller. I followed the documented procedure, but ESXi was less than satisfied with my quick-change artistry. This is because the signature is different on the target. In the case of this storage controller, the new DroboPro controller presents a different iSCSI target path, even though it is configured the same. As covered in last month's "Which LUN is Which?" post, looking at the details of the full iSCSI name will show the difference. This means that when I pull in the specific volume (which was formatted as a single VMFS datastore), ESXi is smart enough to know that VMFS is already formatted on this volume.

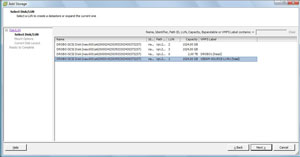

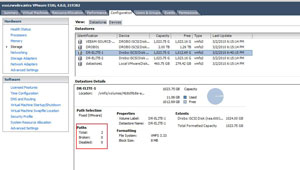

In my specific situation, I have two VMFS volumes and two Windows NTFS volumes formatted on this storage controller, and ESXi recognized the VMFS volumes as such (see Fig. 1).

Figure 1. Detecting the presence of the VMFS datastore is a critical step of reintroducing storage to the host.

We then have the option of keeping the existing VMFS volume signature, assigning a new signature or reformatting the volume. The text on the message box is less than 100 percent clear; chances are, people end up on this screen after some form of unplanned event (see Fig. 2).

Figure 2. The top option is the most seamless way to import the VMFS volume, whereas assigning a new signature requires intervention on the guest virtual machine.

Lastly, you will be presented with the volume layout (see Fig. 3).

Figure 3. This will summarize the volume layout before it is reimported into the host's storage inventory.

The final step is a host-based event called "Resolve VMFS volume" and the VMFS volume will reappear on the host. Virtual machines on that volume will have their connectivity reestablished to the datastore and be ready to go.

This is a scary process -- don't underestimate this fact. I did have a backup of what I needed on the volumes, but I was still more nervous than anything in this process. Hopefully if you have this situation you can review this material, check with your storage vendor's support and utilize VMware support resources if they are available to you.

Posted by Rick Vanover on 03/17/2010 at 4:59 PM (0 comments)

Last week, I had the honor of presenting at the TechMentor Conference in Orlando. If you aren't familiar with TechMentor, you should be. Simply speaking, it is a track-driven series of training sessions brought to you by real-world experts on topics that everyone can use. Presenters this year included Greg Shields, Don Jones, Mark Minasi, Rhonda Layfield, and others.

In the virtualization track, we had a series of deep dives and technical sessions. The coverage very adequately represented both Hyper-V and VMware, which matters because it is tough to find good Hyper-V training from people who are actually using the technology.

I was pushing the VMware side heavily, so the natural conclusion might be that this sounds like VMworld. TechMentor couldn't be farther from it. TechMentor is much more intimate and personal than VMworld. Sure, VMworld has scores more attendees. But have you ever tried to stop and catch a quick conversation with someone at VMworld after a session? This isn't an issue at TechMentor, where the speakers are accessible.

This was my second year presenting, and one of the more popular sessions was my advanced conversion topic. I've maintained a running configuration of things I have learned over the years in performing physical-to-virtual (P2V) as well as virtual-to-virtual (V2V) conversions. The Visio diagram in Fig. 1 shows what can be a wonderful springboard for you to customize in your organization to collect lessons learned, avoid pitfalls with conversions and implement your own procedures, such as change controls and support contacts.

Figure 1. This P2V flowchart allows you to go about your conversions with a procedural approach; note that this is one page; download the whole flowchart here.

I also presented three other sessions: data center savings with virtualization, virtualization disaster recovery, and virtualization-specific backups. It was a great time in Orlando, in spite of the rain, and I hope to be back next year delivering more good virtualization sessions.

Posted by Rick Vanover on 03/16/2010 at 12:47 PM (9 comments)

Last week, I had the honor of being one of the guests on the inaugural Virtumania podcast. On the show we had Rich Brambley, Sean Clark (the guy with the hat) and Mark Farley. Our topic was the VirtualBox hypervisor and what Oracle has in store with that and virtualization in general. Rich and I are avid users of VirtualBox in the Type 2 hypervisor space, and it was a good place to discuss how we use VirtualBox and where it fits in the marketplace. Here is a quick rundown of the features that have rolled into the product recently, as of version 3.1.4:

- Teleportation: This is a big one, as VirtualBox is the only Type 2 hypervisor that provides a live migration feature.

- Paravirtualized network driver: VirtualBox can give a guest virtual machine a network interface that does not emulate physical network hardware, for Linux (2.6.25+) and select Windows editions.

- Arbitrary snapshots: This allows a virtual machine to be restored to any snapshot downstream, as well as new snapshots taken from other snapshots (called "branched snapshots" in VirtualBox).

- 2D hardware video acceleration: VirtualBox has always been somewhat ahead of the curve in multimedia support, and version 3.1 introduces support for Windows VMs to use hardware acceleration. The 3.0 release already added OpenGL 2.0 and Direct 3D support.

I still use VirtualBox extensively for my desktop hypervisor, but as far as what Oracle has in store for the end product, we don't yet know. We know that some form of product consolidation plan with Oracle VM is in the works, but what specifically will be the end result is anyone's guess.

What we know for sure is that VirtualBox still is a good Type 2 hypervisor, and Oracle and company want to do more with virtualization. The polish and footprint in enterprises that Oracle and Sun have together are attractive. Consider further that the combined companies can provide servers, storage, hypervisors and operating systems. How much of that will end up in enterprise IT remains to be seen.

Posted by Rick Vanover on 03/11/2010 at 12:47 PM (0 comments)

Last week I outlined how I go about provisioning my own lab for testing virtualization and supporting blogging efforts. One of the key points of my ability to have a functional lab is a shared storage device. I use the DroboPro iSCSI storage device. Since I purchased the unit, the Elite version has been released. While the DroboPro and DroboElite are candidates for home or test labs, Data Robotics (makers of the Drobo series) are positioning themselves well for the SMB virtualization market.

I had a chance to evaluate the DroboElite unit, and it has definitely added some refining points that are optimized for VMware virtualization installations. The DroboElite is certified on the VMware vSphere compatibility list for up to four hosts. The new features for the DroboElite over the DroboPro are:

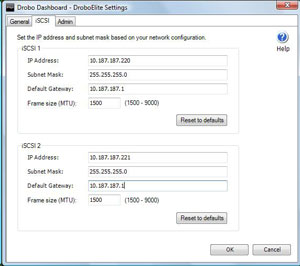

Second iSCSI network interface -- The cornerstone feature is its ability to have two Ethernet connections for iSCSI traffic to a VMware ESX/ESXi host (see Fig. 1).

Figure 1. You can provide two network interfaces from the DroboElite to be placed on two different switches for redundancy, right from the dashboard.

This also allows the multipath driver for ESX and ESXi to show the second path to the disk. Whether or not you put it on multiple switches, depending on your connectivity on the host and on the physical network, you can design a much better connectivity arrangement compared to the DroboPro device, which only had one network interface. Fig. 2 shows the second path made available to the datastore:

Figure 2. The second network interface allows DroboElite to present two paths to the ESXi host.

While the second network interface was a great touch, the DroboElite still has only one power supply. This is my main concern for using it as primary storage for a workplace virtualization installation. What complicates it further is that the single power supply is not removable or a standard part.

Increased Performance

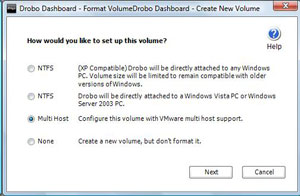

Simply speaking in terms of user experience, the DroboElite is faster than the DroboPro. I asked Data Robotics for specific information on the controller, but they offered none other than that the DroboElite has 50 percent more memory and a 50 percent faster processor. Beyond simply having more resources on the controller, there are also some code-level changes with the DroboElite. This is most visibly seen in the volume creation task, where a volume is determined to be a VMware multi-host volume (see Fig. 3).

Figure 3. A volume is created with the specific option of being a VMware datastore.

Like any storage system, if it gets busy, you feel it. The DroboElite is no exception. At one point, I installed two operating systems concurrently and the other virtual machines on the same datastore were affected. The DroboPro has produced this behavior as well, but it wasn't as pronounced on the DroboElite.

First Impression

Configuring the DroboElite is a very simple task. I think I had it up and running within 20 minutes, which included the time to remove the controller and five drives from packaging. For certain small environments, the DroboElite will be a great fit at the right price. List prices at the DroboStore show a DroboElite unit with a total of eight drives at 2 TB each, making 12.4 TB of storage, at $5,899 USD. Comment here.

Posted by Rick Vanover on 03/09/2010 at 12:47 PM (1 comment)

There was quite a buzz in the blogosphere about what people do for their virtualization test environments. The theme of the VMware Communities Podcast #79, white boxes and home labs, spurred quite a discussion, as many bloggers and people learning about virtualization go about this differently. Surprisingly, many utilize physical systems to test and learn about virtualization.

Many of the bloggers took time to outline the steps they take and detail their private labs. I wrote about my personal virtualization lab at my personal blog site, outlining the layout of where I do most of my personal development work.

I make it a priority to separate my professional virtualization practice from my blogging activities. In my case, the lab is basic in that the host server, a ProLiant ML 350, is capable of running all of the major hypervisors. Further, I make extended use of the nested ESX (or vESX) functionality.

For a lab to be successful as a learning vehicle, some form of shared storage should be used to deliver the best configuration for advanced features. In my lab, I use a DroboPro as an iSCSI SAN for my ESXi server and all subsequent vESX servers. I can also use the iSCSI functionality to connect Windows servers to the DroboPro for storage using the built-in iSCSI Initiator. I purchased the DroboPro unit at a crossroads of product lifecycles, but the DroboElite was released in late 2009 and offers increased performance as well as dual Ethernet interfaces for iSCSI connectivity.

While my home lab is sufficient to test effectively all elements of VMware virtualization, there are some really over the top home labs out there. Jason Boche's home lab takes the cake as far as I can tell. Check out this post outlining the unboxing of all the gear as it arrived and his end-state configuration of some truly superior equipment.

How do you go about the home lab for virtualization testing? Do you use it as a training tool? Share your comments here.

Posted by Rick Vanover on 03/04/2010 at 12:47 PM (6 comments)

I am not sure when the typical server consolidation with virtualization officially became known as an on-premise private cloud, but it is. We will see more products start to blur the lines between on-premise infrastructure and the major cloud providers such as Amazon Web Services, Microsoft Azure and others.

One category that many internal infrastructure teams may want to consider sending to the cloud is the lab management segment. Lab management is a difficult beast. For organizations with a large number of developers, the demand for test systems can be immense. Putting development (lab-class) virtual servers, even if temporarily, in the primary server infrastructure may not be the best use of resources.

That is the approach that VMLogix has with the Lab Manager Cloud Edition product. Simply speaking, the lab management technology for which VMLogix has become very popular in the virtualization landscape is extended to the cloud. A lot of history with VMLogix technology is manifested in current provisioning offerings with Citrix Xen technologies as well as a broad set of offerings for VMware environments. The Lab Manager Cloud Edition allows administrators to create a robust portal in the Amazon EC2 and S3 clouds to give developers server resources on demand without allocating the expense of internal storage and server resources.

The primary issue when moving anything to the cloud is security. For a lab management function, I believe security can be addressed with a few practice points. The first is to use sample data in the cloud if a database is involved. You could make a case for intellectual property with the developer's code and configuration that may be used and their transfers between the cloud and your private network. This can be somewhat mitigated by the network traffic rules that can be set for an Amazon Machine Image (AMI).

VMLogix is working on integrating with the upcoming Amazon Virtual Private Cloud for Lab Management to bridge a big gap. In most private address spaces, this will allow your IP address space to be extended to the cloud over a site-to-site VPN. This can also include Windows domain membership, which would allow centralized management of encryption, firewall policies and other security configuration.

Just like transitioning from development to production in on-premise clouds, application teams need to be good at transporting code and configuration between the two environments. Whether this is in the datacenter or in the cloud, this requirement doesn't change.

The VMLogix approach is to build on Amazon's clearly defined allocation and cost model for resources to allow organizations to provision their lab management functions in the cloud. This covers everything from who can make a virtual machine to how long the AMI will be valid. Lab Manager Cloud Edition also allows AMIs to be provisioned with a series of installable packages, such as an Apache web engine, the .NET Framework and almost anything else for which you can support an automated installation or configuration. Fig. 1 is a snapshot of the Lab Manager Cloud Edition portal:

Figure 1. The Lab Manager Cloud Edition allows administrators to deploy virtual servers in the Amazon cloud with a robust provisioning scheme.

Does cloud-based lab management seem appropriate at this time? Do we need something like Amazon VPC in place first? Share your comments here and let me know if you want to see this area explored more.

Posted by Rick Vanover on 03/01/2010 at 12:47 PM (6 comments)

Thin-provisioning, a great feature for virtual machines, uses the disk space required instead of what is allocated. I frequently refer to the Windows pie graph that displays used and free space as a good way to explain what thin-provisioned virtual disk files “cost” on disk. All major hypervisors support it, and there is somewhat of a debate as to whether or not the storage system or virtualization engine should manage thin-provisioning.
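The used-versus-allocated idea can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the disk sizes and the `provisioning_summary` helper are hypothetical, and the sketch ignores file system overhead, snapshots and swap files.

```python
def provisioning_summary(disks):
    """Summarize on-disk cost for a list of (allocated_gb, used_gb) tuples,
    one tuple per virtual disk."""
    allocated = sum(alloc for alloc, _ in disks)
    used = sum(used_gb for _, used_gb in disks)
    return {
        "thick_gb": allocated,          # space consumed if fully allocated up front
        "thin_gb": used,                # space consumed if thin-provisioned
        "saved_gb": allocated - used,   # difference between the two
    }


# Hypothetical virtual disks: (allocated GB, used GB inside the guest).
disks = [(40, 12), (100, 35), (60, 60)]
print(provisioning_summary(disks))
```

In this made-up example, 200 GB of allocated virtual disk costs 107 GB on the datastore when thin-provisioned. The third disk is already full, so it saves nothing.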

On the other hand, many storage systems in use for virtualization environments support a thin-provisioned volume. This effectively aggregates the same benefit across many virtual machines. In the case of a volume that is presented to a physical server directly, thin-provisioned disks can be used in that way as well. Most mainstream storage products with decent management now support thin-provisioning of virtual machine storage types, primarily VMware's vStorage VMFS and Windows NTFS file systems. NTFS is probably marginally more supported simply due to Windows having broader support. A thin-provisioned volume on the storage system effectively is VMFS- or NTFS-aware for what is going on inside. This means that it knows if a .VMDK file is using its full allocation.

The prevailing thought is to have the storage system perform thin-provisioning for virtualization volumes. There is a non-quantified amount of overhead that may be associated with a write operation that would extend the file. You can hedge against this by using the 8 MB block size on your VMFS volumes, due to its built-in efficiencies, or by allocating a higher performance tier of disk. When the storage system manages thin-provisioning, you're relying on the disk controllers to manage that dynamic growth with direct access to write cache. What probably makes the least sense is to thin-provision in both environments. The disk savings would be minimal, yet overhead would be increased.

How do you approach thin-provisioning? Share your comments below.

Posted by Rick Vanover on 01/27/2010 at 12:47 PM (7 comments)