IBM this week opened a new Cloud Resiliency Center to help enterprises mitigate business interruptions and recover from disasters.

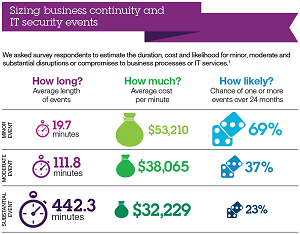

The company said the state-of-the-art facility in Research Triangle Park, N.C., provides cloud-based business continuity services to protect against potentially costly business disruptions. Such disruptions can be quite costly, according to recent research. IBM pointed to a Ponemon Institute study it commissioned that pegged the cost of a long-lasting outage at $32,000 per minute on average. Beyond that, in these days of instant social media commentary on any major corporate problem or outage, long-lasting disruptions can harm a company's reputation and impact future revenue.

To prevent that, "IBM's new resiliency center integrates cloud and traditional disaster recovery capabilities with innovative physical security features," the company said in a statement. "With cloud resiliency services, the recovery time of 24 to 48 hours that was once deemed the industry standard has shrunk dramatically to a matter of minutes. Open 24 hours a day, seven days a week, the resiliency center team will monitor developing disaster events and then mobilize as needed to ensure that the infrastructure for all customers is configured to handle the latest threats to keep data, applications, people and transactions secure."

The impact of business disruptions. (source: IBM)
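To put the recovery-time claim in rough dollar terms, here is a minimal sketch that multiplies the Ponemon per-minute average by a few recovery windows. Applying that average to every minute of a 24- or 48-hour recovery is an assumption made only for illustration, as is the 15-minute scenario; neither IBM nor Ponemon presented the numbers this way.

```python
# Illustrative only: applies the cited per-minute average to different
# recovery times. Real outage costs vary widely from company to company.
COST_PER_MINUTE_USD = 32_000  # average from the IBM-commissioned Ponemon study


def outage_cost(recovery_minutes: float,
                cost_per_minute: float = COST_PER_MINUTE_USD) -> float:
    """Return the estimated cost of an outage lasting recovery_minutes."""
    if recovery_minutes < 0:
        raise ValueError("recovery_minutes must be non-negative")
    return recovery_minutes * cost_per_minute


# The old 24- and 48-hour windows vs. an assumed 15-minute cloud recovery.
scenarios = {
    "24 hours": 24 * 60,
    "48 hours": 48 * 60,
    "15 minutes (assumed)": 15,
}

for label, minutes in scenarios.items():
    print(f"{label:>22}: ${outage_cost(minutes):,.0f}")
```

Under those assumptions, the gap between a day-long recovery and one measured in minutes is two orders of magnitude, which is the point IBM's statement is making.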

The cloud-hosted center seems fitting, as cloud computing comes with all kinds of security implications. For example, other recent research indicates cloud computing itself can increase the risk of costly security problems, while yet other research found the No. 1 expected benefit of enterprises moving to the cloud was "disaster avoidance/recovery and business continuity."

In fact, IBM said, the business continuity and disaster recovery market is expected to grow dramatically, totaling almost $32 billion by 2015.

IBM hopes to capitalize on that opportunity by improving business resiliency services such as automated backup of data and applications; duplication of IT infrastructures; expedited recovery of data, applications and servers; and easier storage, management and retrieval of data.

The new North Carolina center joins IBM's stable of 150 such centers in 50 countries. The company plans to open two more resiliency centers this year in Mumbai, India, and Izmir, Turkey.

One customer that helped IBM break in the new North Carolina center is Monitise, which handles mobile money payments and transfers. The company uses the new center and one in Boulder, Colo., to expand its mobile commerce ecosystem.

"Banking, paying and buying on mobile is becoming an increasingly integral and recognizable part of daily life -- so for us as a Mobile Money provider, delivering a quality, always-on service is essential," said Monitise exec Adam Banks in a statement. "As we expand globally, this partnership with IBM allows us to provide a consistent, reliable customer service while having in place a proven cloud resiliency plan that ensures us that no matter the issue, our real-time service capabilities will not be impacted."

Of course, IBM isn't alone in chasing the growing market for cloud-based business continuity and disaster recovery services. Other industry heavyweights such as HP, VMware and Microsoft offer similar services, along with numerous smaller companies.

According to Microsoft-sponsored research conducted by Forrester Consulting, "Forty-four percent of enterprises either are extending disaster recovery to the public cloud or plan to do so. And 94 percent of enterprises that are doing disaster recovery to the cloud say it helps to lower costs and improve service-level agreements."

Posted by David Ramel on 09/24/2014 at 1:37 PM

Red Hat Inc. CEO Jim Whitehurst says the IT industry is undergoing a momentous shift from the client-server model to cloud-mobile, and the vendor of enterprise Linux and other open source software is pushing to come out the other end on top.

In the blog post, "Only a Matter of Time, Cloud Winners Will Be Chosen Soon," Whitehurst yesterday outlined recent moves and successes of the company while letting everyone know that enterprise cloud vendor supremacy is the next target.

"Right now, we're in the midst of a major shift from client-server to cloud-mobile," Whitehurst said. "It's a once-every-20-years kind of change. As history has shown us, in the early days of those changes, winners emerge that set the standards for that era -- think Wintel in the client-server arena. We're staring at a huge opportunity -- the chance to become the leader in enterprise cloud, much like we are the leader in enterprise open source."

The Red Hat leader's post contained no news, but seemed to be a rally-the-troops message signaling a change in corporate strategy.

"We want to be the undisputed leader in enterprise cloud, and that's why Red Hat is going to continue to push," Whitehurst said. "We're going to continue to grow our capabilities in OpenStack, OpenShift and CloudForms. We're going to continue to push our advances in storage and middleware and offer those to customers and our partner ecosystem."

Well, Jim, good luck in that. One thing you certainly nailed is the admission that "The competition is fierce."

Microsoft, Amazon Web Services Inc., IBM and countless other traditional industry heavyweights -- along with challenging upstarts and startups -- might have something to say on the matter.

Take Oracle Corp., for example, which might have signaled a change in corporate strategy itself this week with the news that industry icon Larry Ellison was stepping down as CEO, a position that will be staffed by two people henceforth: Safra Catz and Mark Hurd.

Oracle's Ellison Is "On the [PaaS] Attack."


Yesterday, The New York Times previewed the upcoming Oracle OpenWorld conference in the article, "Hurd: Oracle Takes On Microsoft in the Cloud."

"Not to give too much away, but Mark Hurd, the newly minted co-chief executive of Oracle Corp., is ready for a battle," wrote Quentin Hardy in the Times on the same day as Whitehurst's missive. "It starts this Sunday night, and it features his boss, Lawrence J. Ellison, throwing down against Microsoft for control of corporate cloud computing applications."

Hurd was quoted as saying the conference's opening keynote address will be about the company's "unique opportunity to be the leader of the next generation of cloud." Hurd noted that Ellison was going to announce the company's new Platform-as-a-Service (PaaS) offering, stating, "We want to be on the attack."

There's a lot at stake, as the Times noted:

Platforms in the computer business are, of course, the holy grail, since before Oracle and Microsoft began competing decades ago. A company with a platform, the idea goes, is at the essential center of new software and business creation -- developers get rich, customers get loyal, the platform maker grows.

While Oracle and the feisty Ellison might be on the attack, Red Hat hasn't exactly been hunkering down in the PaaS trenches. Whitehurst mentioned the recent acquisition of FeedHenry that expanded "our already broad portfolio of application development, integration and PaaS solutions."

Whitehurst also noted the following recent moves, which might be seen as setting the company up for its big push to be the enterprise cloud leader:

- An agreement with Cisco Systems Inc. for a new integrated infrastructure offering for OpenStack-based cloud deployments.

- A collaboration with Nokia to bring the Red Hat Enterprise Linux OpenStack Platform to the Nokia telco cloud.

- A pact with Google Inc. to work on the Kubernetes project to manage Docker containers at scale.

- The acquisition of eNovance, a cloud computing services company.

- The acquisition of Inktank, "which offers scale-out, open source storage systems based on Ceph, a top storage distribution for OpenStack."

- Recent launches of Red Hat Satellite 6, Red Hat Enterprise Linux OpenStack Platform 5 and Red Hat Enterprise Linux 7.

In the face of fierce competition, Whitehurst noted that "companies will have several choices for their cloud needs. But the prize is the chance to establish open source as the default choice of this next era, and to position Red Hat as the provider of choice for enterprises' entire cloud infrastructure."

So it should be interesting to watch how things shake out, though there might not ever be a clear winner in enterprise cloud computing. We might end up with some kind of oligarchical triumvirate, like in the old SQL Server/Oracle/DB2 relational database days.

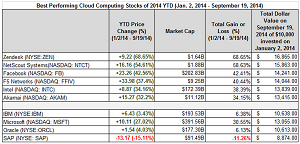

However, you can always get a clearer idea of where things stand in life by "following the money," of course, and Forbes this week kindly provided this capsule summation of the best-performing cloud computing stocks so far this year:

The Forbes list of best-performing cloud computing stocks YTD 2014.

(source: Forbes.)

Posted by David Ramel on 09/23/2014 at 1:17 PM

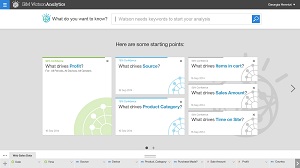

Watson is back. The "Jeopardy!" champion has graduated from winning T.V. game show competitions to fighting cancer and other important pursuits, and IBM says it will soon be able to answer your Big Data queries in the cloud.

IBM yesterday announced Watson Analytics will provide cognitive artificial intelligence to the company's cloud service users, who can perform Big Data analytics with natural language queries.

Initially, it will be a "freemium" service running on desktops and mobile devices, bringing Big Data analytics to the masses without the hassle of messing around with the complicated Apache Hadoop ecosystem or needing arcane data science skills.

IBM's Alistair Rennie said in a video that companies want to use analytics and better-informed decisions to change and improve every part of their businesses. However, he said, "the current generation of tools that they have get locked up in IT or in the domain of data scientists. And we just want to break that wide open and change the game by allowing every user in every business to have incredibly powerful analytics at their fingertips."

What do you want to know? (source: IBM)

IBM said it obviated the need for specialized skills with three main innovations: a single business analytics experience; guided predictive analytics; and natural language dialogue.

The single experience is in contrast to other solutions that involve different analytics tools for different kinds of jobs. Watson will provide a seamless, unified way to use enterprise-grade, self-service data analytics in the cloud.

The guided predictive analytics finds hidden key facts relevant to your queries and discovers patterns and relationships that otherwise wouldn't be found. These in turn lead to new queries and fine-tuning to get to specific insights.

The natural language dialogue enables Siri- or Cortana-like questions, though you won't be able to speak to Watson (yet). You will, however, be able to ask questions such as "What are the key drivers of my product sales?", "Which benefits drive employee retention the most?" and "Which deals are most likely to close?"

After some beta testing (sign up here) of capabilities that will be made available in the next month, the service will be offered in a variety of free and paid packages in November.

"Watson Analytics offers a full range of self-service analytics, including access to easy-to-use data refinement and data warehousing services that make it easier for business users to acquire and prepare data -- beyond simple spreadsheets -- for analysis and visualization that can be acted upon and interacted with," IBM said in a statement.

The service will be hosted on IBM's SoftLayer cloud computing infrastructure provider and made available through the company's cloud marketplace, with future plans to make it available through Bluemix -- an implementation of the company's Open Cloud Architecture -- letting developers and independent software vendors use its functionality in applications they build.

Though IBM characterized yesterday's news as "its biggest announcement in a decade as the leader in analytics," it's not the company's first foray into cloud-based Big Data analytics. In June it announced IBM Concert software -- also available in the cloud marketplace -- targeting four of today's hottest transformative technologies: cloud, Big Data, social and mobility.

Now, Rennie said, the company is "blending the cognitive experience with the predictive experience" for enhanced analytical capabilities.

Watson, following on the heels of IBM's Deep Blue chess-playing supercomputer, gained national recognition in 2011 by beating top human champions on the T.V. quiz show, "Jeopardy!," winning a $1 million prize that was donated to charity. The company first announced Watson Analytics in January as one of three new applications available in the Watson Group, into which the company said it was sinking a $1 billion investment.

Posted by David Ramel on 09/17/2014 at 9:32 AM

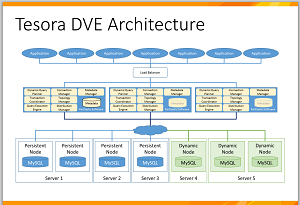

Tesora today announced its Database as a Service (DBaaS) offering for the OpenStack cloud platform.

Tesora DBaaS Platform Enterprise Edition is the first enterprise-ready commercial product stemming from the open source OpenStack Trove project, the company claimed. The mission of that project is "to provide scalable and reliable cloud DBaaS provisioning functionality for both relational and non-relational database engines, and to continue to improve its fully featured and extensible open source framework."

The Trove project was included with the April "Icehouse" release of OpenStack.

Tesora, which has been leading the Trove project's open source development efforts lately, is building on top of that project with its enterprise offering, adding support and professional services along with integrated technology enhancements.

The company's enterprise edition promises easier setup via installers and configured scripts for major OpenStack distributions; better database tuning performance through pre-configured and optimized guest images; database backups -- either full or incremental; replication for production applications; and thorough testing and certification across many popular databases such as MySQL, MongoDB, Redis, CouchDB, and Cassandra.

The core component. (source: Tesora.)

Those features help organizations address the thorny problem of cloud-based databases, Tesora said. Implementing database services over the cloud is harder than other services, according to the company, because databases demand specialized management skills and extensive knowledge of the features and functions unique to each application. Also, many resource-intensive databases don't work well in the cloud, particularly when high availability and reliability are paramount concerns.

"Self-service, cloud-based databases have proved harder to achieve than simply providing compute for a number of reasons," Tesora said in a white paper. "Databases, by their nature, manage data, making simple strategies used for stateless cloud services inapplicable. Scaling out stateful services complicates recovery and availability. Managing data privacy introduces additional complexity, and database servers don’t generally work well in virtualized environments."

The Trove project has been addressing these problems for several years, beginning at Rackspace. Tesora has since taken a leadership role in the project. The company was founded as ParElastic in 2010 by Ken Rugg and Amrith Kumar, who envisioned a pay-for-what-you-use utility database service that freed companies from the limitations imposed by using a single database.

Last fall, the company chose the open and pluggable OpenStack platform as its partner technology and joined the OpenStack foundation. Early this year, the company relaunched as Tesora ("treasure" in Italian, in fitting with the whole "Trove" theme). In June, the company open sourced its database virtualization technology as the basis of a free community edition of its software, with plans to enhance an enterprise edition with a Web-based UI, around-the-clock support and the other aforementioned enhancements.

"With the Tesora Community Edition we made Trove easier for users, and now with our Enterprise Edition we're making it more robust," Kumar said in a statement today. "We believe that this will pave the way for broader adoption of Database as a Service using OpenStack Trove by making it simpler to get up and running with new enterprise features and a higher level of support. We're removing another roadblock to the usage of OpenStack Trove."

Posted by David Ramel on 09/16/2014 at 2:18 PM

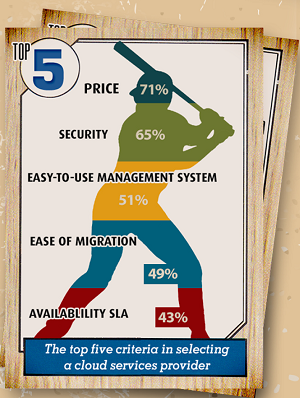

In a recent survey, half of the companies using cloud computing said they have benefitted by avoiding disasters and keeping the business running.

"Disaster avoidance/recovery and business continuity, as cited by 73 percent of all respondents, was the number one expected benefit of moving to the cloud," the survey report by Evolve IP said. "Of those with services in the cloud, five in 10 respondents indicated that they had already experienced that benefit. Improved flexibility (65.5 percent) and scalability (65 percent) followed suit."

Evolve IP, a communications and cloud services company, polled 1,275 IT pros and executives involved in cloud approval or implementation, focusing on Infrastructure as a Service (IaaS) and concentrating on IT and executive beliefs; service adoption; cloud implementation; expectations and barriers; and budgets.

Most of the survey results were predictable, such as: the cloud is wicked popular. Nearly nine out of 10 respondents regard the future of IT as being in the cloud, and 81 percent said they've deployed at least one cloud service. Extrapolated to an average, respondent organizations have deployed 2.7 services to the cloud.

Evolve IP's baseball-themed illustration of the top reasons companies move to the cloud.

(source: Evolve IP)

The survey also asked if respondents were "cloud believers." To that question, 70 percent of higher-level executives answered in the affirmative, while 58 percent of their lower-level manager counterparts agreed, which was five percentage points higher than a similar survey conducted last year.

"The upward trend of faith in the cloud among IT managers is notable since it's the IT managers who are often required to manage the deployment of cloud services, demonstrate the tangible benefits of cloud migrations, and select the vendors that will assist with deployments," Evolve IP said.

Of those who have actually moved to the cloud, the overwhelming virtualization hypervisor of choice was VMware ESX, favored by more than 82 percent of respondents. Citrix Xen came in second at 26 percent and KVM was third at 6 percent. However, when it came to deploying apps, Citrix scored somewhat better. It was used by 28 percent of companies deploying JD Edwards applications, compared to 33 percent on VMware. DB2 applications were also more competitive, with Citrix clocking in at 36 percent and VMware at 44 percent.

On the problematic side of things, there was less worry than last year about concerns and barriers regarding cloud implementations, though the primary concerns were the usual big three: security, legal/compliance and privacy.

Other major changes from last year's survey were reported by Evolve IP thusly:

- Survey respondents are slowly gaining more knowledge about the cloud.

- Overall, concerns about moving to the cloud have decreased.

- IT managers are coming around to the cloud, but are not yet as enthusiastic as their directors and executives.

- The number of services in the cloud continues to grow.

- Adoption of Microsoft products in the cloud is increasing.

- Servers/datacenters, desktops and phone systems all saw greater plans for adoption compared to 2013.

"This year's survey reinforces last year's data with a few major changes," said Evolve IP exec Guy Fardone. "We continue to see across the board drops in barriers to moving to the cloud and more support from IT managers as they've become more aligned with business executives. Also, as we have seen in our business, companies looking to move to the cloud on their own are experiencing some hiccups along the way. As a result, almost one in four organizations will use a third-party provider."

Evolve IP is just such a third-party provider and, unsurprisingly, was singled out by Fardone as a good candidate for that job.

Posted by David Ramel on 09/10/2014 at 1:43 PM

You have to give Cisco Systems Inc. credit: It's certainly not sitting idly by while its traditional proprietary networking model comes under siege by upstart software-defined networking (SDN) and virtualization technologies, sophisticated cloud implementations and the open networking movement.

Coming off a tough earnings call last month with investors and the announcement of a massive layoff, the company this week made all kinds of news in conjunction with its partner, Red Hat, a leader in the open source arena.

The Wall Street Journal and other media outlets have reported how the networking giant is under pressure from customers and analysts who want to see the company emphasize "lower prices, interoperable gear and faster innovation with new SDN networking gear."

Bloomberg, meanwhile, reported "Goldman Sachs Group Inc., Verizon Communications Inc. and Coca-Cola Enterprises Inc. are telling the world's largest networking-gear maker that they won't keep paying for expensive equipment, when software can squeeze out more performance and make the machines more versatile," in an article titled, "Cisco CEO Pressured by Goldman Sachs to Embrace Software."

So yesterday, in what could be seen as a direct response to the aforementioned criticisms, Cisco and Red Hat announced an escalation of their partnership in three main areas: the open source OpenStack cloud platform; Cisco's Application Centric Infrastructure (ACI) -- its answer to SDN; and the "Intercloud," described by Cisco as "a network of clouds."

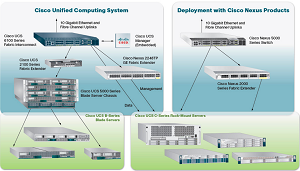

"Cisco UCS Integrated Infrastructure for Red Hat Enterprise Linux OpenStack Platform (UCSO) will combine Cisco Unified Computing System (UCS), Cisco ACI and Red Hat Enterprise Linux OpenStack Platform," the companies said in one of many announcements yesterday.

The Cisco Unified Computing System

(source: Cisco Systems Inc.)

UCS is a 5-year-old datacenter platform that seeks to integrate hardware, virtualization support, switching fabric and management functionality into one cohesive platform, simplifying management, according to Wikipedia.

Cisco said its UCS Integrated Infrastructure solution combines UCS servers with Nexus switches, UCS Director management software and storage access as key components for building private, public and hybrid clouds.

On the OpenStack aspect of the expanded partnership, the companies said they "are working together to provide fully supported, certified platforms that deliver open source innovation and optimized functionality. The companies intend to collaborate to deliver validated architectures, a growing third-party ISV and IHV ecosystem, governance across clouds, and advanced services for lifecycle management of cloud solutions."

In the ACI part of the initiative, the companies said they "are extending the Cisco ACI policy framework to OpenStack environments, enabling customers to leverage greater policy-based automation in their cloud environments."

For the Intercloud, "Cisco and Red Hat intend to develop carrier-grade, service provider solutions for the Cisco Evolved Services Platform -- Cisco's software and virtualization platform for hybrid cloud deployments. Red Hat and Cisco also collaborate around OpenShift, Red Hat's award-winning Platform as a Service (PaaS) offering, and storage technologies, including Ceph."

The new UCSO collaboration between Cisco and Red Hat is expected to come in three variations: Starter Edition, Advanced Edition and Advanced ACI Edition.

The Starter Edition, expected by year's end, is designed for the simple and quick installation of private clouds. The Advanced Edition is for fast deployment and management of large private clouds. The Advanced ACI Edition will use the Cisco ACI technology to deploy and operate policy-driven infrastructure for large-scale clouds.

"Open source technology has emerged as the catalyst for cloud innovation," said Red Hat exec Paul Cormier. "Cisco is a market leader in cloud infrastructure and integrated infrastructure, while Red Hat brings market leadership and deep domain expertise of open source and OpenStack. By combining our complementary strengths, Cisco and Red Hat have a unique opportunity to capitalize on this market disruption."

Posted by David Ramel on 09/05/2014 at 1:27 PM

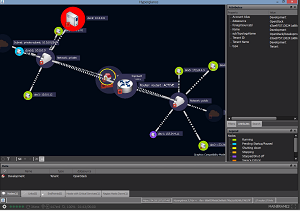

Real Status Ltd. today announced a software tool aimed at managing sprawling modern datacenters that encompass public and private clouds and Software-Defined Networking (SDN) implementations.

Now in general availability, Hyperglance version 3.3 seeks to be the one management platform that ties together the disparate systems of today's datacenters, using APIs to hook into cloud services such as Amazon Web Services (AWS) and OpenStack, along with SDN controller platforms including OpenDaylight and the HP Virtual Application Networks (VAN) SDN Controller.

It can also connect to the Nagios management system to integrate map alerts and other service data. A Hyperglance Open API JSON Web service provides a RESTful way to work with other data sources.

"The Internet of Things, hybrid cloud and SDN provide great agility, but also create complex physical and virtual relationships across an IT estate that are often difficult to fully understand," the company said. "Without such an understanding, communications can break down and systems might fail. Most traditional management systems look at static lists of data, charts, and very limited visualization of resource relationships, particularly across different platforms such as public and private cloud. It can be difficult to pinpoint exactly where errors exist and especially how errors, or potential error resolution, will affect other resources."

By simplifying systems management and aggregating and synchronizing data for visualization, navigation, and analysis, Real Status said enterprises can reduce the risk entailed with putting new cloud or SDN platforms into production, and also improve quality of service and increase efficiency.

The Hyperglance topology live demo in action. (source: Real Status Ltd.)

The tool presents a 360-degree topology view that users can rotate and zoom in and out of, making point-and-click selections and issuing commands to effect changes to single resources or collections. It provides existing filters or lets users create their own, and allows for interactive data visualizations.

"As part of the HP SDN Ecosystem, Hyperglance offers customers comprehensive graphical visibility and user-driven controls of network infrastructure powered by the HP VAN SDN Controller," said Michael Zhu, an exec at partner company HP. "Customers can now take advantage of HP SDN technology to instantly configure switches and optimize data paths in real time, with the confidence of knowing how such actions will affect complex virtual relationships."

Hyperglance is available as an Open Virtual Appliance that can be imported into VMware, VirtualBox, OpenStack and AWS, or through the AWS marketplace. A free version for managing up to 50 nodes is available for download. At the high end, a maximum of 100,000 nodes can be managed for an annual $200,000 license. Eight other levels below that are available. For up to 1 million nodes, the company will provide a price quote upon request. A live demo, running a Hyperglance instance inside the browser, is available to see how it works.

Posted by David Ramel on 09/03/2014 at 11:35 AM

Movie special effects artists will be able to call upon the vast resources of the Google Cloud Platform for their time-consuming rendering processes now that the Internet search giant has acquired Zync Inc.

Rendering is the frame-by-frame process of taking all the digital information of a video production, including audio and special effects like animation, texture, shading, simulated 3D shapes and so on, and converting it into a final video file format.

Anyone who has dabbled in home movies with software like Adobe Premiere, Adobe After Effects or Sony Vegas knows that the rendering process -- especially for complicated special effects -- is a high-resource, time-consuming chore, sometimes taking hours for a 20-minute project. With full-length cinematic movies, the resources and time required for rendering are staggering.

For example, the Wikipedia article about the 2009 movie Avatar stated: "To render Avatar, Weta used a 10,000-square-foot server farm making use of 4,000 Hewlett-Packard servers with 35,000 processor cores with 104 TB of RAM and 3 PB of network-area storage." That huge supercomputer-class system could take hours to render just one single frame.

With the incorporation of Zync Render technology into its cloud, Google will bring world-class computing power and capacity to the task.

"Creating amazing special effects requires a skilled team of visual artists and designers, backed by a highly powerful infrastructure to render scenes," said Google exec Belwadi Srikanth in a blog post announcing the acquisition yesterday. "Many studios, however, don't have the resources or desire to create an in-house rendering farm, or they need to burst past their existing capacity.

"Together Zync + Cloud Platform will offer studios the rendering performance and capacity they need, while helping them manage costs," Srikanth continued. "For example, with per-minute billing, studios aren't trapped into paying for unused capacity when their rendering needs don't fit in perfect hour increments." Specific pricing information wasn't given.

Zync Render technology is specifically targeted for the cloud. "By provisioning storage and keeping a copy of your rendered files and assets on the cloud, file I/O is efficiently managed to ensure you are only using the data that is necessary to render images," the five-year-old company says on its Web site. But until now, that cloud was Amazon Web Services (AWS). Zync has for more than a year been listed on the AWS Marketplace, with pricing starting at $1.69 per hour.
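To see why per-minute billing matters for render jobs that don't land on neat hour boundaries, here is a minimal sketch comparing the two billing models. It borrows the $1.69 hourly figure from Zync's AWS Marketplace listing purely as an illustrative rate (Google gave no pricing), and it ignores any minimum charge a provider might apply. The function names are hypothetical.

```python
import math

# Illustrative rate only: this is Zync's listed AWS Marketplace starting
# price, not a Google Cloud Platform price (none was announced).
HOURLY_RATE_USD = 1.69


def hourly_billed_cost(minutes_used: int,
                       hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Cost when every started hour is billed in full."""
    return math.ceil(minutes_used / 60) * hourly_rate


def per_minute_billed_cost(minutes_used: int,
                           hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Cost when usage is billed by the minute at the same hourly rate."""
    return minutes_used * hourly_rate / 60


# Hypothetical job lengths, in minutes of machine time.
for minutes in (45, 61, 130):
    hourly = hourly_billed_cost(minutes)
    per_minute = per_minute_billed_cost(minutes)
    print(f"{minutes:>3} min: hourly ${hourly:.2f}, per-minute ${per_minute:.2f}")
```

The 61-minute job is the telling case: billed by the hour it costs two full hours, while billed by the minute it costs barely more than one. Multiply that across the many machines in a render burst and the "unused capacity" Srikanth mentions adds up.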

Zync provides Web-based management tools and Python access APIs for supported software applications like Maya, Nuke, Mental Ray and others. It was used on movies like Star Trek Into Darkness and Transformers: Dark of the Moon.

"Since its inception at visual effects studio ZERO VFX, nearly five years ago, Zync was designed to not only leverage the benefits and flexibilities inherent in cloud computing but to offer this in a user-friendly package," the company said on its site. "Pairing this history with the scale and reliability of Google Cloud Platform will help us offer an even better service to our customers -- including more scalability, more host packages and better pricing."

Google said it will provide more information about its new initiative in the coming months.

Posted by David Ramel on 08/27/2014 at 8:32 AM

When you're high in the sky, you have a long way to fall, and the trip down can be spectacular.

Take airplanes, for example. Study after study has concluded that airline travel is statistically safer than driving your car, but when crashes happen, they get everyone's attention.

So it is with cloud computing. This week's Microsoft Azure outage just blew up the tech newswires, and no doubt it was a serious issue. Heck, these days, I get frustrated when someone doesn't return an IM or text within a couple minutes. I can't imagine the angst of those running mission-critical business applications in the cloud seeing their systems go south for hours.

Yet those statistics remain. According to an IDC study last fall:

The cloud solution also proved to be more reliable, experiencing 76 percent fewer incidents of unplanned outages. When outages occurred, the response time of the cloud solution was half that of the in-house team, further reducing the amount of time that IT users of the services supported by the information governance solutions were denied access. Overall, the combination of fewer incidents and faster response times reduced downtime by over 13 hours per user per year at a cost of $222 per user, a savings of 95 percent.

A more recent report by Nucleus Research about cloud leader Amazon Web Services (AWS) concluded:

Although cloud services provider outages are often highly publicized, private datacenter outages are not. Our data shows customers can gain significant benefits in availability and reliability simply by moving to a cloud services provider such as AWS.

And it seems to me reliability has improved with the great cloud migration. Years ago my work used to be interrupted so often that the phrase "the system is down" became a cliché. I would hear the same thing all the time at some doctor's office or store: "Sorry, we can't do that right now -- the system is down." It's probably no coincidence that the rock band System of a Down was formed in 1994.

So you have to deal with it. You can bet there's hair on fire at Microsoft these days, and there will be plenty of incentive to diagnose the recent problems and fix them and improve the company's cloud service reliability.

And then something else will happen and it will go down again.

No matter how many failover, "always on" or immediate disaster recovery systems are in place, there will be outages. So you just have to help mitigate the risks.

Of course, the cloud providers want to help you with this. As Microsoft itself states about its Windows Azure Web Sites (WAWS), you should "design the architecture to be resilient for failures." It provides tips such as:

- Design a risk-mitigation strategy before moving to the cloud to mitigate unexpected outages.

- Replicate your database across multiple datacenters and set up automated data sync across these databases to mitigate during a failover.

- Have an automated backup-and-restore strategy for your content by building your own tools with Windows Azure SDK or using third-party services such as Cloud Cellar.

- Create a staged environment and simulate failure scenarios by stopping your sites to evaluate how your Web site performs under failure.

- Set up redundant copies of your Web site on at least two datacenters and load balance incoming traffic between these datacenters.

- Set up automatic failover capabilities when a service goes down in a datacenter using a global traffic manager.

- Set up content delivery network (CDN) service along with your Web site to boost performance by caching content and provide a high availability of your Web site.

- Remove dependency of any tightly coupled components/services you use with your WAWS, if possible.
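The redundancy and failover tips above can be illustrated with a small sketch. This is not Microsoft's traffic manager or any Azure API; `Datacenter` and `TrafficManager` are hypothetical names, and health is a flag set by hand rather than the result of a real health probe.

```python
from dataclasses import dataclass
from itertools import count

@dataclass
class Datacenter:
    name: str
    healthy: bool = True  # in practice this would come from a health check

class TrafficManager:
    """Round-robins requests across healthy datacenters, skipping failed ones."""

    def __init__(self, datacenters):
        self.datacenters = list(datacenters)
        self._counter = count()

    def route(self) -> str:
        healthy = [dc for dc in self.datacenters if dc.healthy]
        if not healthy:
            raise RuntimeError("no healthy datacenter available")
        return healthy[next(self._counter) % len(healthy)].name

east, west = Datacenter("east"), Datacenter("west")
manager = TrafficManager([east, west])
print(manager.route(), manager.route())  # traffic alternates between both sites

east.healthy = False                      # simulate an outage in one datacenter
print(manager.route())                    # all traffic now fails over to the other
```

Flipping the `healthy` flag in a staging environment is also a cheap way to rehearse the "simulate failure scenarios" tip before a real outage forces the issue.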

But all this has been said before, many times, and some people disagree, saying it's time to hold the cloud providers more accountable. Take, for example, Andi Mann, who last year penned the piece, "Time To Stop Forgiving Cloud Providers for Repeated Failures." The headline pretty much says it all. Mann goes into detail about the issue and writes:

We cannot keep giving cloud providers a pass for downtime, slowdowns, identity thefts, data loss, and other failures.

It is time for all of us to stop excusing cloud providers for their repeated failures. It is time we all instead start holding them accountable to their promises, and more importantly, accountable to our expectations.

There has even been an academic research paper published concluding "that clouds be made accountable to their customers."

I personally believe that anything made by humans is going to fail, and all we can do is try to prepare for this inevitability and pick up the pieces and keep going when it's over. Some agree with me, such as David S. Linthicum, who wrote an article on the GigaOM site titled, "Are We Getting Too Outage-Sensitive?" Mann, who was partially responding to that article, obviously disagrees.

And, indeed, it's been a tough week for Microsoft. Fresh on the heels of the company reporting cloud market share gains, Visual Studio Online experienced some serious problems, and this week's security update was a disaster.

So what's your take? Are Microsoft and other cloud providers doing enough? Should cloud users take more responsibility? Comment here or drop me a line.

Posted by David Ramel on 08/20/2014 at 11:23 AM

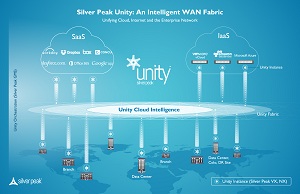

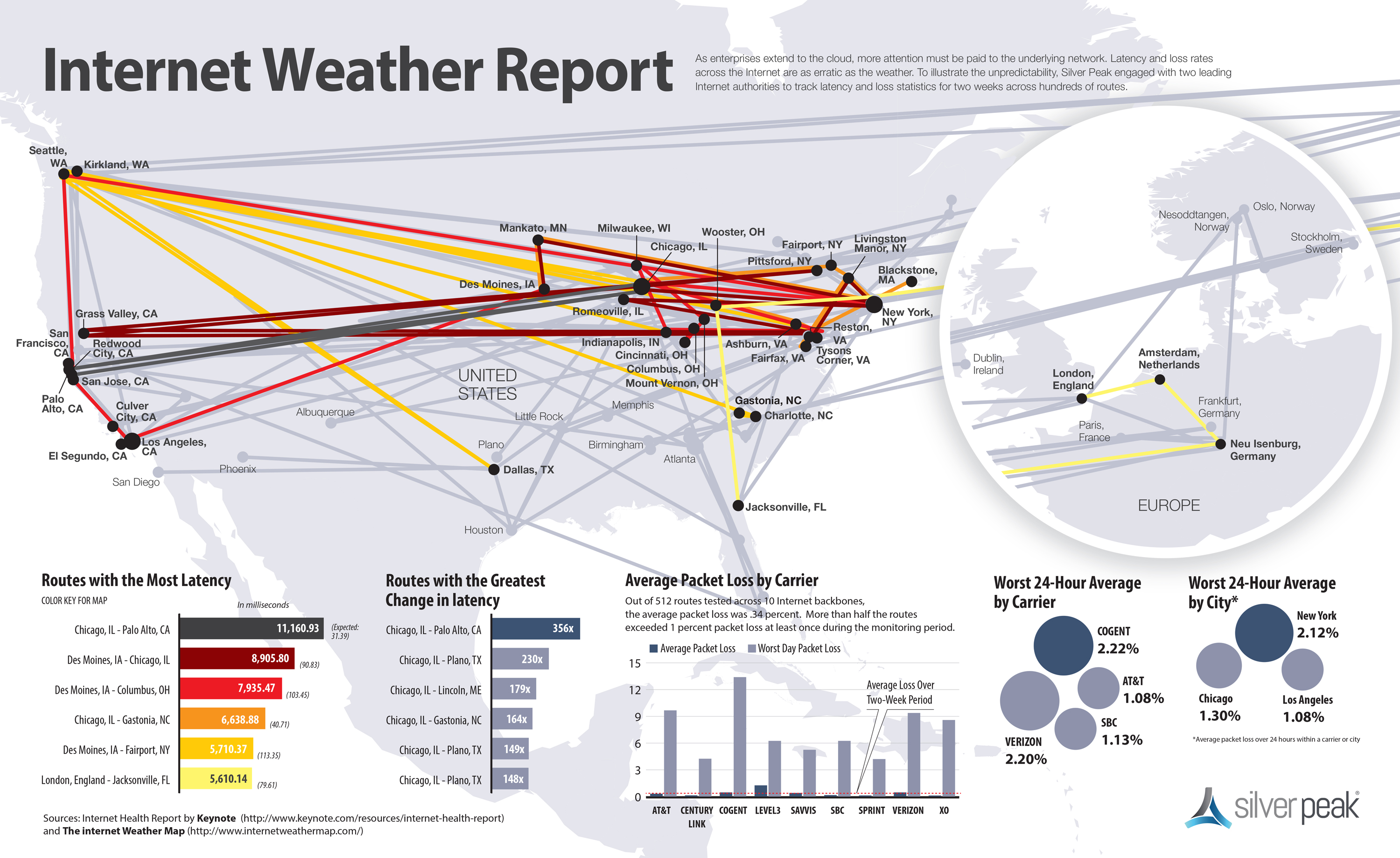

Silver Peak Inc. today announced a WAN fabric aimed at unifying enterprise networks with public clouds and optimizing Software-as-a-Service (SaaS) traffic by constantly monitoring service performance and orchestrating traffic according to the current "Internet weather."

Called Unity, the "intelligent" fabric provides a complete map of the cloud-connected network and uses new routing technology so enterprises can manage and optimize SaaS connectivity and bypass heavy weather -- or congested paths.

The Unity fabric is a network overlay generated by company software running in data centers, remote offices and cloud interconnection hubs along with the company's Cloud Intelligence Service. The fabric smooths out connection performance for any combination of services, SaaS applications or Infrastructure-as-a-Service (IaaS) resources.

Unity instances use data collected by the cloud intelligence -- including the physical locations from which data is being served -- to track metrics such as data loss and network latency, which are then shared with other instances so optimal paths can be selected for any user to any SaaS connection. Another piece of company software, the Global Management System, orchestrates the optimization.
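The idea of choosing a path from shared loss and latency measurements can be sketched roughly as follows. This is not Silver Peak's algorithm, which the company did not detail; the scoring formula, the `loss_weight` value and the sample measurements are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PathMetrics:
    name: str
    latency_ms: float  # measured round-trip latency
    loss_pct: float    # measured packet loss, in percent

def path_score(path: PathMetrics, loss_weight: float = 50.0) -> float:
    """Lower is better: latency plus a penalty for each percent of loss."""
    return path.latency_ms + loss_weight * path.loss_pct

def best_path(paths):
    """Pick the path with the lowest combined score."""
    return min(paths, key=path_score)

# Hypothetical measurements shared between instances for one SaaS destination.
candidates = [
    PathMetrics("direct-internet", latency_ms=80.0, loss_pct=2.0),
    PathMetrics("via-hub-a", latency_ms=95.0, loss_pct=0.1),
    PathMetrics("via-hub-b", latency_ms=120.0, loss_pct=0.0),
]
print(best_path(candidates).name)
```

With these numbers the direct route has the lowest latency but loses out because of its packet loss, which is the "heavy weather" a fabric like this is meant to route around.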

The Unity system. (source: Silver Peak Inc.)

In addition to the advanced WAN routing and cloud intelligence, the Unity fabric also features accelerated encryption; data reduction achieved through WAN compression and deduplication; path conditioning that reconstitutes dropped packets and re-sequences those that might take multiple paths; and traffic shaping that prioritizes classes of traffic, giving the least attention to personal or recreational use, for example.

"SaaS has taken business productivity to new heights, but it has also dramatically changed the dynamics of IT networking," said company exec Damon Ennis. "The weather on the Internet can be congested one minute and tolerable the next, making the performance of cloud services unpredictable. Even worse, your IT staff has no way to monitor traffic to the cloud once it leaves the WAN. Silver Peak's Unity fabric gives them capabilities they've never had before. It turns the Internet into your own private, high-performance network and brings SaaS under the control of IT."

Unity supports leading IaaS providers such as Amazon Web Services (AWS), VMware vCloud and Microsoft Azure, along with more than 30 individual SaaS applications such as Microsoft Office 365, Dropbox, Salesforce.com and Adobe Creative Cloud. Eventually, the company said, "every" SaaS app will be supported.

How's the weather? (source: Silver Peak Inc.)

One vCloud user, Nevro Corp., professed enthusiasm for the new solution. "The rate at which our employees use cloud services has spread like wildfire," said Nevro exec Jeff Wilson. "With users in different parts of the world, I was not only finding it difficult to maintain consistent performance for my users, but I've been constantly surprised by new cloud applications popping up on users' screens. Silver Peak has already been an instrumental partner in helping us accelerate data mobility for our VMware vCloud environment, and I'm excited to see Silver Peak Unity extend that expertise to give me the ability to control the performance and management of our core cloud-based services. Now we can punch a hole through to the systems that drive our business."

Silver Peak said Unity will help address those new cloud apps popping up on users' screens, exemplifying the problem of "shadow IT" in which staffers might use their own devices to access cloud services or unofficial cloud service providers without organizational knowledge or control. The company quoted a McAfee-sponsored study in which 81 percent of line-of-business workers and 83 percent of IT staff admitted to using non-approved SaaS apps.

Subscriptions to the Cloud Intelligence Service are $5,000 per enterprise, per year with unlimited SaaS application support, the company said, while Silver Peak software instances start at $551 per year. "To optimize their networks for SaaS, new customers must purchase a minimum of two Silver Peak software instances and a subscription to Unity Cloud Intelligence," the company said. "Existing Silver Peak customers simply need to upgrade their Silver Peak software to release 7 and subscribe to Unity Cloud Intelligence. Customers can expand their networks by adding Silver Peak instances in cloud hubs or IaaS providers."

Posted by David Ramel on 08/13/2014 at 5:24 PM

Small businesses and entrepreneurs are moving to the cloud along with the big boys, enabled to compete by new technologies that democratize IT, according to a new report commissioned by Intuit Inc.

While some 37 percent of U.S. small businesses have so far adapted to cloud computing, that number is expected to grow to more than 80 percent in the next six years, according to "Small Business Success in the Cloud," prepared by Emergent Research.

"Whether you're a tech start-up in Silicon Valley or a mom-and-pop shop on Main Street, cloud technology presents radically new opportunities, and potentially disruptive changes," said Intuit exec Terry Hicks in a statement accompanying the report. "This report is all about developing a deep understanding of how small business can stay ahead of the curve."

The report sponsored by Intuit -- which provides cloud services to small businesses -- reveals how small businesses progressively adapt to the new technology, at first looking for increased efficiency and then moving on to using new business models. The report divides the users into four "faces of the new economy" or "personas": plug-in players; hives; head-to-headers; and portfolioists.

How the cloud democratizes IT. (source: Intuit and Emergent Research)

Plug-in players are described as small businesses that just plug in to cloud services for business operations such as finance, marketing and human resources rather than managing the nuts and bolts themselves.

Hives are cloud-adapted businesses whose employees join together virtually from different locations, with staffing levels being adjusted to meet project needs.

Head-to-headers consist of an expanding number of small businesses who will compete head-to-head with large companies by utilizing the growing number of available platforms and plug-in services not previously available.

Portfolioists are described as freelancers who create portfolios from multiple income streams. "These largely will be people who start with a passion, or specific skill, and are motivated primarily by the desire to live and work according to their values, passions, and convictions," according to the companies' statement. "They will increasingly build personal empires in the cloud, finding previously unseen opportunities for revenue generation."

Together, the companies said, the above personas illustrate the new opportunities the cloud presents for entrepreneurs and the "human side" of cloud computing's ability to make it cheaper and easier to start and grow a business.

"Today, the U.S. and global economy is going through a series of shifts and changes that are reshaping the economic landscape," said Steve King of Emergent Research. "In this new landscape, many people are using the power of the cloud to re-imagine the idea of small business and create new, innovative models that work for their needs."

Posted by David Ramel on 08/13/2014 at 11:02 AM

I figured the government was on top of cloud security.

Awareness of the issue couldn't be much higher, what with all the news of data breaches and software vulnerabilities. Everyone is going to the cloud -- including Uncle Sam -- and security is always of paramount concern.

As a Ponemon Institute report revealed in a study about the "cloud multiplier effect," moving to the cloud brings increased security concerns.

But cloud adoption isn't a new phenomenon anymore, and with maturity of the technology comes decreased concern about security, according to the RightScale "2014 State of the Cloud Survey."

"Security remains the most-often cited challenge among Cloud Beginners (31 percent) but decreases to the fifth most cited (13 percent) among Cloud Focused organizations," the RightScale report said. "As organizations become more experienced in cloud security options and best practices, the less of a concern cloud security becomes."

Well, the government should be getting experienced. President Obama's "cloud first" initiative was announced in 2010.

Sure, the program had some rough spots. A year later, CIO.gov reported "each agency has individually gone through multiple steps that take anywhere from 6-18 months and countless man hours to properly assess and authorize the security of a system before it grants authority to move forward on a transition to the cloud."

To address that red tape, the Federal Risk and Authorization Management Program (FedRAMP) was enacted to provide "a standardized approach to security assessment, authorization, and continuous monitoring for cloud products and services."

That led to 18 cloud services providers receiving compliance status and a bunch more in the process of being accredited. So now we have government-tailored cloud services available or coming from major players such as Microsoft, Amazon Web Services Inc. (AWS) and Google Inc., along with a host of lesser-known providers.

So, like I said, I figured the government was all set.

Then I read about a report issued last month with the alarming title of "EPA Is Not Fully Aware of the Extent of Its Use of Cloud Computing Technologies."

Report on EPA Cloud Usage: "The EPA did not know when its offices were using cloud computing." (source: U.S. Environmental Protection Agency Office of Inspector General)

"Our audit work disclosed management oversight concerns regarding the EPA's use of cloud computing technologies," reported the U.S. Environmental Protection Agency's Office of Inspector General. "These concerns highlight the need for the EPA to strengthen its catalog of cloud vendors and processes to manage vendor relationships to ensure compliance with federal security requirements."

It then listed some bullet-point takeaways, including:

- The EPA didn't know when its offices were using cloud computing.

Which opened up a whole BYOD/shadow IT can of worms. Sure, the government addressed that issue. It addresses every issue. But if the EPA doesn't know when its offices are using cloud computing, how can the federal government know what each of its more than 2 million employees is doing with the cloud from their individual devices?

An article last year in Homeland Security Today found "Federal BYOD Policies Lagging."

"Tens of thousands of government employees show up for work every day with a personal mobile device that they regularly use for official work," the article said. "Yet few, if any, of those employees have knowledge of their agency's policy governing how and when personal mobile devices can be used, or what information they are allowed to access when using those devices."

And that's not to mention the shadow IT problem, as reported in an article by Government Computer News titled "Is Shadow IT Spinning out of Control in Government?"

An excerpt:

"People need to get their work done, and they'll do anything to get it done," said Oscar Fuster, director of federal sales at Acronis, a data protection company. "When tools that can help them appear in the marketplace, and in their own homes, they chafe when administrators do not let them use them. The result often is an unmanaged shadow infrastructure of products and services such as mobile devices and cloud-based file sharing that might be helpful for the worker, but effectively bypasses the enterprise's secure perimeter."

What's even more worrisome is that federal exposures can be deadly serious. Regular enterprise data breaches can cost a lot of money to organizations and individuals, but government vulnerabilities can get people killed. Think about covert agents' identities being revealed in Snowden-like exposés or Tuesday's revelation by Time that "New Post-Snowden Leaks Reveal Secret Details of U.S. Terrorist Watch List."

"The published documents describe government efforts using the Terrorist Identities Datamart Environment (TIDE), a database used by federal, state and local law-enforcement agencies to identify and track known or suspected terrorist suspects," the article states.

And how about more organized threats? Yesterday, just a day after the Time article, The Washington Post published this: "DHS contractor suffers major computer breach, officials say." The victimized company said the intrusion "has all the markings of a state-sponsored attack." That means we're being targeted by foreign countries that want to do us harm. And it's been going on for a while.

VentureBeat last year reported that "Chinese government hackers are coming for your cloud," and last month The Guardian reported via AP that "Chinese hackers 'broke into U.S. federal personnel agency's databases.'"

And those are just a few of the attacks that the public knows about. If Russian hackers can steal more than 1 billion Internet passwords, as reported Tuesday by The New York Times, who knows what Russian government-backed hackers have been doing?

Scarier still, judging by the aforementioned three attacks revealed in the past three days alone, the pace of attacks seems to be picking up -- just as government cloud adoption is picking up. "Federal Cloud Spending Blows Past Predictions," Forbes reported last month. "Today, U.S. federal government cloud spending is on the rise as government agencies beat their own predictions for fiscal 2014," the article said.

I know the feds are addressing the cloud security issue, but government resources will probably be trending down and government red tape can make things so complicated I fear for the effectiveness of security precautions.

AWS alone, in noting that its government cloud service "has been designed and managed in alignment with regulations, standards and best-practices," lists 12 such programs, with names like "SOC 1/SSAE 16/ISAE 3402 (formerly SAS70)" and "FIPS 140-2."

If the government can bungle a much-publicized Web site rollout of this administration's signature health care program, how can all the individual entities moving to the cloud correctly implement their systems, even if the cloud service is properly vetted?

Yes, the allure of cost savings, efficiency and innovation provided by cloud solutions is great, but at what risk?

Posted by David Ramel on 08/07/2014 at 10:26 AM