We live in interesting times when it comes to technology. That much is certain. Interesting times because so much change is being introduced to the traditional way we did computing. Virtualization revolutionized the way we do computing, and changed it forever and for better.

The most important thing that virtualization introduces is the abstraction layer. Some of you may not have thought about this profoundly, but there are several layers of abstraction, some we have already achieved and some we hope to achieve in the future. The different abstraction layers are the fundamental building blocks of what we call "cloud computing."

So what are the different layers of abstraction? Here, I have listed some:

Abstraction of hardware from software: This type of abstraction is delivered by what is commonly known as the hypervisor, which serves as an intermediary layer between the hardware and the software installed directly on top of it. This type of abstraction layer is available for servers and desktops.

Abstraction of applications from operating systems: This type of abstraction is more commonly known as application virtualization. It occurs anytime you are able to run an application on an operating system without actually having to install it on that operating system or have it modify that operating system in any way -- it is simply layered on top of it.

Abstraction of user workspace: This is more commonly known as user workspace virtualization. In earlier days it was known as profile management. The technology and software vendors have advanced so much that it would not be fair to just label them profile managers, since the technology does so much more. With user workspace virtualization, you abstract the user settings, files and preferences from their reliance on the local operating system by virtualizing them and making them available to the user regardless of device or access method. The user consistently gets the same experience.

Abstraction of network: This is commonly known as virtual networking, which allows virtual machines to connect to virtual networks. VMs are completely abstracted from the physical network while still integrating seamlessly with it.

I/O abstraction: One of my favorite topics in the IT world today is the abstraction of I/O, more commonly known as I/O virtualization or converged networking. The idea behind I/O virtualization is to standardize the transport mechanism and eliminate moving parts.

For example, if you use an InfiniBand card or a 10GE card, you can transport regular Ethernet traffic, 10GE, Fibre Channel traffic and, with some technologies, even FCoE traffic. Furthermore, depending on the technology you deploy, you can take this further by carving out virtual cards that share the same transport. If you place an InfiniBand card in your server that has a 20Gb connection back to the top-of-rack switch, you can carve that 20Gb of bandwidth into 3x1Gb Ethernet, 1x10GE, etc., without changing the cabling or the card in the server. When new technology comes out tomorrow, it can easily be added to the top-of-rack switch and transported over the same cable. Yes, it's future-proof.
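The carving arithmetic in that example can be sketched in a few lines of Python. The `carve` helper below is hypothetical and not part of any vendor tool; it only checks that the requested virtual cards fit within the physical link, using the 20 Gb link and the 3x1 Gb plus 1x10 Gb split mentioned above.

```python
def carve(link_gbps, virtual_cards):
    """Return the bandwidth (Gb/s) left over after carving virtual cards.

    virtual_cards is a list of (count, gbps_each) tuples. This is a
    hypothetical helper for illustration; real I/O virtualization
    products ship their own management tools.
    """
    used = sum(count * gbps for count, gbps in virtual_cards)
    if used > link_gbps:
        raise ValueError(f"requested {used} Gb/s exceeds the {link_gbps} Gb/s link")
    return link_gbps - used


# The split from the text: three 1 Gb Ethernet cards and one 10GE card.
remaining = carve(20, [(3, 1), (1, 10)])
print(remaining)  # 7 (Gb/s still unallocated on the same cable)
```

The leftover 7 Gb/s is what would remain for whatever gets added at the top-of-rack switch later, without touching the cable or the card.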

Abstraction of storage: This type of abstraction is interesting, and depending on who you ask, you will get a different answer. Certainly, technologies are out there that can do storage virtualization in the form of abstracting different storage platforms behind a single array and managing them that way. But the abstraction I am looking for is one that eliminates the need for LUNs and for RAID. That is true storage virtualization, where things like VMware datastores are not reliant on an underlying LUN, for instance, and where a VM is not tied to a LUN. While this form of abstraction is not here yet, it is within reach and only a matter of time.

All these abstraction layers independently are great technologies. Collectively, they're the foundation for cloud computing. Some of these technologies have existed for a while, others are new and a few are yet to be developed, but one thing is for certain: We live in the age of abstraction.

What do you think? Have I missed an abstraction layer?

Posted by Elias Khnaser on 04/19/2011 at 12:49 PM

When I wrote about type-1 client hypervisors, I made it very clear that I am biased towards them. I reiterate that here, so as to avoid getting grilled in the comments section below.

Don't get me wrong. I have VMware Fusion on my laptop and use it on a daily basis, but using a type-2 client hypervisor enterprise-wide? No thanks!

The idea behind desktop virtualization is to make management of desktops (among other things) easier. When using a type-2 client hypervisor, you double, triple or quadruple the number of OSes that you have to support. So instead of a user calling and saying, "I am having an issue with my desktop," they will call and say, "I am having an issue with my desktop; oh, by the way, I am also having a weird connectivity issue with VM2 and VM3 needs patching." Do you really want to do that?

I have been at conferences where speakers have touted type-2 client hypervisors as perfect for BYOPC, because you offload the support of the underlying OS to the manufacturer. My reaction is always: "Really? Seriously, you just said that?" So if a user calls up with an issue, you will tell them to call the manufacturer? Well in my book, that does not fly in the corporate world. While the VM you provided them is working, the OS needed to launch that VM is having an issue. Therefore the user cannot get to the VM and is not productive. How long do you think it will be before an executive calls in and asks what kind of a messed up support model you have?

To add insult to injury, Microsoft developed MED-V, so now you have a way of centrally managing all the VMs running on your desktops. Instead of addressing the issue, let's quadruple the number of OSes and add another management console to manage them all. Now you are tasked with keeping all your VMs patched and their AV software updated. Oh, and you get a new management tool that will end up being part of SCCM at some point. No way!

Now aside from my obvious dislike for type-2 in the enterprise, let's see... there's also:

- Reliance on parent OS rendering the VM at the mercy of that OS. If that instance of the OS does not work properly, your VM won't run.

- Performance is not on par, since the VM is sharing resources with the underlying operating system.

- Security is also tied to a certain extent to the parent OS.

- Management and support of a new instance of an OS.

So, am I a fan of XP Mode in Windows 7? Absolutely not. Well, not for the masses at least. The usual excuse is that some applications don't work on 64-bit Windows 7, so it is handy to serve up an application seamlessly into Windows 7 while it runs in XP. My answer to all that is: Instead of localizing the application to each user, deploy it using Terminal Server and XenApp. They have offered seamless applications for ages, and if you need apps that work on XP, you can publish those. They might also work on Windows Server 2003, which is easier, smarter and better to manage.

In my book, type-2 client hypervisors are utterly useless in an enterprise deployment. Still, I would love to get your perspective on things and maybe, just maybe, I really am missing something here. I'd love to learn. Thoughts?

Posted by Elias Khnaser on 04/14/2011 at 12:49 PM

Recently, one of my customers was trying to decide whether to use a type-1 or a type-2 client hypervisor.

For those of you who are not familiar with the technology, type-1 is a bare-metal install. You install a thin software layer (the hypervisor) that then allows you to create virtual machines. It's very similar to VMware ESXi, Citrix XenServer and Microsoft Hyper-V, except that instead of installing on server-class hardware, you install it on laptops or desktops. Type-2, on the other hand, is installed on top of an operating system. Examples include VMware Workstation, Fusion, Microsoft Virtual PC and Parallels.

Before I examine the pros and cons of each, I have to disclose that I am a bit biased towards type-1 client hypervisors. That being said, here's why:

Thin layer of software that abstracts the hardware. This is huge: In any environment you typically have several images that you use to deploy to different hardware profiles. These images are needed because of driver incompatibilities, chipsets, etc. With a type-1 hypervisor abstracting the hardware, you can deploy 1 VM to all hardware profiles.

Restoring user machine is fast. Speed is my second favorite feature. If a user corrupts his OS or for whatever reason it is deemed necessary to rebuild the user’s machine or replace it, it traditionally would take days. With a type-1, it takes minutes: Copy over the VM, run your scripts to configure the apps and printers, and the user is back online.

The ability to offer multiple VMs, with differing permissions. I can provide one that's locked down with no admin rights whatsoever, and another one with full admin rights that they can use for their personal use.

The ability to initiate a kill pill. This one is also huge: I have heard endless times how users lose their laptops with confidential information, etc. Well, if you could remotely initiate a kill pill and wipe it out, that data remains safe.

Performance is excellent with this type of client hypervisor. You should expect performance similar to what you see in its server counterpart.

Of course, with every gem there are cons. With type-1, the biggest con is limited hardware support. In some cases it might not be ideal for graphics-intensive applications (although we have seen significant strides and progress here). What I like about this approach is that the hypervisor almost becomes like a BIOS. Sure, you still patch and update your BIOS from time to time, and you will most likely need to patch and update your type-1 client hypervisor from time to time as well. But you have just created another layer of security, which makes breaking into the VM a bit harder.

For 98 percent of enterprise users, type-1 client hypervisors are perfect, and by the time you are done deploying this to everyone, the issues and challenges facing the remaining 2 percent will be resolved as well. This approach is perfect for scenarios where companies want to adopt BYOPC or just to provide better overall endpoint management. My favorite companies for providing this solution are Citrix XenClient, Virtual Computer and mokaFive.

Next time, I'll discuss the pros and cons of type-2 client hypervisors. But for now, I am eager to hear your thoughts on my analysis of type 1.

[Editor's Note: Corrected name of mokaFive.]

Posted by Elias Khnaser on 04/12/2011 at 12:49 PM

When designing your vSphere 4.1 infrastructure, it is important to know how to tweak it properly in order to yield the best performance possible based on your physical infrastructure. What I mean by that is: knowing the limitations of your network will determine how many concurrent vMotions you can successfully run without impacting performance (as an example).

Now there are many articles that describe how to limit the number of concurrent vMotions on earlier versions of ESX, but I could not find one that discussed how to limit it on vSphere 4.1. So I decided it would be useful to share what I know.

vMotion and Storage vMotion operations each carry a cost that is charged against a per-host resource budget. For example, with vSphere 4.1 the cost of a vMotion operation is 1, while in earlier versions of vSphere like 4.0, that cost was 4 (as is the case with ESX 3.5 as well).

Consequently, the maximum number of concurrent vMotions per ESX 4.1 host is 8, while the old maximum for 4.0 is 4, and for ESX 3.5 it's also 4. Now, it is important to know that this maximum is shared between Storage vMotions and vMotions. The cost of a Storage vMotion in vSphere 4.1 is 4. Therefore, you can have 4 concurrent vMotions and 1 Storage vMotion on a single ESX host.

To control these values, log in to your vCenter server and go to Administration | vCenter Server Settings. Table 1 lists each value, its cost and its maximum as it pertains to vSphere 4.1.
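The budget arithmetic above can be checked with a short Python sketch. It is only an illustration: the function name is hypothetical, and the per-host budget of 8 is an assumption inferred from the stated maximum of 8 concurrent vMotions at a cost of 1 each.

```python
HOST_MAX_COST = 8  # assumed per-host budget, inferred from "8 concurrent vMotions at cost 1"

# vSphere 4.1 operation costs as given in the text
COSTS = {"vmotion": 1, "storage_vmotion": 4}


def fits(vmotions, storage_vmotions, budget=HOST_MAX_COST):
    """Return True if this mix of operations fits within one host's cost budget."""
    total = (vmotions * COSTS["vmotion"]
             + storage_vmotions * COSTS["storage_vmotion"])
    return total <= budget


print(fits(8, 0))  # True: 8 x 1 = 8
print(fits(4, 1))  # True: 4 x 1 + 1 x 4 = 8
print(fits(5, 1))  # False: 5 + 4 = 9 exceeds the budget
```

Raising a cost value lowers the number of operations that fit in the same budget, which is how these settings limit concurrency.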

Table 1. vSphere 4.1 settings for limiting vMotions.

| Value | Description | Default Cost | Maximum |
| --- | --- | --- | --- |
| vpxd.ResourceManager.costPerVmotionESX41 | Cost to perform vMotion | 1 | 8 |
| vpxd.ResourceManager.costPerSVmotionESX41 | Cost to perform Storage vMotion | 4 | 8 |

Those settings assume 10G Ethernet for vMotion. vSphere 4.1 adjusts this maximum based on the network interface it detects; for example, 10G Ethernet by default allows 8 concurrent vMotions, while 1G Ethernet defaults to 4 concurrent vMotions. If a network slower than 1G is detected, it defaults down to 2 concurrent vMotions.

Another way to modify these values is to edit the vpxd.cfg file located on the vCenter server.

Posted by Elias Khnaser on 04/05/2011 at 4:59 PM

I got lots of feedback on my last blog, "Desktop Virtualization vs. Physical Desktops: Which Saves More?" Paulk from SF asked: 1. Won't there be client OS licensing costs for the virtual desktops, or is that covered in the desktop virtualization software cost? 2. Does the $400/seat client operational/maintenance cost cover the operation and maintenance of the blade servers as well, or just the thin clients?

The answers are: Sure, there are client licenses, but since you have to pay for them whether the desktop is physical or virtual, it washes out, so there is no point in listing it. Microsoft has also significantly relaxed VDI licensing. As for the second question, the $400 covers just the endpoint.

As for Anonymous, who comments: 100K IOPS, really? 15 x 1000 = 15k

My answer is: 100K IOPS is overkill, but keep in mind that 15 IOPS per user applies when everything is running normally. With bootup storms it could go up to 100 IOPS per user. With AV, if it is not architected properly, it could also go up to 100 IOPS per user. So yes, 100K IOPS at the price I am suggesting is a catch :)
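To make the two figures concrete, here is a small Python sketch of the arithmetic for the 1,000-user scenario. The function name is hypothetical, and the per-user numbers are simply the ones quoted above.

```python
def aggregate_iops(users, iops_per_user):
    """Total IOPS the shared storage must sustain for a given per-user load."""
    return users * iops_per_user


USERS = 1000

steady_state = aggregate_iops(USERS, 15)   # everything running normally
storm = aggregate_iops(USERS, 100)         # bootup or poorly architected AV storm

print(steady_state)  # 15000
print(storm)         # 100000
```

The commenter's 15K figure is the steady-state case; the 100K figure is sized for the worst-case storm.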

Mike comments: So if I did the math correctly, you are going to put 60ish virtual desktops on each blade? It better be a pretty basic desktop you are rolling out to get that kind of density at a reasonable performance in my experience. The math starts to break down differently when you need a more robust desktop. So, this analysis could be correct for 1 use case, but not others.

Mike, 60 VMs is easily achievable, especially if the idea is to run most apps using TS. On a blade with dual-socket Nehalem or better, with quad or six cores, this density is not a lot. Intensive applications tend to be I/O intensive, and if the environment is architected properly, that can be overcome by offloading the I/O and making sure your VMs are properly configured. I have run Sony Vegas at a university with a density of 60 VMs. The bottleneck with these VMs is usually the I/O, not the processor, so if you have a SAN that is going to perform, aka 100K IOPS, then it should cover your I/O profile regardless. That being said, you can always add more servers; the CapEx spend will not change by much.

Adrian from Germany comments with a link to a Brian Madden post, which references a Microsoft study. To Adrian, I say: Microsoft is not necessarily very VDI friendly, even though in their defense that attitude is changing. It is a Microsoft study geared at satisfying Microsoft's interest at the time of writing; there are hundreds of papers from Gartner, IDC and others that prove otherwise.

Nonetheless, I clearly stated that there are no CapEx savings and I am still unclear as to why you guys are still chasing CapEx. At the very least, desktop virtualization and physical rollouts are tied when it comes to CapEx. On the other hand, why do it the traditional way if you can save so much in OpEx and do things differently?

James K. tells us: I've seen 55 Windows XP VMs run successfully on a dual core server way back in ESX 2.5.3. Those were fairly light use systems, but even back then we could get good performance--and that limit of 55 was brought on more by running out of disk than processor or RAM. So, 60 VMs per host is definitely doable on 4 or 6 core processors.

Elias, where are you getting the estimates of yearly operational maintenance for VMs and desktops from? Just wondering if there's an industry-wide average for those numbers from an independent source.

James, thanks for reinforcing the math, it is always a breath of fresh air when someone else has seen similar results. Regarding OpEx, there are numerous Gartner, IDC and Forrester papers that list these numbers, but in all honesty I was modest, the numbers are higher for physical desktop maintenance. Still, I wanted to be fair, so I increased the number for desktop virtualization OpEx and decreased that of physical desktops.

And finally, NBrady notes: There's a third option not addressed here, cloud hosted virtual desktops (as a service). Whitepaper TCO comparison of PCs, VDI and Cloud Hosted Desktops.

Absolutely, NBrady. Cloud based desktops are an option, albeit in my opinion still a bit premature. This will become more of a viable and realistic option when companies decide how they are going to embrace the cloud. Most companies like the idea of the cloud but are in flux as to how to approach it, what services to put in it, etc. Once that is identified, then yes I would agree with you. At this point there are challenges, especially with user data and intensive applications, and so on.

Another reader e-mailed me directly and brought up the fact that the cost of desktop virtualization was a little low and maybe I was pricing it based on educational pricing. That is actually true; my bad. Yet, even if we increase the price of desktop virtualization software by $100 per seat, that would increase the CapEx by $100,000, which is still tied with physical desktops.

Again, CapEx is the wrong area to focus on, as it represents less than 20 percent of the total desktop project. OpEx represents more than 80 percent, so why chase that 20 percent?

CapEx savings were huge in server virtualization, but desktop virtualization is very different. Servers have traditionally had a presence in datacenters and have had all the infrastructure needed. Desktops are decentralized with no presence in the datacenter, so building that presence will cost money.

Thanks for your comments, and keep them coming!

Posted by Elias Khnaser on 03/31/2011 at 12:49 PM

One of the biggest hurdles for desktop virtualization adoption is price. Through all my interactions with customers, I am always hearing: "I heard it was more expensive, I heard there are no cost savings," etc. So, let's compare a desktop virtualization rollout versus a traditional physical desktop rollout and see if it truly is more expensive.

That being said, keep in mind that from a CapEx standpoint, you will not see much savings. But you will see significant OpEx savings. Usually when I say this, customers will say, "My CFO does not care about OpEx; we can't quantify OpEx, we can't touch it." I say, have a little more faith. I will accept that argument and respond as follows: While it is not easy for every organization to quantify or justify OpEx, the next time your manager needs a project completed in a week and neither you nor your current employees have any cycles, the only option is to hire more help or use consultants.

The next time your CFO's laptop breaks down and it takes two days (being generous) to replace it and bring him back to productivity, the next time your CFO or CIO flames you for not providing adequate technical support or timely technical support to the user community which is generating money for the business, at that point you can reply to them by saying, "We have no cycles, we have been supporting our dispersed and remote user community for years using dated methods; we need a change." At that point, the OpEx will all of a sudden look very lucrative.

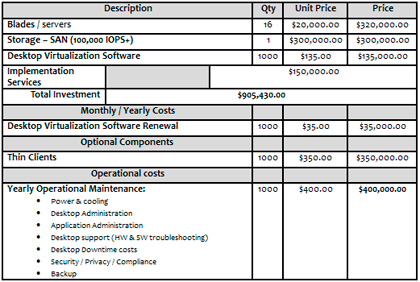

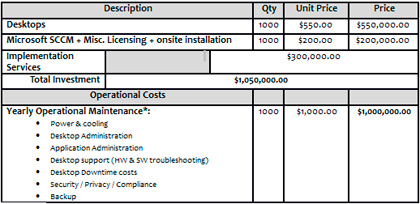

Let's proceed with the following scenario: Gordon Gekko Enterprises has 1,000 physical desktops that are 7 years old running Windows XP and are up against a hardware cycle refresh and an operating system upgrade to Windows 7. Let's also assume the company has done its homework and knows the benefits of desktop virtualization. The company is interested in a ball park price comparison between physical and virtualized desktops. To accomplish this, I am choosing the VDI type of virtualizing desktops. While there are other types that can be used to lower the cost, I'm going to assume worst-case scenario.

The company ran all the proper assessments and identified 15 IOPS per user as an acceptable number. (We'll keep it simple and not go into the different profiles etc...) The company has also identified that it wishes to give each VM running Windows 7 2 GB of memory and 1 vCPU. Again, we are going to ignore application delivery and assume they have that figured out. The company has also identified that they wish to use shared storage in the form of a SAN and have taken the proper steps to avert bootup and login storms as well as anti-virus storms, etc...

Gekko wants to use blade technology to support this environment and its calculations and risk factors accept 60 VMs per host. The math would be as follows:

1000 VMs / 60 VMs per host ≈ 16.7, rounded here to 16 hosts (about 62 VMs per host)

Considering 2GB of memory per VM, this translates into 120GB of memory per host (128GB, of course, is the right configuration). The following tables show the TCO.
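The sizing math can be reproduced with a short Python sketch. The function name is hypothetical. Note that a strict ceiling gives 17 hosts; the post works with 16 hosts, which means slightly more than 60 VMs on each.

```python
import math


def size_hosts(total_vms, vms_per_host, gb_per_vm):
    """Return (hosts needed, memory in GB each host needs for its VMs)."""
    hosts = math.ceil(total_vms / vms_per_host)  # round up so every VM has a home
    memory_gb = vms_per_host * gb_per_vm
    return hosts, memory_gb


hosts, memory_gb = size_hosts(1000, 60, 2)
print(hosts, memory_gb)  # 17 120

# With the 16 hosts used in the post, each host carries 1000 / 16 VMs.
vms_on_16 = 1000 / 16
print(vms_on_16, vms_on_16 * 2)  # 62.5 125.0 (still within a 128GB configuration)
```

Either way, the 128GB configuration leaves a little headroom beyond the memory assigned to the VMs themselves.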

Table 1. Desktop Virtualization TCO

Table 2. Physical Desktop Rollout TCO

When reading these numbers, you can of course draw your own conclusions. Still, I want to discuss a few here and invite comment from you.

Now keep in mind I have put a lot of thought into this, so read the numbers carefully. I have also been very generous with these numbers.

For example, you can get more and better special pricing on servers from manufacturers than you can on desktops. Also note that I listed the cost of acquiring 1,000 new thin clients as optional, simply because you can turn your existing 7-year-old machines into thin clients and use them until they break, and so on.

I did want to list the cost of acquiring 1,000 new thin clients because I was always criticized about ignoring that number. So, for new companies that are just being formed that want to deploy desktop virtualization and have no equipment I have taken that into consideration as well.

I have provided these numbers to ruffle some feathers, stir up some healthy conversation and invite comment from everyone. I really would love to take the pulse of our readers as it pertains to desktop virtualization cost.

Posted by Elias Khnaser on 03/29/2011 at 12:49 PM

Microsoft announced some really cool features with the upcoming System Center 2012. The demos and features are just awesome.

First you can now build private clouds and manage Citrix XenServer in addition to what was already supported (VMware ESX and Hyper-V, of course).

The cool thing about the new release is the way it aggregates physical equipment. You now have the ability to find bare metal servers, communicate with their management port and deploy an image on them. Sure, that's cool, but nothing special; we have seen this before, right? Yet, what's really cool is how you can add it to a resource pool and extend capacity on the fly. What I am very curious to know is, if you can have a cloud that is powered by heterogeneous hypervisors, for example, can resources be pooled from ESX, XenServer and Hyper-V under the same private cloud?

System Center 2012 will now be able to manage mobile devices, which is huge and reinforces a notion that I have been spreading for a long time: Mobile devices -- cell phones, in particular -- are the next PC. System Center 2012 will allow you to manage devices built on Google Android, Apple iOS, Windows Phone 7 and Symbian.

Of course, I would not be this excited about it if it did not touch in some way on desktop virtualization, and the System Center 2012 announcement touched a lot on this subject. You will be able to optimize application delivery to your users based on the device they are using. System Center detects when someone is using an iPad and enforces the iPad policy and delivers applications and desktops that would run optimally in iPad, such as VDI for example. However, if the user is using a laptop, then it would deliver applications using App-V and would allow offline access as well. All seamless, all policy driven, all automated -- and all cool.

Microsoft also mentioned and demonstrated System Center 2012's ability to leverage a single Windows image for multiple applications -- basically, it's application and OS separation, as well as OS and endpoint device separation. Again, it's the modularization of IT and Microsoft's coming to terms with the fact that a desktop does not necessarily mean what is under your desk and that there will be instances where devices used don't necessarily have an OS.

I think System Center 2012 and all its subcomponents is going to be one of the most exciting releases Microsoft has had in a long time.

Posted by Elias Khnaser on 03/24/2011 at 12:49 PM

VMware PVSCSI is a SCSI controller driver optimized to handle heavy I/O loads generated by a virtual machine. I am often asked, "When should one use VMware PVSCSI?" The answer is simple: Use it when virtualizing high I/O applications, such as Microsoft SQL Server or Exchange Server.

VMware recommends that you use the PVSCSI driver when virtual machines are generating over 2,000 IOPS. That is a very high number of IOPS. To put things in perspective for you, a 15K SAS drive generates about 170 randomized IOPS.

That being said, the LSI SCSI driver that is available and used in most cases by default is optimized to handle both low and high I/O and can adjust accordingly. In most cases, the use of the LSI SCSI driver will yield excellent performance and will consume acceptable CPU cycles on the ESX host.

Where VMware PVSCSI shines is when you get into the larger IOPS requirements. In those cases, the PVSCSI driver will consume significantly fewer CPU cycles than the LSI driver.

Now with the release of vSphere 4.1, VMware tweaked the PVSCSI driver for better performance with workloads generating less than 2,000 IOPS. However, the accepted best practice still dictates the use of the LSI driver for all low to medium workloads.

VMware spent a lot of time tweaking the LSI driver for random VM workloads and, as such, it's a victim of its own success. Now, I am sure that with every release of vSphere, the PVSCSI driver will be further enhanced and will get to a point where it can handle all types of workloads without any penalties.
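As a rough rule-of-thumb sketch of the guidance in this post, the Python below picks a controller from an expected IOPS figure and shows how many 15K SAS drives it takes to reach the 2,000 IOPS mark. The function names are hypothetical, the threshold and per-drive numbers are the ones quoted in the text, and RAID write penalties and caching are deliberately ignored.

```python
import math

PVSCSI_THRESHOLD_IOPS = 2000  # VMware's recommendation as quoted in the text
IOPS_PER_15K_SAS = 170        # approximate random IOPS per drive, per the text


def suggest_controller(expected_iops):
    """Suggest a virtual SCSI controller for a VM's expected I/O load."""
    return "PVSCSI" if expected_iops > PVSCSI_THRESHOLD_IOPS else "LSI"


def drives_needed(target_iops, per_drive=IOPS_PER_15K_SAS):
    """Raw drive count needed to deliver target_iops (no RAID penalty, no cache)."""
    return math.ceil(target_iops / per_drive)


print(suggest_controller(500))    # LSI
print(suggest_controller(5000))   # PVSCSI
print(drives_needed(2000))        # 12
```

A single VM that needs a dozen 15K spindles' worth of random I/O is a heavy workload, which is why the LSI driver remains the usual default.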

Posted by Elias Khnaser on 03/22/2011 at 12:49 PM

There is an application virtualization riddle out there, wrapped in a VDI (Virtual Desktop Infrastructure) enigma, inside a vendor frenzy. Everyone wants to virtualize applications, and every vendor tells you to do it differently. So what is the right way of determining when to bake an application as part of the image, virtualize an application, stream it or deliver it via Terminal Services?

I will not give you the standard consultant line of "it depends," but rather I will start by saying that baking the application into the image should be the last-resort approach -- the last-ditch effort when all else fails. I bring this up first because I have seen many vendors recommend, right off the bat, baking it into the image. NO. OK, Eli, but saying no is easy. Why not? Well, for starters, you have now increased the size of that image significantly, but assuming you are working off a master image technology (Linked Clones, Machine Creation, Provisioning, etc.), that is not a huge deal. Or is it? If you install all applications locally, don't your application updates become more frequent, so that you have to constantly update that master image? Will you not be transferring the same issues you have on the physical desktops to the virtual desktops: application conflicts, compatibility, testing, etc.?

In addition, you now require significantly more resources on the virtual infrastructure and the virtual machine itself to support this image, as all processing is happening locally. On the other hand, if you take the approach of VDI with Windows 7, using technologies like Terminal Server to deliver applications, you significantly reduce the resource requirements from a memory and processor standpoint. With this approach, you can most likely get away with a Windows 7 VM with 2 GB of memory or less and 1 or 2 vCPUs, as opposed to at least 4 GB of memory and 2 vCPUs if you bake the applications into the image. The baked-in approach consequently reduces the number of VMs per host, which leads to a bloated project, which leads to a failed project.

The right approach depends on the situation. Once again, let's take the example of implementing VDI: The ideal scenario would be to have the operating system image with nothing on it, just a shell, and then layer applications on top of it using Terminal Services / Citrix XenApp / Ericom or other solutions. This allows you to deliver a rich user experience, all the benefits of VDI, maximum user density and extreme application delivery flexibility. But we all know that is not always possible: sometimes applications do not work from Terminal Server, or they lack support for certain hardware peripherals.

So now do I bake it into the image, Eli? Possibly. If you don't have any application virtualization technologies, then yes. If you have application virtualization or streaming, then that would be your second choice. It would liberate you from having to slip the application into the image. But why is streaming my second choice? Why can't it be my first choice? I don't want to invest in Terminal Services. Application virtualization, or streaming for that matter, takes up the same resources as an application baked into the OS would. Remember, the application is running locally; it is just not installed locally. So again this would significantly increase resource requirements, whereas Terminal Server uses a shared application model, requires fewer resources and delivers higher density.

So to summarize things, here is a decision matrix on how to go about determining which technology to use:

- If you can use Terminal Server, that should be your first choice. Terminal Server offers easier application installation, management, higher density, and requires fewer resources at the endpoint device and at the Terminal Server.

- Your second choice is Application Virtualization / Streaming. This technology is more complicated when compared with Terminal Server. The application packaging process is complicated, and so is the ongoing update process. It offers easy management and application isolation. However, it does require the same amount of resources at the endpoint as applications that are installed locally.

- If neither solution gets the job done, then, and only then, bake it into the image.
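The decision matrix above can be written as a small Python function. This is only a sketch of the order of preference; the function and parameter names are hypothetical, and deciding whether an application actually works in each mode still takes testing.

```python
def choose_delivery(works_on_terminal_server, works_virtualized):
    """Pick an application delivery method in the order of preference above."""
    if works_on_terminal_server:
        return "Terminal Server"                         # first choice
    if works_virtualized:
        return "Application Virtualization / Streaming"  # second choice
    return "Bake into image"                             # last resort


print(choose_delivery(True, True))    # Terminal Server
print(choose_delivery(False, True))   # Application Virtualization / Streaming
print(choose_delivery(False, False))  # Bake into image
```

Because Terminal Server is checked first, an application that works in both modes always lands on the higher-density option.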

Now that we have laid all this out, it is important to keep in mind that some applications will not work in Terminal Server, but will work virtualized (and vice-versa). Some applications will not work virtualized or on Terminal Server, and as a result, you have no choice but to install them as part of the image. This is why we are using desktop virtualization in the first place: It provides the flexibility of using multiple technologies to solve a particular challenge. Terminal Server alone does not always work, VDI alone does not make sense, and Application Virtualization does not fit all circumstances. It really is a blend.

Posted by Elias Khnaser on 03/17/2011 at 12:49 PM

As organizations continue on the journey of virtualizing more servers, there's a need for more attention and customization in order to yield the desired performance benefits while taking advantage of the virtualization drivers. This is especially true for those servers that host applications dubbed as tier-1 applications, such as SQL, Exchange and Citrix XenApp.

Citrix XenApp is particularly interesting when virtualized because the server typically consumes a lot of CPU, memory and disk I/O. So, addressing these categories in particular is imperative. Here are some best practices on how to tweak Citrix XenApp on VMware vSphere 4.1.

At the vSphere Infrastructure Level

At the virtual infrastructure level, the use of VMware vSphere 4.1 or newer with vStorage APIs for Array Integration (VAAI) is imperative. VAAI offloads certain tasks previously executed in the hypervisor onto the storage array; however, the most important feature of VAAI as it pertains to virtualizing XenApp is that it offloads SCSI locking to the array. When you have multiple XenApp VMs on the same LUN and they are simultaneously utilizing the disk, the benefits of VAAI will surely be apparent.

If you have not upgraded to vSphere 4.1, I strongly recommend it, especially if you intend on virtualizing tier-1 applications. Check with your storage manufacturer to make sure your array supports VAAI. In some cases you may have to upgrade the firmware on your controller to take advantage of it.

Another tweak to the vSphere host is again related to disk performance under heavy workloads, and XenApp most definitely fits that category. This tweak addresses the maximum number of simultaneous outstanding disk requests to a specific LUN.

By default, the limit is set to 32 and can be increased to a maximum of 256. This value should be set to the maximum as a regular performance best practice, and in particular when tier-1 applications are involved. This value can be changed by navigating to the Configuration tab of a vSphere host, selecting Advanced Settings and selecting the Disk node. You should then browse to Disk.SchedNumReqOutstanding and set it to 256.

At the VM Virtual Hardware Level

It is critical that the virtual hardware for a XenApp deployment be configured with the most efficient components, as follows:

- Disable and remove any unnecessary virtual hardware components such as CD-ROM and Floppy.

- When configuring a virtual network interface card, select the VMXNET3 adapter. This provides the most efficient vNIC possible and yields the best performance while minimizing load on the vSphere host CPU.

- The ideal disk controller for XenApp is the LSI Logic SAS Controller. While converting a XenApp server from physical to VM is strongly discouraged (particularly because changing the disk controller results in an unstable operating system), if you have to convert, try to change the disk controller, as this will affect performance.

- Edit the VM settings, select the Options tab, and configure CPU and memory hardware assist appropriately. By default it is set to Automatic; your testing might reveal a performance gain if it is hardcoded.

For 32-bit Windows Operating Systems

When using a 32-bit operating system, you are limited by the amount of memory that 32-bit Windows can effectively take advantage of. Therefore, I suggest a configuration of 4096 MB of virtual RAM and one or two vCPUs. While you can most certainly configure four vCPUs, XenApp does not scale linearly very well. As such, I recommend going no higher than two vCPUs before adding another XenApp VM.

Ideally, if you have to use a 32-bit operating system, use Windows Server 2008. Aside from the fact that Windows Server 2003 is almost end of life, Windows Server 2008 has many specific advantages, including one that automatically aligns the virtual disk to the underlying storage where it resides. This is significant from a disk I/O performance standpoint.

However, if you must use Windows Server 2003, I suggest splitting the operating system and the data drive into separate VMDKs for the simple sake of being able to disk align the data drive. In Windows Server 2003, disk alignment is a manual process that should be performed on the data drive.

To align the drive on Windows Server 2003, follow these steps:

- Add a Virtual Disk to the VM settings

- In the operating system, do not use the GUI to initialize the partition. Instead, open a command line and run the following command: diskpart

- Then type list disk; this will display a list of available disks

- Use the select disk command in conjunction with the appropriate disk number to set the focus on the disk you want

- Finally, type create partition primary align=64

- You can now use the Disk Management MMC to assign the disk a drive letter and format it
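To see why align=64 matters, here is a minimal sketch of the arithmetic behind the steps above. It assumes 512-byte sectors and a 64 KB boundary (the right boundary depends on your array), and it compares against the legacy partition start at sector 63 that Windows Server 2003 uses by default. The helper names are hypothetical.

```python
SECTOR_BYTES = 512           # assumption: classic 512-byte sectors
LEGACY_OFFSET_SECTORS = 63   # default partition start on Windows Server 2003


def offset_bytes_from_align_kb(align_kb: int) -> int:
    """diskpart's align= value is given in KB; convert it to a byte offset."""
    return align_kb * 1024


def is_aligned(offset_bytes: int, boundary_kb: int = 64) -> bool:
    """True when the partition offset falls on a multiple of the boundary."""
    return offset_bytes % (boundary_kb * 1024) == 0


def diskpart_script(disk_number: int, align_kb: int = 64) -> str:
    """Build the two diskpart commands from the steps above as script text."""
    lines = [
        f"select disk {disk_number}",
        f"create partition primary align={align_kb}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    legacy = LEGACY_OFFSET_SECTORS * SECTOR_BYTES
    print("legacy offset aligned:", is_aligned(legacy))
    print("align=64 offset aligned:", is_aligned(offset_bytes_from_align_kb(64)))
    print(diskpart_script(1), end="")
```

The generated text can be saved to a file and passed to diskpart with its /s switch if you prefer not to type the commands interactively. Double-check the disk number with list disk first, since the script creates a partition on whatever disk it selects.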

You will not be able to align the system drive, and you don’t need to, considering that all your applications will be installed on the data drive anyway and that is the drive that will generate the disk I/O when the applications are used.

Multiple Drives for OS and Applications

I have read many times about the benefits of splitting the operating system and the applications onto separate drives. The fact of the matter is, if you are splitting them up logically but they physically reside on the same disk, then there is no performance benefit whatsoever. If you are splitting them up on different physical disks of different tier classes (e.g., SSD, SAS, SATA) with different RAID configurations, then a performance gain is noticeable, albeit not significant.

What I am trying to say here is, if you are going to have dedicated VMDKs for the operating system and applications that live on the same datastore, then you might as well have one large C: drive, as you will gain no performance at all. But if you plan on splitting your VMDKs across different datastores that have different tiers of disk and RAID levels, then go for it.

General Recommendations

Disable the NTFS last access time attribute. Updating it is a chatty process that keeps track of the last time a file was accessed. Disabling it relieves the operating system of having to constantly read and write this information. To disable it, run the following command:

fsutil behavior set disablelastaccess 1

Finally, as with the build and setup of traditional XenApp servers, any unnecessary processes, services, USB, screen savers, animations, etc., should be disabled for best performance.

This process may seem long and exhausting at first, but isn’t that the case for every build of XenApp? Those of you who have been working with XenApp for a while can appreciate this. That being said, virtualization makes it easier for us to create templates and deploy from these templates, thereby streamlining the deployment of a XenApp server.

Posted by Elias Khnaser on 03/14/2011 at 12:49 PM | 3 comments

What comes to mind as one of the biggest roadblocks to mass desktop virtualization adoption? Storage might come immediately to mind for most of you. We constantly hear about storage in terms of IOPS, cache and how much it will cost to implement desktop virtualization. Some have taken it even further by suggesting local disk as an alternative, which gives better performance and lower cost at the expense of virtualization features. Is there a way to have the best of both worlds?

Yes. I give you Xiotech Hybrid ISE, a 3U box with hybrid SSD and SAS drives. To be exact, it has 20 SSD drives and 20 10K SAS drives, which collectively generate up to 60,000 sustained IOPS and a usable capacity of about 15TB.

If you're not impressed, what if I told you it has built-in adaptive intelligence that can move hot sheets of data between disk tiers for faster processing? It can do that in real-time. The key takeaway here is that it's "adaptive," which means the system will learn on its own specific application patterns and will perform data migration accordingly when pattern evidence suggests a performance gain.

So you may be thinking, okay, Eli, we have seen this before with dynamic sub LUN tiering (not talking about automatic tiering, which typically occurs every 24 hours; dynamic sub LUN tiering is live). It does not help with desktop virtualization, because it cannot react fast enough to weather a boot storm or login storm, so we are back at square one.

Not really. The Hybrid ISE also has application profiling capabilities, which means you can configure the system to record the pattern of a certain application, then save that as an application profile. If the system learns that a boot storm happens every day at 8 a.m., Hybrid ISE will move the affected sheets to SSD at 7:45 and then move them back to 10K SAS at 8:30.
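As a rough illustration of the idea (not Xiotech's implementation or API), a time-based profile can be thought of as a list of windows that map a time of day to a disk tier. The `ProfileWindow` class and `tier_at` function below are hypothetical names for this sketch; the 7:45 and 8:30 times come from the example above.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class ProfileWindow:
    """One scheduled window during which data is kept on a given tier."""
    start: time
    end: time
    tier: str


def tier_at(now: time, windows, default_tier: str = "10K SAS") -> str:
    """Return the tier for the given time of day.

    Windows are half-open: the start time is included, the end time is not.
    """
    for window in windows:
        if window.start <= now < window.end:
            return window.tier
    return default_tier


# The 8 a.m. boot storm example: promote at 7:45, demote at 8:30.
BOOT_STORM_PROFILE = [ProfileWindow(time(7, 45), time(8, 30), "SSD")]

if __name__ == "__main__":
    for t in (time(7, 30), time(8, 0), time(8, 45)):
        print(t.strftime("%H:%M"), tier_at(t, BOOT_STORM_PROFILE))
```

A fixed window like this only helps while the storm keeps to its schedule; anything that happens after 8:30 is served from the default tier.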

One downside I see right off the bat from a desktop virtualization perspective is that while you can use application profiling and that may suit your needs at this moment, application profiling is a very static process that needs constant updating in order to stay effective. What I mean by that is, today your boot storms may be happening at 8 a.m., but in a few months, that might change to 8:30 a.m. The system does not have built-in intelligence to allow application profiling that's also dynamic. (Hint, hint, Xiotech!)

Even so, Hybrid ISE is a very welcome product for desktop virtualization enthusiasts, such as yours truly, and it could potentially be used as the storage platform for virtualizing desktops. The platform almost feels like it was purpose-built for desktop virtualization, and with support for both VMware and Citrix storage adapters, what else do you need?

Hybrid ISE also opens the door for more desktop virtualization storage innovations from other manufacturers. I know for a fact that Hitachi Data Systems, BlueArc, IBM and EMC are also building innovations to take down a potential 600 million physical desktops worldwide.

Posted by Elias Khnaser on 03/08/2011 at 12:49 PM | 2 comments

The release of Citrix XenDesktop 5 introduced a powerful PowerShell engine that allows you to tweak and script almost every aspect of XenDesktop 5. This time, I want to show you a handy little tip that I frequently use when troubleshooting or even during a proof of concept.

When users connect to a XenDesktop 5 pooled VM, the default behavior is to restart that VM as soon as the user logs off. This action flushes all the changes that the user has made during the session and restores the VM to its pristine state, ready to accept a new user. This behavior, of course, only affects VMs that are based on a master image using MCS in Pooled mode or using Streamed with Provisioning Services.

While this behavior is very desirable when everything is working well and there are no issues, it can quickly become very annoying when you are troubleshooting, because the VM will reboot every time you log off. This is also an annoyance if you are in a POC and need to log off but don't want the VM to reboot.

To change this log-off behavior, you can use the PowerShell tab from the Citrix Desktop Studio console as follows:

- Load the correct Citrix modules that enable this feature. In most cases, these modules are enabled by default. But just in case they are not, run this command: Add-PSSnapin Citrix.*

- Identify which Desktop Group you want to apply this change to. You would typically do this against the Desktop Group you are working on. To list the available Desktop Groups, run this: Get-BrokerDesktopGroup

- Once you have identified the Desktop Group, change the log-off behavior from "reboot VM" to "don't reboot VM" by running this command: Set-BrokerDesktopGroup -Name "DG Name" -ShutdownDesktopsAfterUse $False

When you are done troubleshooting or if for any reason you want to change the log-off behavior back to reboot VMs, run this command: Set-BrokerDesktopGroup -Name "DG Name" -ShutdownDesktopsAfterUse $True

PowerShell is very useful when working with software like XenDesktop, where you need to script changes.

Posted by Elias Khnaser on 03/02/2011 at 12:49 PM | 1 comment