Microsoft's New OpenAI/.NET Blog Provides Prompt Engineering Tips

Microsoft's new blog series on OpenAI and .NET is out with a new post that explains all the ins and outs of prompt engineering to get the best out of GPT large language models (LLMs).

Microsoft is infusing advanced AI functionality across its products and services on the strength of a $10 billion-plus investment in OpenAI, now widely viewed as the leader in generative AI thanks to advancements like the sentient-sounding chatbot, ChatGPT.

That partnership resulted not only in Microsoft's Azure OpenAI Service, but also the debut of the OpenAI/.NET blog series on April 12.

The second installment, published last Friday, aims to help developers "Level up your GPT game with prompt engineering."

Luis Quintanilla, a program manager in Microsoft's Developer Division, explains how devs can refine the inputs they provide to OpenAI models -- like GPT-4 -- to produce more relevant responses.

That ability is so important in our new AI-dominated tech world that it has popularized the discipline of "prompt engineering," for which salaries of up to $335,000 per year are being offered.

"Prompt engineering is the process and techniques for composing prompts to produce output that more closely resembles your desired intent," Quintanilla said.

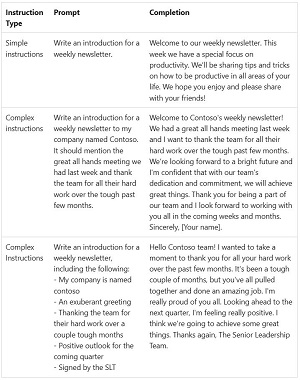

Prompt/Completion Examples (source: Microsoft).

His post explains the structure of a prompt (user input provided to a model to generate responses, called "completions") while also listing these tips for composing prompts:

- Be clear and specific: "When crafting a prompt, the less details you provide, the more assumptions the model needs to make. Place boundaries and constraints in your prompt to guide the model to output the results you want."

- Provide sample outputs: "The quickest way to start generating outputs is to use the model's preconfigured settings it was trained on. This is known as zero-shot learning. By providing examples, preferably using similar data to the one you'll be working with, you can better guide the model to produce better outputs. This technique is known as few-shot learning."

- Provide relevant context: "Models like GPT were trained on millions of documents and artifacts from all over the internet. Therefore, when you ask it to perform tasks like answering questions and you don't limit the scope of resources it can use to generate a response, it's likely that in the best case, you will get a feasible answer (though maybe wrong) and in the worst case, the answer will be fabricated."

- Refine, refine, refine: "Generating outputs can be a process of trial and error. Don't be discouraged if you don't get the output you expect on the first try. Experiment with one or more of the techniques from this article and linked resources to find what works best for your use case. Reuse the initial set of outputs generated by the model to provide additional context and guidance in your prompt."
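As a rough illustration of the "be clear and specific" and "provide sample outputs" tips, the sketch below assembles a few-shot prompt as plain text. It is not taken from Quintanilla's post: the function name `build_few_shot_prompt`, the `Input:`/`Output:` labels and the sample reviews are all hypothetical, and Python is used only for brevity. The resulting string would be sent to a model with whatever client library a project already uses.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a prompt: instruction, worked examples, then the new input.

    Hypothetical helper for illustration; the "Input:"/"Output:" labels
    are an assumed convention, not something a model requires.
    """
    lines = [instruction.strip(), ""]
    for sample_input, sample_output in examples:
        lines.append(f"Input: {sample_input}")
        lines.append(f"Output: {sample_output}")
        lines.append("")
    # End on an open "Output:" cue so the completion fills in the answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    # A specific instruction with an explicit constraint on the answer format.
    instruction=(
        "Classify the sentiment of each review as Positive or Negative. "
        "Answer with one word."
    ),
    # Two made-up examples; passing an empty list gives a zero-shot prompt.
    examples=[
        ("The setup took five minutes and everything just worked.", "Positive"),
        ("The app crashes every time I open a file.", "Negative"),
    ],
    query="Support never answered my ticket.",
)
print(prompt)
```

Passing an empty `examples` list yields the zero-shot version of the same prompt, which makes it easy to compare the two approaches while refining.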

The new blog post complements other Microsoft guidance on prompt engineering, such as April's "Introduction to prompt engineering," which lists its own best practices, some quite like Quintanilla's:

- Be Specific: Leave as little to interpretation as possible. Restrict the operational space.

- Be Descriptive: Use analogies.

- Double Down: Sometimes you may need to repeat yourself to the model. Give instructions before and after your primary content, use an instruction and a cue, etc.

- Order Matters: The order in which you present information to the model may impact the output. Whether you put instructions before your content ("summarize the following…") or after ("summarize the above…") can make a difference in output. Even the order of few-shot examples can matter. This is referred to as recency bias.

- Give the model an "out": It can sometimes be helpful to give the model an alternative path if it is unable to complete the assigned task. For example, when asking a question over a piece of text you might include something like "respond with 'not found' if the answer is not present." This can help the model avoid generating false responses.
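Several of these practices can be combined in a single prompt template. The following is a minimal sketch under stated assumptions, not Microsoft's implementation: the helper name `build_grounded_prompt`, the `---` delimiters and the exact wording are hypothetical. It restricts the model to supplied text, repeats the key instruction before and after the content, and gives the model an "out."

```python
def build_grounded_prompt(context: str, question: str) -> str:
    """Build a prompt that limits answers to the supplied context.

    Hypothetical helper for illustration; the delimiters and phrasing
    are assumptions, not a required format.
    """
    fallback = "not found"
    instruction = (
        "Answer the question using only the text between the --- markers. "
        f"Respond with '{fallback}' if the answer is not present."
    )
    return "\n".join([
        instruction,                       # instruction before the content
        "---",
        context.strip(),                   # the only source the model may use
        "---",
        f"Question: {question.strip()}",
        # Repeat the constraint after the content ("double down").
        f"Remember: use only the text above, or respond with '{fallback}'.",
        "Answer:",                         # cue for the completion
    ])


grounded = build_grounded_prompt(
    context="The OpenAI/.NET blog series debuted on April 12.",
    question="When did the blog series debut?",
)
print(grounded)
```

Because order can matter, swapping the position of the instruction and the context in this template is one of the cheaper experiments to run when outputs are off target.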

Yet more prompt engineering guidance can be found in Microsoft posts including April's "Prompt engineering techniques" post and "The art of the prompt: How to get the best out of generative AI," published earlier this month.

The next post in the OpenAI/.NET blog series will go into more detail about ChatGPT and how developers can use OpenAI models in more conversational contexts.

About the Author

David Ramel is an editor and writer at Converge 360.