In-Depth

Microsoft Security Copilot & Agents

When ChatGPT first entered the collective consciousness and became the fastest growing consumer technology ever, there was a fair bit of handwringing in the cybersecurity space. AI was going to churn out malware automatically, produce infinite variants of flawless phishing emails, analyze firewall configurations in real time and find unknown vulnerabilities to exploit. By and large, most of these risks haven't materialized, and indeed, at least for now, AI has mostly popped up all over cyber defenders' tools instead.

Microsoft released Security Copilot about a year ago, and recently at their Secure event announced their new Security Copilot agents. In this article I'll look at Security Copilot, what it can and can't do, and dive into these agents, followed by my own take on the overall usefulness, the licensing model and where I see AI in Microsoft's security tools going in the future.

Security Copilot

In a true sign that there are definitely too many marketing people at Microsoft compared to engineers, this product has already had one name change, and it's not even a walking toddler yet -- originally it was Copilot for Security.

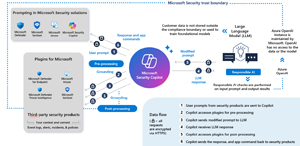

It's built on one of the latest OpenAI models (they update the base LLM regularly) and runs in Azure. On top of that, Microsoft layers a security-specific orchestrator, their Threat Intelligence (TI) database that's updated in real time, and specific skills for each of the areas where it can be used.

It's available in two modes, the standalone UI at securitycopilot.microsoft.com and embedded in the various portals such as Entra, Defender XDR, Defender for Cloud, and Purview. Initially targeted at SOC analysts, the scope for who can benefit from this tool has expanded to data governance folks in Purview, device administrators in Intune and identity managers in Entra.

Here you can see the flow of a prompt a user has entered. There are two takeaways: first, your prompt is both pre- and post-processed by the applicable plugins (Intune, Sentinel or Defender for Endpoint, for example); second, Responsible AI is built in to make sure the user isn't entering a malicious prompt and that the response back to the user isn't dodgy.

Security Copilot Prompt Flow

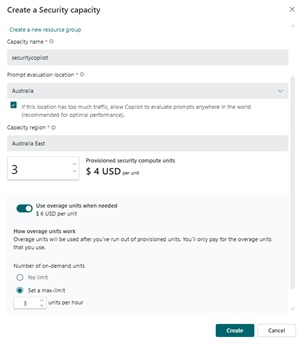
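The flow described above can be sketched roughly as a pipeline. This is only an illustration of the ordering (safety check, plugin pre-processing, model call, plugin post-processing, safety check); every name here (`Plugin`, `handle_prompt`, `passes_safety_check`, the blocked phrase) is hypothetical and not part of any Security Copilot API, and the real checks are certainly far more sophisticated.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-ins: none of these names are real Security Copilot APIs.
BLOCKED_TERMS = ("ignore previous instructions",)


def passes_safety_check(text: str) -> bool:
    """Toy Responsible AI gate: reject text containing a blocked phrase."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


@dataclass
class Plugin:
    name: str
    pre: Callable[[str], str]   # adds product context to the prompt
    post: Callable[[str], str]  # shapes the raw model response


def handle_prompt(prompt: str, plugins: List[Plugin], model: Callable[[str], str]) -> str:
    # 1. Check the incoming prompt before anything else sees it.
    if not passes_safety_check(prompt):
        return "Prompt rejected by safety check."
    # 2. Let each applicable plugin pre-process the prompt.
    for plugin in plugins:
        prompt = plugin.pre(prompt)
    # 3. Call the model (here just a function supplied by the caller).
    response = model(prompt)
    # 4. Let each plugin post-process the response.
    for plugin in plugins:
        response = plugin.post(response)
    # 5. Check the outgoing response before returning it.
    if not passes_safety_check(response):
        return "Response withheld by safety check."
    return response


# Illustrative plugin and a fake model that just echoes what it received.
sentinel = Plugin(
    name="Sentinel",
    pre=lambda p: p + " [context: Sentinel incidents]",
    post=lambda r: "Sentinel summary: " + r,
)


def echo_model(prompt: str) -> str:
    return "model saw -> " + prompt


print(handle_prompt("List critical incidents", [sentinel], echo_model))
```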

Let's start with the standalone experience. You deploy it to your tenant by creating capacity for it to run on, measured in Secure Compute Units (SCUs). These are priced at $4 USD per hour, per SCU, and Microsoft recommends starting with at least three (the maximum is 100) for testing. I delivered a three-day course on Security Copilot recently and let me tell you, three SCUs don't get you very far. Microsoft also recommends that you leave them available 24x7. When I delivered that course, any usage beyond the three provisioned SCUs in my demos was provided for free. Since then Microsoft has started charging for this overage, at $6 per SCU! (I'll come back to the cost question at the end of the article.)

Create Security Copilot Capacity

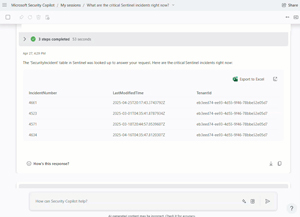
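To put the SCU pricing above into monthly terms, here is a back-of-the-envelope sketch. The two rates come from the figures quoted in this article; the 30-day month and the reading of the overage price as "per SCU-hour" are my assumptions, so treat the output as a rough estimate rather than a quote.

```python
SCU_HOURLY_USD = 4.0       # provisioned rate quoted in the article
OVERAGE_HOURLY_USD = 6.0   # overage rate quoted in the article (assumed per SCU-hour)
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month, running 24x7


def monthly_cost(provisioned_scus: int, overage_scu_hours: float = 0.0) -> float:
    """Estimated monthly bill in USD for always-on SCUs plus any overage."""
    provisioned = provisioned_scus * SCU_HOURLY_USD * HOURS_PER_MONTH
    overage = overage_scu_hours * OVERAGE_HOURLY_USD
    return provisioned + overage


print(monthly_cost(3))      # 8640.0 (three SCUs left running all month)
print(monthly_cost(3, 20))  # 8760.0 (the same, plus 20 SCU-hours of overage)
```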

Here you can see the standalone experience in action, summarizing critical incidents in Sentinel for me.

Security Copilot Standalone Experience

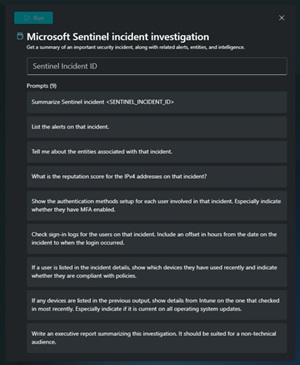

Interactive prompting is all good, but security investigations are often about taking what you learn in one step into the next one, and writing a report at the end. This is encapsulated in Promptbooks, where two or more prompts are run in sequence. Some are built in, and you can create your own and (given the right permissions) share them with the rest of your organization.

Security Copilot Prompt Book
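Conceptually, a promptbook is just an ordered list of prompts where each step can build on the earlier answers. The sketch below illustrates that idea only: `run_promptbook` and `fake_ask` are hypothetical names, the prompts are made up, and the real product handles context in its own way.

```python
from typing import Callable, List


def run_promptbook(prompts: List[str], ask: Callable[[str], str]) -> List[str]:
    """Run prompts in order; each prompt is sent together with earlier answers."""
    answers: List[str] = []
    for prompt in prompts:
        context = "\n".join(answers)
        full_prompt = f"{context}\n{prompt}" if context else prompt
        answers.append(ask(full_prompt))
    return answers


def fake_ask(text: str) -> str:
    """Stub assistant: reports how many lines of input it received."""
    return f"answer based on {len(text.splitlines())} line(s)"


# Hypothetical three-step investigation ending in a report.
steps = [
    "Summarize the incident",
    "List the affected entities",
    "Write an executive report",
]
for answer in run_promptbook(steps, fake_ask):
    print(answer)
```

With the stub, the growing line count in each answer shows that later prompts really do receive the earlier answers as context.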

The last thing analysts need is another portal to work in, though, and while there are some tasks that can only be done in the standalone UI (activating plugins, creating and running promptbooks), most admins will interface with the embedded experiences.

Security Copilot Embedded

Initially Security Copilot was only embedded in the Defender XDR portal, appearing as a button that, when clicked, opened a fixed-size panel on the right where you could prompt and investigate whatever incident you were working on.

Incident Summary in Defender XDR

Since then, it has appeared inside the Entra portal, where at first it only gave you some background information about identity risks but is now being enhanced with many more skills to help with identity tasks. It can now (in private preview but officially announced) help you streamline your identity lifecycle workflows (automating joiner, mover and leaver steps for your user accounts). It can also help you with application risks, identifying app / service principal owners so you can find the right person to talk to about unused apps, and checking whether apps have verified publishers so you understand your risks.

It also handles complex questions and asks clarifying questions back to you when required ("there are three user accounts named Paul, which one did you mean?").

Security Copilot is also embedded in Purview, where it helps you triage Data Loss Prevention (DLP) and Insider Risk Management (IRM) alerts. It's also in Intune where it can help you optimize your configuration and compliance policies, and help you troubleshoot settings.

Oh, and Security Copilot has skills to translate natural language into Kusto Query Language (KQL) to help you in Advanced Hunting in XDR and Sentinel. It also understands Keyword Query Language (KeyQL), which is used in Purview to create content searches or eDiscovery cases. Furthermore, it can analyze malicious / obfuscated scripts and break down their logic, something that's a rare skill, even in a larger SOC team.

Security Copilot Goes 007

That's Security Copilot up until recently, a custom prompt-based interface in various Microsoft security / IT products. But it never took any action, simply providing you a handy shortcut to existing information, speeding up triage and helping junior analysts learn on the job with an AI assistant.

Now announced, with one in private preview at the moment, are Security Copilot agents: specialized, independent AI agents that run on a schedule or on demand to complete a particular task, and that can learn and adapt to your environment over time. The Microsoft ones are:

- Conditional Access Optimization Agent

- Phishing Triage Agent

- Alert Triage Agents for Data Loss Prevention and Insider Risk Management

- Vulnerability Remediation Agent

- Threat Intelligence Briefing Agent

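There are also five partner-developed agents:

- Privacy Breach Response Agent

- Network Supervisor Agent

- SecOps Tooling Agent

- Alert Triage Agent

- Task Optimizer Agent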

Let's start with the Microsoft ones. The Conditional Access (CA) Optimization Agent runs once a day to check whether there are any applications not protected by a CA policy, or any newly added users who aren't covered by CA policies.

Conditional Access Policy Agent After First Run (courtesy of Microsoft)
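At its core, the daily check described above is a coverage-gap calculation: take everything that should be protected and subtract whatever the policies already cover. The sketch below shows that idea with plain sets. The data model, the names and the sample tenant are all hypothetical, and real CA policies (with "all apps" scopes, group targeting and exclusions) are far richer than this.

```python
from typing import Dict, List, Set

Policy = Dict[str, Set[str]]  # hypothetical shape: {"apps": {...}, "users": {...}}


def uncovered(items: Set[str], policies: List[Policy], key: str) -> Set[str]:
    """Return the items that no policy lists under the given key."""
    covered: Set[str] = set()
    for policy in policies:
        covered |= policy.get(key, set())
    return items - covered


# Made-up tenant inventory and policies, for illustration only.
all_apps = {"HR Portal", "Payroll", "Wiki"}
all_users = {"alice", "bob", "carol"}
policies: List[Policy] = [
    {"apps": {"HR Portal", "Payroll"}, "users": {"alice", "bob"}},
    {"apps": {"Payroll"}, "users": {"alice"}},
]

print(sorted(uncovered(all_apps, policies, "apps")))    # ['Wiki']
print(sorted(uncovered(all_users, policies, "users")))  # ['carol']
```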

The Phishing Triage Agent, on the other hand, assists with a well-known time sink in SOC teams: investigating user-submitted phish reports. In my experience, more than 90% of these are benign or spam, but of course you can't just assume this. The agent gathers information about each user-reported phishing email and renders a verdict, with a natural-language explanation of how it got there. It will also learn from analyst feedback on particular emails for this tenant.

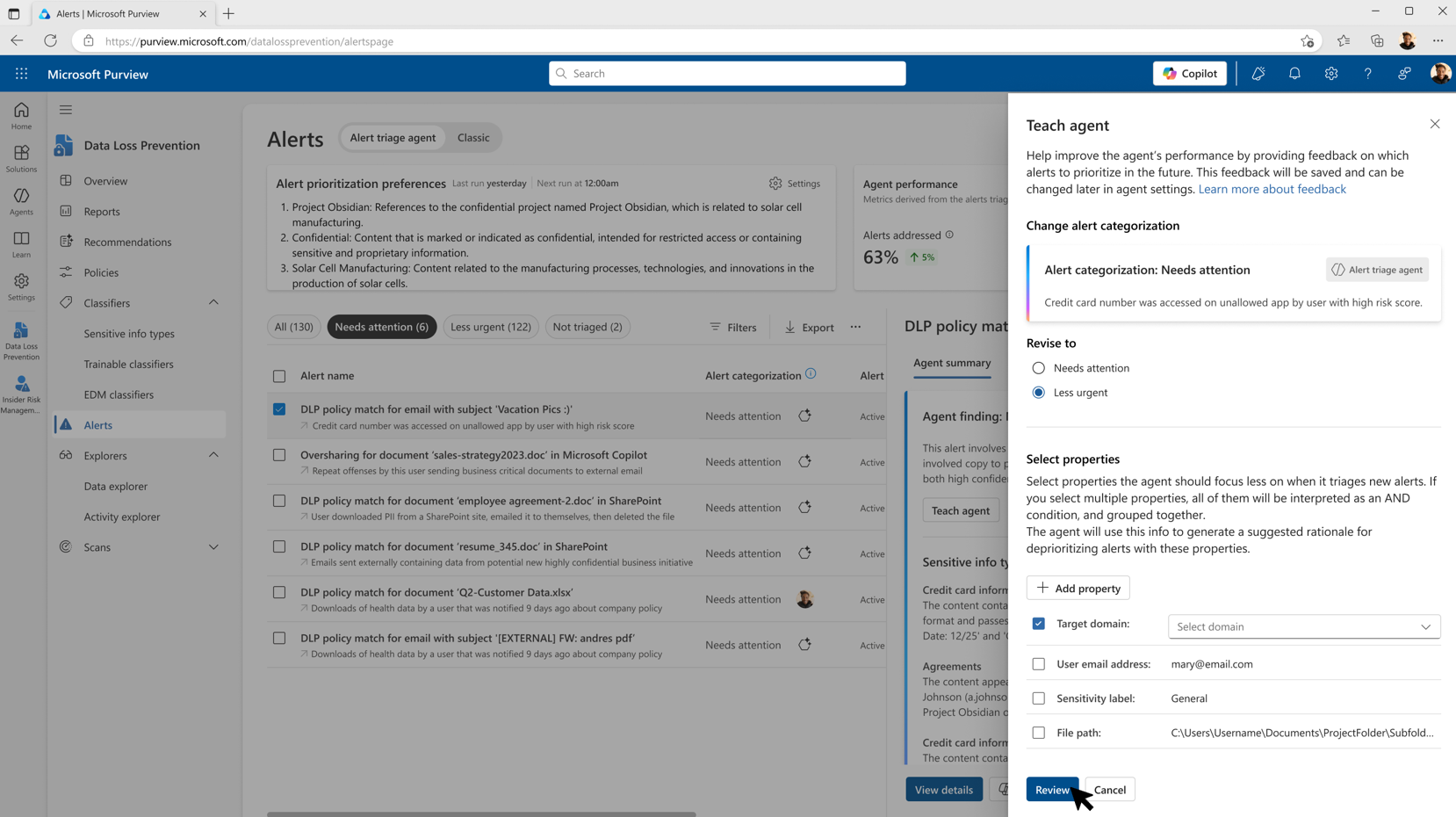

For Purview, the agent creates a prioritized queue of DLP and IRM alerts with the biggest risk to the organization, again with a comprehensive explanation as to why it made that determination. This agent will also learn over time, based on analyst input.

Purview Alert Triage Agent Feedback Loop (courtesy of Microsoft)

In Intune, the Vulnerability Remediation Agent will evaluate the risk of endpoint vulnerabilities (based on data from Defender for Endpoint Vulnerability Management) and create a list of prioritized remediation actions.

Intune Agent - Recommended Remediation Step (courtesy of Microsoft)

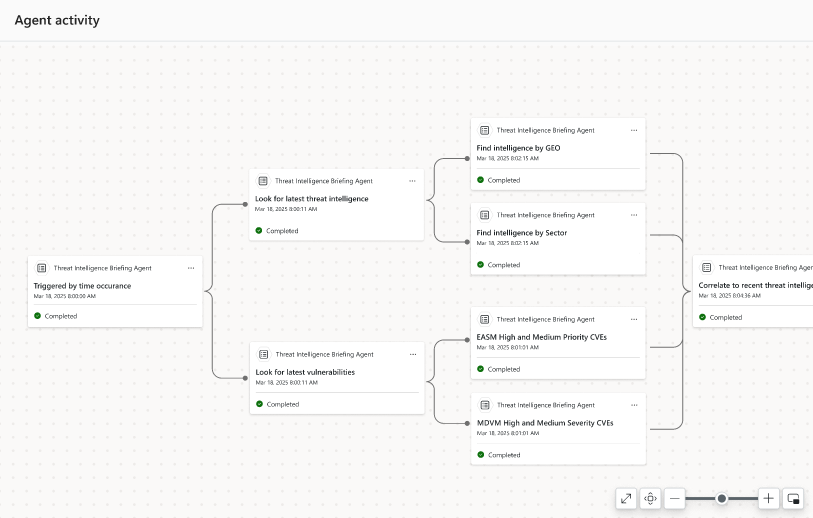

Finally, the Threat Intelligence Briefing Agent, made for Cyber Threat Intelligence (CTI) analysts, produces daily summaries and detailed technical analysis of actively exploited vulnerabilities and their potential impact on the organization. It uses signals from Defender for Threat Intelligence, Defender Vulnerability Management (if deployed), and Defender External Attack Surface Management. It also shows you how it arrived at its result in a graph, so that you can start building up trust with it.

Threat Intelligence Briefing Agent Reasoning (courtesy of Microsoft)

The third-party agents are an interesting taste of what's to come (there are already 32 third-party plugins for Security Copilot). The Privacy Breach Response agent looks at a data breach in terms of the data, region and regulations involved, and gives teams guidance on how to handle it.

The Network Supervisor Agent monitors VPN, gateways or Site2Cloud connections and gathers details about any failures. The SecOps Tooling agent analyzes your SOC and deployed controls and makes suggestions for optimizations. The Alert Triage agent provides context to help analysts make confident decisions, whereas the Task Optimizer agent helps by forecasting and prioritizing the most critical threat alerts.

Conclusion

With Security Copilot, more than with any previous Microsoft product or service, I feel we're watching the plane being built while it's in the air. It's a unique offering because it's popping up in so many different products and can do so many different things. There are many other AI solutions for cybersecurity, but none of them cover as wide an area as Security Copilot does. It's also developing at a furious pace, with new features and plugins showing up weekly.

However, as someone who's using Microsoft's security stack to protect my SMB clients, I'm disappointed by the current cost model. The cost of running Security Copilot in each client's tenant -- even if I used automation to scale it down during non-work hours -- is simply prohibitive. And apart from using it for Sentinel that's connected via Azure Lighthouse, today the capacity must be created in each client's tenant. There's no way to buy SCUs as the MSSP and then use them in different tenants as the need arises.
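To illustrate why scaling down outside work hours still doesn't rescue the numbers for a small MSSP, here is a rough comparison. Only the $4 per SCU-hour rate comes from this article; the 30-day month, the 10-hour working day, the 22 working days and the ten-client count are assumptions I picked for the example.

```python
SCU_HOURLY_USD = 4.0  # provisioned rate quoted in the article


def monthly_scu_cost(scus: int, hours_per_day: float, days_per_month: int) -> float:
    """Monthly USD cost of keeping `scus` provisioned for the given schedule."""
    return scus * SCU_HOURLY_USD * hours_per_day * days_per_month


# Assumed schedules for one tenant with three SCUs.
always_on = monthly_scu_cost(3, 24, 30)   # running 24x7 for a 30-day month
work_hours = monthly_scu_cost(3, 10, 22)  # 10 hours a day, 22 working days

clients = 10  # assumption: an MSSP looking after ten SMB tenants
print(always_on)             # 8640.0 per tenant
print(work_hours)            # 2640.0 per tenant
print(work_hours * clients)  # 26400.0 across all tenants
```

Even the scaled-down schedule, multiplied across separate tenants that each need their own capacity, adds up quickly.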

And even for larger businesses with bigger budgets, having the tool show up for administrators in many different roles virtually guarantees heavy usage, which is almost impossible to budget for accurately.

I think Security Copilot is the new shiny for Microsoft, and I'm worried that they'll pour so many features into it that they'll forget about those of us who can't afford it and who rely on the non-Security Copilot features, leaving us to miss out.

Only time will tell if there's a better cost model on the horizon. Until then, even though Security Copilot and the new agents are very cool and potentially extremely useful, there's no way I can justify using them for my clients.