Virtualization I/O: Blended and Not on The Rocks

Unless you have been stuck on a remote tropical island without an Internet connection for the past few years, you couldn't help but notice that every storage vendor has introduced at least one solution for virtualization. Putting aside the veracity of any superiority claims, the number of virtualization offerings from storage vendors is a key indicator that storage in the virtualized world is different.

What makes it so? Well, one answer is surprisingly simple: the disk drive.

The hard disk drive, an assembly of spinning platters and swiveling actuators (affectionately referred to as "rotating rust"), is one of the last mechanical devices found in modern computer systems. It also happens to be the primary permanent data storage technology today. Advances in disk drive technology have been nothing short of amazing -- as long as we are talking about capacities and storage densities. When it comes to the speed of data access, the picture is far from rosy.

Oops

Consider this: back in the 1980s, I played with Seagate ST412 drives that had been smuggled into the USSR through some rusty hole in the Iron Curtain. Those drives were tough enough to survive that ordeal. They could store about 10 megabytes of data, with an average seek time of 85 milliseconds; average rotational latency added another 8 ms. Fast forward 25 years: modern drive capacities go into terabytes in half the cubic volume. That's an increase of over 5 (five!) orders of magnitude. Access time? About 10 ms, an improvement of less than one order of magnitude. One can move the disk head actuator arm and spin the platters only so fast. Capacity has improved by a factor more than 10,000 times larger than access time has.
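For anyone who wants to check the arithmetic, here is a back-of-the-envelope sketch in Python. The ST412 figures are the ones quoted above; the 1 TB modern capacity is an assumed round number standing in for "terabytes", so treat the result as a ballpark rather than a measurement.

```python
import math

# Figures from the text; the 1 TB modern capacity is an assumed round number.
OLD_CAPACITY_BYTES = 10 * 10**6   # ~10 MB, ST412-class drive
NEW_CAPACITY_BYTES = 10**12       # assumption: a 1 TB modern drive
OLD_ACCESS_MS = 85 + 8            # average seek + average rotational latency
NEW_ACCESS_MS = 10                # "about 10 ms"

capacity_gain = NEW_CAPACITY_BYTES / OLD_CAPACITY_BYTES
access_gain = OLD_ACCESS_MS / NEW_ACCESS_MS

print(f"capacity:    {capacity_gain:,.0f}x "
      f"({math.log10(capacity_gain):.2f} orders of magnitude)")
print(f"access time: {access_gain:.1f}x "
      f"({math.log10(access_gain):.2f} orders of magnitude)")
print(f"capacity gain / access gain: {capacity_gain / access_gain:,.0f}")
```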

This is a huge mismatch. We think about disk drives as random access devices. However, disk drives handle random I/O far more slowly than sequential I/O: up to a hundred times more slowly.
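A rough model shows where a penalty of that size can come from. The sketch below assumes a 10 ms access time per random request and a sustained sequential rate of 80 MB/s; both constants and the function name `random_slowdown` are illustrative assumptions, not measurements of any particular drive.

```python
# Both constants are assumptions chosen for illustration, not measurements.
ACCESS_TIME_S = 0.010        # ~10 ms seek + rotational latency per random I/O
SEQUENTIAL_RATE_BPS = 80e6   # assumed sustained sequential rate, bytes/second


def random_slowdown(io_size_bytes: int) -> float:
    """Return how many times slower random I/O is than sequential I/O."""
    transfer_s = io_size_bytes / SEQUENTIAL_RATE_BPS
    # A random request pays the full access time before every transfer;
    # a sequential stream pays (almost) only the transfer time.
    return (ACCESS_TIME_S + transfer_s) / transfer_s


for size in (8 * 1024, 64 * 1024, 1024 * 1024):
    print(f"{size // 1024:>5} KiB requests: "
          f"random is ~{random_slowdown(size):.1f}x slower")
```

Under these assumptions, small requests land close to the hundredfold penalty, and the gap narrows as each request gets larger.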

This fundamental property of disk drives presents a major challenge to storage performance engineers. Filesystems, databases, and the software in storage arrays are all designed to maximize sequential I/O patterns to disk drives and minimize randomness. This is done through a combination of caching, sorting, journaling and other techniques. For example, in VxFS (the Veritas File System) -- one of the most advanced and high-performance storage software products -- about half of the code (tens of thousands of lines) is dedicated to I/O pattern optimization. Other successful products have similar facilities to deal with this fundamental limitation of disk drives.

Enter virtualization: a bad storage problem gets a lot worse

Virtualization exacerbates this issue in a big way. Disk I/O optimizations in operating systems and applications are predicated on the assumption that they have exclusive control of the disks. But virtualization encapsulates operating systems into guest virtual machine (VM) containers, and puts many of them on a single physical host. The disks are now shared among numerous guest VMs, so that assumption of exclusivity is no longer valid. Individual VMs are not aware of this, nor should they be. That is the whole point of virtualization.

The I/O software layers inside the guest VM containers continue to optimize their individual I/O patterns to provide the maximum sequentiality for their virtual disks. These patterns then pass through the hypervisor layer, where they get mixed and chopped in a totally random fashion. By the time the I/O hits the physical disks, it is randomized to the worst-case scenario. This happens with all hypervisors.

This effect has been dubbed the "VM I/O blender". It is so named because the hypervisor blends I/O streams into a mess of random pulp. The more VMs involved in the blending, the more pronounced the effect.
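The blending can be illustrated with a toy simulation. In the sketch below, each VM issues a perfectly sequential stream of block addresses inside its own region of a shared disk, and a stand-in "hypervisor" picks which VM to service next at random. The random pick, the region size, and the function name `blended_sequential_fraction` are all assumptions made for illustration; no real hypervisor scheduler is being modeled.

```python
import random


def blended_sequential_fraction(num_vms, requests_per_vm=1000, seed=0):
    """Fraction of requests at the physical disk that directly follow
    the previous request's block address (i.e. are still sequential)."""
    rng = random.Random(seed)
    region_size = 1_000_000  # assumed blocks per virtual disk
    # Each VM reads sequentially, starting at the base of its own region.
    next_block = [vm * region_size for vm in range(num_vms)]
    remaining = [requests_per_vm] * num_vms
    active = list(range(num_vms))

    previous = None
    sequential = 0
    total = 0
    while active:
        vm = rng.choice(active)  # assumption: random interleaving of VMs
        block = next_block[vm]
        next_block[vm] += 1
        remaining[vm] -= 1
        if remaining[vm] == 0:
            active.remove(vm)
        if previous is not None and block == previous + 1:
            sequential += 1
        previous = block
        total += 1
    # The first request has no predecessor, so it is excluded.
    return sequential / (total - 1)


for n in (1, 2, 4, 8, 16):
    print(f"{n:>2} VMs: {blended_sequential_fraction(n):.0%} sequential")
```

In this toy model a single VM produces a fully sequential stream, and the sequential fraction falls off roughly as 1/N as more VMs are added, even though every VM is individually well behaved.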

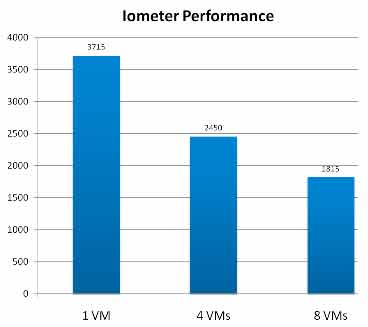

Figure 1 clearly shows the effects of the VM I/O blender on overall performance. It plots the combined throughput of a physical server as the number of guest VMs grows, with each VM identically configured and running an excellent disk benchmark tool called Iometer. The configuration is neither memory nor CPU bound, by the way.

Figure 1. As we add more VMs, combined throughput drops dramatically.

The performance of a single VM is almost identical to that of a non-virtualized server. As we add more VMs, the combined throughput of the server decreases quite dramatically. At 8 VMs it is about half that of a single VM and it goes downhill from there. This is an experiment you can try at home to see the results for yourself.

Performance evaluation and tuning have always been among the most challenging aspects of IT, with storage performance management rightly attaining a reputation as a black art. Storage vendors tend to focus attention on such parameters as total capacity, data transfer rates, and price per TB. As important as these are, they do not paint the complete picture required to fully understand how a given storage system will perform in a virtualized environment.

The somewhat ugly truth is that the only way to achieve the required performance in a traditional storage architecture is to increase the disk spindle count and/or cache size, which can easily push the total system cost beyond reasonable budget limits. If you don't have budget constraints, then you don't have a problem. But if you have an unlimited budget, you should be relaxing on that tropical island without the Internet connection that I mentioned at the beginning.

For the unfortunate rest of us, it makes a lot of sense to look at new approaches to control storage and deal with the I/O blender at the source -- in the hypervisor.

This has been a discussion of mechanical disk drives. Solid state disks (SSDs) do change the equation, but are not magic bullets. SSDs come with a number of nontrivial caveats of their own. But this is a subject for another post.

Posted by Alex Miroshnichenko on 08/11/2010 at 12:48 PM