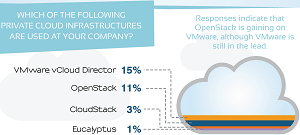

New research suggests Amazon AWS is the platform of choice for public clouds while VMware is being chased by open source contender OpenStack in the private cloud arena.

Commissioned by Database-as-a-Service (DBaaS) start-up Tesora, the "Database Usage in the Public and Private Cloud: Choices and Preferences" survey garnered more than 500 responses from North American open source developer communities.

"OpenStack is catching up to VMware as the preferred private cloud platform even though it has only been around for a few years," Tesora said in an accompanying statement today. "Of organizations that are using a private cloud, more than one-third now use OpenStack."

Tesora says OpenStack deployments are gaining on VMware

(source: Tesora)

The database service being developed by Tesora is based on the open source Trove DBaaS project introduced in the April "Icehouse" release of the open source OpenStack cloud platform. Tesora -- formerly called ParElastic -- launched in February and last week open sourced its database virtualization engine (DVE). It now offers a free community edition and a supported enterprise edition of DVE. The company says it's also developing what it claims is the first enterprise-class, scalable DBaaS platform for OpenStack.

In the Tesora-commissioned survey, 15 percent of respondents reported that VMware vCloud Director was being used as a private cloud infrastructure at their organizations, followed by OpenStack at 11 percent, CloudStack at 3 percent and Eucalyptus at 1 percent. Tesora said that of all organizations using a private cloud, more than one-third use OpenStack.
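The "more than one-third" figure can be roughly checked against the reported percentages. This is a minimal sketch under two assumptions that are ours, not Tesora's stated method: the four platforms listed cover every private cloud user, and no respondent named more than one platform.

```python
# Percentage of all respondents naming each platform as their
# private cloud infrastructure, as reported in the Tesora survey.
reported = {
    "VMware vCloud Director": 15,
    "OpenStack": 11,
    "CloudStack": 3,
    "Eucalyptus": 1,
}


def share_among_private_cloud_users(platform: str, shares: dict[str, int]) -> float:
    """Return one platform's share of private cloud users.

    Assumes (hypothetically) that the listed platforms account for all
    private cloud users and that each respondent named only one platform.
    """
    total = sum(shares.values())
    return shares[platform] / total


openstack_share = share_among_private_cloud_users("OpenStack", reported)
print(f"OpenStack share of private cloud users: {openstack_share:.1%}")
```

Under those assumptions OpenStack accounts for 11 of 30 percentage points, about 36.7 percent, which is consistent with Tesora's claim. If respondents could name several platforms, the real figure would differ.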

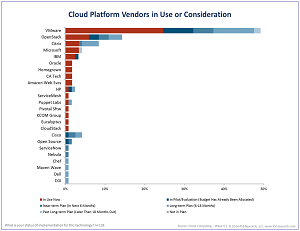

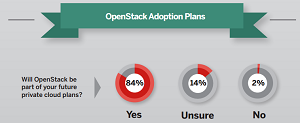

A report caveat noted: "This is a survey of open-source software developers, and surveys of other groups will have different results. For example, a report by 451 Research, 'The OpenStack Tipping Point -- Will It Go Over the Edge?' (May 2014) shows a somewhat wider gap between VMWare and OpenStack, while a 2013 IDG Connect survey (funded by Red Hat) found that fully 84 percent of IT decision-makers plan on implementing OpenStack at some point."

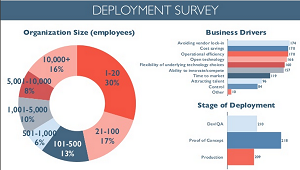

Tesora noted that OpenStack's most recent survey of its own members, taken last month at an Atlanta conference, found more than 500 OpenStack clouds in production.

451 Research shows VMware's lead over OpenStack is larger

(source: 451 Research)

IDG Connect report shows wider planned OpenStack adoption

(source: Red Hat)

OpenStack's user survey deployment chart

(source: OpenStack)

In the public cloud arena, Amazon AWS was reported as the infrastructure being used the most, garnering 24 percent of responses, followed by Google Compute Engine (GCE) at 16 percent, Microsoft Azure at 8 percent, Rackspace at 6 percent and IBM SoftLayer at 4 percent. Tesora said that while Amazon AWS coming in at No. 1 was not surprising, "what is a surprise is that Google GCE was fairly close behind at 16 percent even though it has only been generally available since December 2013."

Tesora said another expected result in the survey findings was that Microsoft SQL Server was the No. 1 database in use for public and private clouds, mentioned by 57 percent of respondents. MySQL was second at 40 percent, followed by Oracle at 38 percent and MongoDB (the most popular NoSQL choice) at 10 percent.

For both public and private clouds, Web services was the most-reported workload, followed by quality assurance and databases. Cost savings was the primary reason given for implementing a private cloud, followed closely by operational efficiencies and integration with existing systems.

"While more than half of all respondents indicated that they are likely or very likely to use their company’s DBaaS on a private cloud, 31 percent plan on implementing or have implemented DBaaS in a private cloud," noted Tesora in reporting on further survey findings. "Of the people who are interested in DBaaS, nearly all of them were looking for relational DBaaS. Roughly a third (34 percent) of those people were interested in NoSQL DBaaS."

The survey report also made the following statements:

- The results suggest that relational databases still dominate despite rapid adoption of NoSQL solutions by high-profile enterprises like Twitter and Facebook.

- Only 5 percent of respondents were doing any kind of Big Data/data mining/Hadoop workloads, suggesting that the marketing hype around 'Big Data' may be ahead of reality.

- The results also indicate how mainstream cloud computing has become, with more than half of respondents reporting that their organizations are using public clouds, while 49 percent are using private clouds.

Tesora noted that respondents were early adopters of new technology and the survey provided a snapshot of OpenStack and DBaaS in early stages of development. "It's encouraging to see the traction of OpenStack in this early adopter segment of the private cloud market," said Ken Rugg, CEO of Tesora. "These findings are important because they are leading indicators of the kinds of technology and architecture decisions we can expect to see as private cloud adoption explodes and OpenStack matures and more vendors and customers go down this open source path."

Tesora used SurveyMonkey to conduct the survey (available for download after registration), while The Linux Foundation, MongoDB and Percona helped distribute it.

Posted by David Ramel on 06/18/2014 at 8:36 AM

Red Hat's brand-new, major Linux OS enterprise release is all about the cloud.

The company is positioning Red Hat Enterprise Linux 7 (RHEL 7) as going beyond an old-fashioned commodity OS to be instead a next-generation "catalyst for enterprise innovation." It's a redefined tool to help companies transition to a converged datacenter encompassing bare-metal servers, X as a Service offerings and virtual machines (VMs) -- with a focus on open hybrid cloud implementations.

In case there's any doubt about the cloud emphasis, Red Hat used the term 23 times in its announcement yesterday.

"Answering the heterogeneous realities of modern enterprise IT, RHEL 7 offers a cohesive, unified foundation that enables customers to balance modern demands while reaping the benefits of computing innovation, like Linux Containers and Big Data, across physical systems, virtual machines and the cloud -- the open hybrid cloud," the company said.

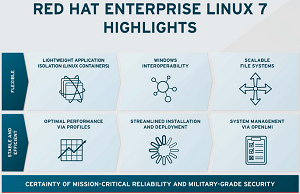

The New OS (source: Red Hat Inc.)

New features in the OS -- practically a ubiquitous standard in Fortune 500 datacenters -- include "enhanced application development, delivery, portability and isolation through Linux Containers, including Docker, across physical, virtual, and cloud deployments, as well as development, test, and production environments," the company said.

XFS is the new default file system, with the capability to scale volumes to 500TB. Ext4, the previous default and now an option, had been limited to 16TB file systems; the new release raises that limit to 50TB.

Also included are tools for application runtimes and development, delivery and troubleshooting.

The new release is designed to coexist in heterogeneous environments, as exemplified by cross-realm trust that allows secure access from users on Microsoft Active Directory across Windows and Linux domains.

Other features as listed by Red Hat include:

- A new boot loader and redesigned graphical installer.

- A Technology Preview of a kernel patching utility, which allows users to patch the kernel without rebooting.

- Innovative infrastructure components such as systemd, a standard for modernizing the management of processes, services, security and other resources.

- The Docker environment, which allows users to deploy an application as a lightweight and portable container.

- Built-in performance profiles, tuning and instrumentation for optimized performance and scalability.

- The OpenLMI project, a common infrastructure for the management of Linux systems.

- Red Hat Software Collections, which provides a set of dynamic programming languages, database servers and related software.

- Enhanced application isolation and security enacted through containerization to protect against both unintentional interference and malicious attacks.

A new RHEL edition to be released later -- Atomic Host -- was announced during a virtual keynote presentation (registration required to view on-demand) by Red Hat executive Jim Totton. "It's a lightweight version of the same Red Hat Enterprise Linux operating system, tuned to run in container environments," he said. "So this is a lightweight version of RHEL, but it's the same RHEL as we've been talking about."

Totton also discussed Red Hat Enterprise Virtualization technology, in which a hypervisor is engineered as part of the RHEL kernel. He said it can provide the virtual datacenter fabric for hosting RHEL as a guest. Totton also highlighted the Red Hat Enterprise Linux OpenStack Platform "for really deploying the flexible and agile private cloud and public cloud type of environments."

RHEL 7 got a thumbs-up from Forrester Research analyst Richard Fichera, who wrote that it "continues the progress of the Linux community toward an OS that is fully capable of replacing proprietary RISC/Unix for the vast majority of enterprise workloads. It is apparent, both from the details on RHEL 7 and from perusing the documentation on other distribution providers, that Linux has continued to mature nicely as both a foundation for large scale-out clouds, as well as a strong contender for the kind of enterprise workloads that previously were only comfortable on either RISC/Unix systems or large Microsoft Server systems. In effect, Linux has continued its maturation to the point where its feature set and scalability begin to look like and feel like a top-tier Unix."

Oh, it also works in that cloud thing.

Posted by David Ramel on 06/11/2014 at 11:10 AM

Amid vendor squabbling among companies such as VMware and Cisco about competing next-generation data center technologies, HP was up for a little Cisco-bashing of its own when it announced new cloud computing and software-defined networking (SDN) products at this week's HP Discover conference in Las Vegas.

Featured in the many new offerings were: the HP Virtual Cloud Networking SDN Application, an open standards-based network virtualization solution; the HP Helion Self-Service HPC, a private cloud product running on HP's OpenStack-based cloud platform; and the HP FlexFabric 7900 switch series, which supports the OpenFlow standard managed by the Open Networking Foundation (ONF) and the Virtual Extensible LAN (VXLAN) specification originally created by VMware, Arista Networks and Cisco.

In describing various new products, HP exec Kash Shaikh led off a blog post with a reference to Gartner analyst Mark Fabbi's recent disparaging remarks about Cisco's datacenter networking strategy, wherein he said "a reactive vendor isn't a leader."

"We couldn't agree more," said Shaikh, senior director of product and technical marketing. "We announced our complete SDN strategy almost two years back and we have been leading and consistently delivering on this strategy to take you on a journey that doesn’t require forklift upgrades."

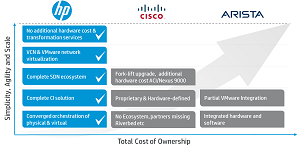

HP's Technology Comparison (source: Hewlett-Packard Co.)

In case there was any doubt about Shaikh's target, he included a product comparison chart that indicates Cisco's solution requires: "fork-lift upgrade, additional hardware cost ACI/Nexus 9000." He also noted HP "doesn't require specific switch hardware for its cloud-based SDN solution."

Networking powerhouse Cisco has been criticized for an inconsistent approach to the advent of SDN technology, eventually developing its own flavor of the same -- Application Centric Infrastructure (ACI) -- with proprietary components such as Nexus 9000 switches.

Of course, Cisco has been a willing participant in the back-and-forth, with CEO John Chambers recently stating the networking industry was in for a "brutal consolidation" that will see competitors such as HP fail and vowing the company was going to win back customers and "crush" competitors such as VMware.

Anyway, for those interested in speeds and feeds more than snarky sniping, HP's announcements are summarized here:

- The HP Virtual Cloud Networking SDN application integrates with the HP Virtual Application Networks SDN controller and uses OpenFlow for dynamic deployment of policies on virtual networks such as those using the Open vSwitch implementation and physical networks from HP and others, HP said.

"VCN [Virtual Cloud Networking] provides a multitenant network virtualization service for KVM and VMware ESX multi-hypervisor datacenter applications, offering organizations both open source as well as proprietary solutions," the company said. "Multitenant isolation is provided by centrally orchestrated VLAN or VXLAN-based virtual networks, operating over standard L2 or L3 datacenter fabrics."

The SDN application will come out in August with the company's HP Helion OpenStack cloud platform release.

- The HP Helion Self-Service HPC is designed to make high-performance computing (HPC) resources easier to use through an optimized private cloud.

"This new solution provides a self-service portal that makes using HPC resources as easy as using a familiar application -- thereby making them accessible for more staff," the company said. "The solution also makes HPC accessible and manageable for organizations by allowing them the choice to manage it themselves or have HP HPC experts implement and manage it for them through a pay-for-use model that lets them stay focused on delivering products and innovation."

- The HP FlexFabric 7900 switch series, available now with a $55,500 price tag, is described as a compact modular switch targeting virtualized datacenters that supports VXLAN, Network Virtualization using Generic Routing Encapsulation (NVGRE) and OpenFlow. HP said the switch supports open, standards-based programmability via its SDN App Store and SDK. The switch federates HP FlexFabric infrastructure via a VMware NSX virtual overlay. It provides 10GbE, 40GbE and 100GbE interfaces.

HP also made a number of other product announcements, including: new HP Apollo HPC systems; enhanced all-flash HP 3PAR StoreServ and HP StoreOnce Backup applications; an enhanced HP ConvergedSystem for Virtualization platform for IT as a Service; an HP Trusted Network Transformation service to help customers with on-the-go network upgrades; and an HP Datacenter Care Flexible Capacity pay-as-you-go model.

The company said the new offerings "enable a software-defined datacenter that is supported by cloud delivery models and built on a converged infrastructure that spans compute, storage and network technologies."

Shaikh was more pointed in his summary of the market, including one more shot at Cisco. "While some niche vendors only address certain aspects of datacenter and cloud, others are taxing customers with a series of disjointed, proprietary and expensive hardware-defined fork-lift upgrades," he said. "Meanwhile, HP has been leading in software-defined networking since its beginning in 2007 and has the most comprehensive SDN offering."

Posted by David Ramel on 06/11/2014 at 11:41 AM

A new report shows IT pros believe using cloud services increases the risk of data breaches that -- with an estimated cost of about $201 per compromised record -- can easily cost victimized companies millions of dollars.

Furthermore, IT and security staffers believe they have little knowledge of the scope of cloud services in use at their companies and are unsure of who is responsible for securing these services, according to a study, "Data Breach: The Cloud Multiplier Effect," conducted by Ponemon Institute LLC for Netskope, a cloud application analytics and policy enforcement company.

Netskope yesterday released what it described as the first-ever research conducted to estimate the cost of a data breach in the cloud, based on a survey of 613 IT and security professionals. They were asked to estimate the probability of a data breach involving 100,000 or more customer records at their organizations under current circumstances and how the increased use of cloud services would affect that probability. The conclusion, Netskope said, is that increased cloud use could triple the risk of such a data breach.

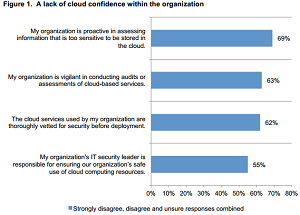

IT and security pros show little trust in their organizations' security practices.

(source: Netskope)

And that breach could be quite expensive for a company, according to the study, which extrapolated the potential cost by using data from a previous Ponemon Institute report released last month, "Cost of a Data Breach." It pegged the cost of each lost or stolen customer record at about $201, meaning a 100,000-customer breach could cost more than $20 million.
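The $20 million estimate is a per-record multiplication. This minimal sketch assumes, as the extrapolation described above appears to, that breach cost scales linearly with the number of records lost; the function name is ours, for illustration only.

```python
# Ponemon's "Cost of a Data Breach" estimate per lost or stolen record.
COST_PER_RECORD_USD = 201


def breach_cost(records: int, cost_per_record: float = COST_PER_RECORD_USD) -> float:
    """Estimate total breach cost, assuming cost grows linearly with records."""
    return records * cost_per_record


cost = breach_cost(100_000)
print(f"${cost:,.0f}")  # $20,100,000
```

A 100,000-record breach therefore comes to about $20.1 million, just over the "more than $20 million" cited. Real breach costs need not scale linearly, so treat this as the same rough extrapolation the study makes.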

"Imagine then if the probability of that data breach were to triple simply because you increased your use of the cloud," said Sanjay Beri, CEO and founder of Netskope. "That's what enterprise IT folks are coming to grips with, and they've started to recognize the need to align their security programs to account for it. The report shows that while there are many enterprise-ready apps available today, the uncertainty from risky apps is stealing the show for IT and security professionals. Rewriting this story requires contextual knowledge about how these apps are being used and an effective way of mitigating risk." That, of course, is what Netskope seeks to sell to customers.

Several factors contribute to the general perception that an organization's high-value intellectual property and customer data are at higher risk in today's typical corporate environment when the use of cloud services increases. For example, respondents said their networks are running cloud services unknown to them, they aren't familiar with cloud service provider security practices and they believe their companies don't pay enough attention to the implementation and monitoring of security programs.

"Cloud security is an oxymoron for many companies," the report stated. "Sixty-two percent of respondents do not agree or are unsure that cloud services are thoroughly vetted before deployment. Sixty-nine percent believe there is a failure to be proactive in assessing information that is too sensitive to be stored in the cloud."

Also worrisome is the percentage of business-critical applications entrusted to the cloud. Survey respondents estimated that about 36 percent of such apps are cloud-based, yet nearly half of them are invisible to IT. Respondents also said 45 percent of all applications are based in the cloud, but IT lacks visibility into half of them.
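Combining the two estimates gives a rough size for IT's blind spot. This is a minimal sketch that treats "nearly half" and "half" as exactly 0.5, which is our approximation rather than a figure from the report.

```python
def invisible_share(cloud_share: float, invisible_fraction: float) -> float:
    """Fraction of ALL apps that are both cloud-based and invisible to IT."""
    return cloud_share * invisible_fraction


# "Nearly half" is approximated as 0.5 here.
critical = invisible_share(0.36, 0.5)  # business-critical apps
all_apps = invisible_share(0.45, 0.5)  # all applications
print(f"Business-critical apps invisible to IT: {critical:.0%}")  # 18%
print(f"All apps invisible to IT: {all_apps:.1%}")  # 22.5%
```

By this estimate, nearly one in five business-critical applications may be running where IT cannot see it.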

Similar cloud-related security research conducted earlier by Ponemon Institute indicated that 53 percent of organizations were trusting cloud providers with sensitive data. Only 11 percent of respondents in that survey said they didn't plan on using cloud services in the next couple of years.

According to yesterday's report, respondents also don't seem to trust their cloud service providers much. "Almost three-quarters (72 percent) of respondents believe their cloud service provider would not notify them immediately if they had a data breach involving the loss or theft of their intellectual property or business confidential information, and 71 percent believe they would not receive immediate notification following a breach involving the loss or theft of customer data," the report stated.

Netskope listed the following highlights of the study:

- Respondents estimate that every 1 percent increase in the use of cloud services will result in a 3 percent higher probability of a data breach. This means that an organization using 100 cloud services would only need to add 25 more to increase the likelihood of a data breach by 75 percent.

- More than two-thirds (69 percent) of respondents believe that their organization is not proactive in assessing information that is too sensitive to be stored in the cloud.

- 62 percent of respondents believe the cloud services in use by their organization are not thoroughly vetted for security before deployment.
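The first highlight's arithmetic treats the relationship as linear: adding 25 services to 100 is 25 percent growth, and multiplying by 3 gives a 75 percent higher probability of a breach. This minimal sketch assumes that 3-to-1 multiplier holds linearly at any scale; the report presents it as respondents' estimate, not a measured law, and the function name is hypothetical.

```python
# Respondents' estimate: +1% cloud use -> +3% probability of a data breach.
RISK_MULTIPLIER = 3.0


def relative_risk_increase(current_services: int, added_services: int,
                           multiplier: float = RISK_MULTIPLIER) -> float:
    """Percent increase in breach probability for a growth in cloud services.

    Assumes the multiplier applies linearly to the percentage growth in the
    number of cloud services in use.
    """
    growth_pct = added_services / current_services * 100
    return growth_pct * multiplier


print(relative_risk_increase(100, 25))  # 75.0
```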

"We've been tracking the cost of a data breach for years but have never had the opportunity to look at the potential risks and economic impact that might come from cloud in particular," said Dr. Larry Ponemon, chairman and founder of Ponemon Institute. "It's fascinating that the perceived risk and economic impact is so high when it comes to cloud app usage. We'll be interested to see how these perceptions change over time as the challenge becomes more openly discussed and cloud access and security broker solutions like Netskope become more known to enterprises."

The 26-page report is available in a PDF download after free registration.

Posted by David Ramel on 06/05/2014 at 8:58 AM

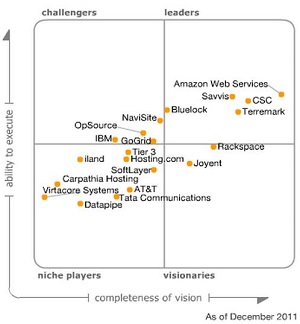

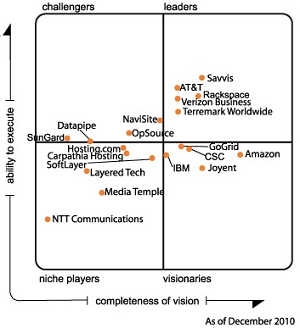

For the fifth straight year, Amazon Web Services (AWS) was ranked as the top cloud Infrastructure as a Service (IaaS) vendor in the "magic quadrant" report released by research firm Gartner Inc.

However, it's being chased by Microsoft -- new to the party but coming on strong -- and Google, which made the report for the first time this year.

Gartner's magic quadrant report uses exhaustive research to place vendors in a four-square chart with axes based on "ability to execute" and "completeness of vision." The top-right square contains "leaders" based on cumulative scores. The bottom-left vendors are at the opposite end, "niche players." Top-left is "challengers" and bottom-right is "visionaries." Figure 1 shows the results of the May 2014 report.

Figure 1. Gartner 2014 Magic Quadrant IaaS report

(source: Gartner Inc.)
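The four-square layout described above amounts to a two-threshold classification. This is a minimal sketch, assuming vendor scores normalized to [0, 1] and a hypothetical 0.5 midpoint on each axis; Gartner does not publish its scoring in this form, and the scores in the example are invented.

```python
from enum import Enum


class Quadrant(Enum):
    LEADERS = "leaders"              # top-right
    CHALLENGERS = "challengers"      # top-left
    VISIONARIES = "visionaries"      # bottom-right
    NICHE_PLAYERS = "niche players"  # bottom-left


def classify(execution: float, vision: float, midpoint: float = 0.5) -> Quadrant:
    """Place a vendor in a quadrant from two normalized scores.

    Vertical axis: ability to execute. Horizontal axis: completeness of
    vision. The midpoint cut-off is a hypothetical simplification.
    """
    high_execution = execution >= midpoint
    high_vision = vision >= midpoint
    if high_execution and high_vision:
        return Quadrant.LEADERS
    if high_execution:
        return Quadrant.CHALLENGERS
    if high_vision:
        return Quadrant.VISIONARIES
    return Quadrant.NICHE_PLAYERS


# Hypothetical scores, not taken from the report.
print(classify(execution=0.9, vision=0.8).value)  # leaders
print(classify(execution=0.3, vision=0.7).value)  # visionaries
```

Because placement depends on both axes, a vendor like Google can rank high on vision yet land outside the leaders square if its execution score falls below the cut-off.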

AWS was again at the top in the leaders square, joined by Microsoft. Last year (in an August report), CSC was the only other company in the leaders square. This year, it changed places with Microsoft, which moved up from the visionaries quadrant. Google debuted on the chart in the No. 3 slot on the vision axis, though it was ranked eighth on the execution axis. Although Google has been offering its App Engine service since 2008, Gartner said it didn't enter the IaaS arena until the December 2013 launch of its Google Compute Engine.

Likewise, Microsoft has been in the cloud business for some time with its Azure service, but the company was considered by Gartner to be in the Platform as a Service (PaaS) market until the April 2013 debut of Azure Infrastructure Services.

VMware was the second vendor to make the chart for the first time in the latest report. The only companies dropped were removed because of acquisitions: SoftLayer (now part of IBM) and Savvis and Tier 3 (both now part of CenturyLink).

The continued dominance of AWS was evident in Gartner's assessment of its strengths. Enjoying a diverse customer base and wide range of use cases, Gartner said AWS "is the overwhelming market share leader, with more than five times the cloud IaaS compute capacity in use than the aggregate total of the other 14 providers in this Magic Quadrant. It is a thought leader; it is extraordinarily innovative, exceptionally agile, and very responsive to the market. It has the richest array of IaaS features and PaaS-like capabilities, and continues to rapidly expand its service offerings. It is the provider most commonly chosen for strategic adoption."

However, the research firm cautioned that the market is still maturing and evolving, and things may not stay the same.

"AWS is beginning to face significant competition -- from Microsoft in the traditional business market, and from Google in the cloud-native market," Gartner said. "So far, it has responded aggressively to price drops by competitors on commodity resources. However, although it is continuously reducing its prices, it does not commodity price services where it has superior capabilities. AWS currently has a multiyear competitive advantage, but is no longer the only fast-moving, innovative, global-class provider in the market."

Here are the quadrant charts from previous years:

August 2013 (source: Gartner Inc.)

October 2012 (source: Gartner Inc.)

December 2011 (source: Gartner Inc.)

December 2010 (source: Gartner Inc.)

Posted by David Ramel on 06/04/2014 at 6:59 PM

Fresh on the heels of IBM's offering of mobile, cloud-based Big Data analytics in its cloud marketplace, the company today unveiled new, customized, cloud-based business solutions to provide services such as counter fraud, digital commerce and -- of course -- mobile and analytics.

IBM Cloud Business Solutions are industry-specific, subscription-based packages combining components such as consulting, prebuilt assets, software, support and cloud infrastructure from IBM's SoftLayer acquisition. The company said customers pay one up-front fee followed by monthly payments on contracts that average about three years.
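The pricing model described above reduces to an up-front fee plus a run of monthly payments. This is a minimal sketch; the 36-month default reflects the roughly three-year average contract IBM cited, while the dollar amounts are hypothetical and not IBM pricing.

```python
def total_contract_cost(upfront_fee: float, monthly_payment: float,
                        months: int = 36) -> float:
    """Total subscription cost: one up-front fee plus monthly payments.

    The 36-month default mirrors the ~three-year average contract length;
    any fee amounts passed in are illustrative assumptions.
    """
    return upfront_fee + monthly_payment * months


# Hypothetical figures for illustration only.
print(total_contract_cost(upfront_fee=50_000, monthly_payment=10_000))  # 410000
```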

"This approach recognizes the mandate for speed and time to value, along with the requirement of clients to personalize business solutions to their own processes and culture and deploy them via the cloud," the company said in a statement. "For example, a healthcare provider could start by moving their existing solutions to the cloud and then add prebuilt IBM assets to counter medical fraud or address a range of additional business priorities. The solution would then be customized with consulting services and support aimed at transforming their business."

The company plans on implementing 20 such solutions, with the 12 initial "X as a Service" offerings including:

- Customer analytics: Designed to enhance customer relations, this involves helping companies make correct decisions at appropriate times, predicting customer behavior through data analysis and strengthening engagement.

- Predictive asset optimization: This provides visibility into the health of equipment so companies can plan to deal with hardware failures.

- Counter fraud: Coming from IBM Research, this is designed to prevent various kinds of fraud, waste, abuse and errors surrounding data. Analytics inspect a customer's data and internal behavior, then compare the customer with peers to help uncover suspicious activity by detecting anomalies.

- Customer data: This service aims to improve marketing and planning through the use of analytics about internal and external data sources.

- Mobile: This will improve engagement with mobile employees, customers and partners by providing business designs, strategies, and models for development, along with managed services to help customers implement Agile and iterative development.

- Digital commerce: This "allows business leaders to deliver exceptional digital experiences and accelerate time to market for a range of customer engagement solutions from order capture to fulfillment."

Other initial services include: smarter buildings; smarter asset management; marketing management; emergency management; digital environment for adaptive learning; and care coordination.

IBM said these managed services, based on proven assets and patterns, will be available in a private or hybrid cloud setup. Customers can choose to eventually transition the services to in-house products, the company said.

"Our clients view cloud as the catalyst for entirely new business models, not just technology models," said CTO Kelly Chambliss in a statement. "The decision to use cloud to support business priorities is being strongly influenced -- if not entirely determined -- by leaders across the C-suite. IBM Cloud Business Solutions represent the next evolution in high-value business services, in line with the expectations and priorities of our clients. We're providing access to the best IBM has to offer -- from cloud to data analytics -- in a single, simple, highly accessible package."

Posted by David Ramel on 05/29/2014 at 9:44 AM

You can implement all the security precautions you want, but data center and cloud outages are often just accidents from within, as happened yesterday when an operator's "fat finger" brought down a data center operated by cloud provider Joyent Inc.

"Due to an operator error, all compute nodes in us-east-1 were simultaneously rebooted," the company said in a support notification. The problem was apparently cleared up in about an hour, with the company providing a 6:45 p.m. EDT update that all compute nodes and virtual machines were back online.

The "high-performance cloud infrastructure and Big Data analytics company" operates three data centers in the U.S., including the one that went down in northern Virginia. The others are in the Bay Area and Las Vegas. The company lists nearly 30 corporate customers on its Web site, including ModCloth, Voxer, Wanelo, Quizlet and Digital Chocolate.

"It should go without saying that we're mortified by this," said Joyent CTO Bryan Cantrill in a post on Hacker News. "While the immediate cause was operator error, there are broader systemic issues that allowed a fat finger to take down a data center."

Although the exact duration of the outage wasn't specified, recovery apparently took longer than hoped. "Some compute nodes are already back up, but due to very high load on the control plane, this is taking some time," the initial support notification said.

Cantrill apparently wasn't happy with the recovery process.

"As soon as we reasonably can, we will be providing a full postmortem of this: how this was architecturally possible, what exactly happened, how the system recovered, and what improvements we are/will be making to both the software and to operational procedures to assure that this doesn't happen in the future (and that the recovery is smoother for failure modes of similar scope)," he said in his Hacker News post.

Yesterday's outage wasn't the first experienced by the company, as a Hacker News poster complained that Joyent's server where he hosted some Web sites and mail servers was down for two days in February 2011.

Despite all the attention paid since then to high availability, redundancy and failover systems, notable cloud outages continue to occur. The Joyent outage happened less than two weeks after Adobe Systems Inc.'s Creative Cloud services were down for about a day because of a database maintenance error, leaving designers unable to access their tools (Adobe had announced the previous year it was discontinuing packaged software or downloaded programs in favor of cloud-only services).

And although neither that incident nor the Joyent outage was as serious as well-publicized security breaches, such as the one Adobe experienced last October when 38 million accounts were compromised, such outages continue to frustrate users and damage the reputations of individual cloud providers and the cloud-hosting industry in general.

For example, in February of last year, Microsoft's Azure cloud platform suffered a worldwide storage outage attributed to an expired SSL certificate. Some eight months later, another Azure outage of more than 20 hours was attributed to a system subcomponent.

And just last week, Microsoft experienced a less severe issue in which some customers of its compute cloud service apparently had access problems, according to information on the company's Azure Service Dashboard. No causal details were given for the incident, which was described as a "performance degradation" rather than a service interruption, but it suggests that problems of all kinds may be going unreported in the mainstream media.

We'll wait for more details on the Joyent outage and the steps being taken to prevent a recurrence, but outages like this will certainly continue. As one commenter on Hacker News said about Cantrill's post: "[Stuff] happens. You deal with it, then do what you can to keep it from happening again."

Then some more stuff happens.

Posted by David Ramel on 05/28/2014 at 9:44 AM

IBM yesterday targeted four of today's hottest transformative technologies -- cloud, Big Data, social and mobility -- with its new IBM Concert software.

The new offering in the IBM Cloud Marketplace is designed to give more enterprise staffers access to data analytics wherever they might be working, from whatever device they might be using.

At its Vision 2014 conference in Orlando, IBM said the new analytics software "can manage the increasing volume and complexity of processes and tasks, support an increasingly mobile workforce, and emphasize social collaboration as an integral part of decision making."

IBM Concert in action (source: IBM)

"By accessing this technology in the cloud, business users can now easily view, understand and interact with specific performance insights to more easily determine what actions to take and when," the company continued. "Remote employees can also contribute and update data immediately via mobile devices, improving accuracy and providing real-time planning and forecasting."

The new marketplace item features guided business processes, customized and personalized task lists, and other services that users access through a GUI. Users can get data such as critical metrics and key performance indicators (KPIs) over the Web and from a range of mobile devices.

For example, personalized task lists let users see highlighted priorities and step through projects, be alerted to important, time-sensitive tasks and see in-context performance metrics and KPIs with accompanying charts, graphs and tables.

IBM also previewed another upcoming analytics solution, Project Catalyst Insight, which is likewise designed to bring data analysis capabilities to more users. The project -- deemed a "pretty amazing data scientist in a box" by one Twitter user -- takes a more automated approach.

"Catalyst Insight automatically builds predictive models and presents those results as interactive visuals with plain-language descriptions," the company said. "Using Catalyst Insight, a marketing manager could use advanced analytics to better understand the drivers of marketing campaign effectiveness without having to wait for a data scientist to prepare the information, develop predictive models and interpret the results."

In yet another announcement, IBM said its OpenPages enterprise software family for risk and compliance management is available on its SoftLayer cloud infrastructure as a managed service.

"OpenPages allows businesses to develop a comprehensive compliance and risk management strategy across a variety of domains including operational risk, financial controls management, IT risk, compliance and internal audits," the company said.

Both Catalyst Insight and OpenPages will be featured among more than 100 other Software-as-a-Service (SaaS) products supported by SoftLayer Technologies Inc., the company's cloud foundation.

IBM said interested users can request a price quote for the IBM Concert offering.

Posted by David Ramel on 05/21/2014 at 9:44 AM

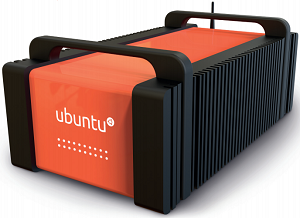

Halloween is a ways off, but the new Orange Box from Canonical Ltd. looks like a tasty treat for users wanting a quick, easy way to get started tinkering with OpenStack cloud infrastructure and Ubuntu.

Encased in a ruggedized black flight-case frame with built-in handles, the bright orange cloud-in-a-box made some waves at the recent OpenStack Summit in Atlanta when introduced by Canonical, the steward of the popular Ubuntu Linux OS distribution.

The Orange Box (source: Canonical Ltd.)

OpenStack is open source software for building private and public clouds, providing an OS to handle compute, networking and storage in the cloud.

The Orange Box is meant to be a learning aid for users getting instruction on OpenStack and other technologies through the Ubuntu Jumpstart training program.

"We are delighted to introduce a new delivery mechanism for Jumpstarts, leveraging the innovative Orange Box," the company said. "We'll deliver an Orange Box to your office, and work with you for two days, learning the ins and outs of Ubuntu, MAAS, Juju, Landscape, and OpenStack, safely within the confines of an Orange Box and without disrupting your production networks.

"You get to keep the box for two weeks and carry out your own testing, experimentation, and exploration of Ubuntu's rich ecosystem of cloud tools. We will join you again, a couple of weeks later, to review what you learned and discuss scaling these tools into your own datacenter, onto your own enterprise hardware."

The 37 lb. box -- or "mobile cluster" -- comes with Ubuntu 14.04 LTS, Metal as a Service (MAAS) and Juju, the service orchestration tool. It contains 16GB of DDR RAM, 10 four-core nodes and 120GB of SSD storage, and features an Intel i5-3427U CPU, an Intel HD4000 GPU and an Intel Gigabit network interface card (NIC). Four nodes include additional SSD storage, and one includes Intel Wi-Fi and a 2TB hard drive.

The appliance, built by Tranquil PC, can deploy OpenStack, Cloud Foundry and Hadoop workloads.

Canonical said the unit could be set up in a conference room to let curious systems administrators and engineers get training from experts in a private sandbox without disrupting operations. They can try different storage alternatives, practice scaling and build and destroy environments as needed.

Beyond the initial two-day Jumpstart training, customers can use the Orange Box for two weeks at a cost of $10,000. Interested users were advised to contact Canonical for more details.

Posted by David Ramel on 05/21/2014 at 9:44 AM

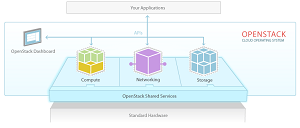

The OpenStack Foundation this week unveiled a one-stop shop for organizations interested in learning about and getting started with the free, open source software for building clouds.

The OpenStack Marketplace opened Monday during the OpenStack Summit conference in Atlanta, featuring vendor offerings in Public Clouds; Distributions and Appliances; Training; Consulting and Systems Integrators; and Drivers.

OpenStack Marketplace public cloud services (source: OpenStack Foundation)

Within those categories, users can explore products and services that best suit their business requirements.

For example, under the Public Clouds category, a Google-powered map shows the number of Infrastructure-as-a-Service (IaaS) offerings available in different regions of the world, accompanied by a list of vendors, such as HP, Rackspace, Internap and several more. Each listing contains a capsule description of the cloud service with a link to explore more details, including OpenStack services offered and their respective versions -- such as Havana -- along with pricing options, supported hypervisors and OSes, data center locations, API coverage and more.

Under the Training tab, which has actually been in operation since last September, more than 250 classes are listed. Summaries of training opportunities are provided, along with upcoming course dates, locations, the level of instruction and more. A separate section shows a consolidated list of upcoming classes.

Marketplace users have the option to read and submit reviews of the services.

The OpenStack OS (source: OpenStack Foundation)

The foundation said all featured vendors are vetted to ensure they meet technical requirements and are transparent about information such as the OpenStack versions supported and the capabilities of their services.

A key part of its mission, the foundation said, is to inform users about the growing OpenStack ecosystem and "cut through the noise" to give users facts and help them make decisions about services to use.

"How to get started with OpenStack is one of the most common questions we receive," said Mark Collier, chief operating officer of the OpenStack Foundation, in a statement. "The answer is that there are many ways to consume OpenStack, whether they are building a cloud, looking to use one by the hour or pursuing a hybrid model. The marketplace is intended to help users make sense of the paths to adoption and find the right mix of products, services and community resources to achieve their goals."

The foundation said it's enacting testing requirements to validate OpenStack technical capabilities and later this year will publish test results on the marketplace.

OpenStack, operating under Apache License 2.0, features many related projects for controlling pools of processing, storage and networking resources in a data center, managed through a dashboard, command-line tools or a RESTful API. It seeks to help organizations quickly launch new products, add features and improve systems internally with open products and services, avoiding technology lock-in. The foundation has more than 16,000 individual members and some 350 organizations supporting it in 138 countries.

OpenStack follows a six-month release cycle that includes frequent development milestones. Icehouse, the most recent stable release, was made available April 17.

Vendors interested in being featured in the marketplace were invited to check out the foundation's branding programs.

Posted by David Ramel on 05/14/2014 at 9:44 AM

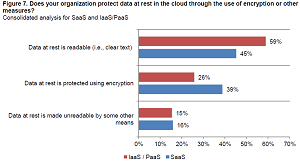

Who is responsible for protecting sensitive or confidential data transferred to the cloud -- the provider or the consumer?

According to a recent survey of 4,275 business and IT managers around the globe, the answer to that question depends on the type of service, be it Software-as-a-Service (SaaS), Infrastructure-as-a-Service (IaaS) or Platform-as-a-Service (PaaS).

"In a SaaS environment, more than half view the cloud provider as being primarily responsible for security and less than a quarter view cloud consumers as being primarily responsible," states the third annual Trends in Cloud Encryption Study. "Only 19 percent see this as a shared responsibility.

"In contrast, nearly half of users of IaaS/PaaS environments for sensitive data view security as a shared responsibility between the user and provider of cloud services. Only 22 percent see this as the sole responsibility of the cloud provider."

The report was commissioned by Thales e-Security and conducted independently by Ponemon Institute LLC, which surveyed companies in the United States, United Kingdom, Germany, France, Australia, Japan, Brazil and Russia.

Protecting data at rest in the cloud (source: Thales e-Security)

The research indicated slow growth in the number of organizations transferring sensitive data to cloud providers -- 53 percent in 2013 as opposed to 49 percent in 2011 -- and in those planning to do so in the next couple of years -- 36 percent compared to 33 percent in 2011. Only 11 percent of respondents do not plan on using any cloud services in the next couple of years, down from 19 percent in 2011.

Three-year trend analysis also shows some growth in the percentage of respondents who know the steps taken by cloud service providers to protect their data. Consolidated results show 35 percent of respondents claimed to know this information, compared to 33 percent in 2012 and 29 percent in 2011.

Meanwhile, fewer respondents believe the cloud has decreased their security posture, with 34 percent answering in the affirmative compared with 35 percent in 2012 and 39 percent in 2011.

Other questions answered in the report, free for download with registration, include:

- Do organizations have the ability to safeguard sensitive or confidential data before or after it is transferred to the cloud?

- Do respondents believe their cloud providers have the ability to safeguard sensitive or confidential data within the cloud?

- Where is encryption applied to protect data that is transferred to the cloud?

- Who manages encryption keys when sensitive and confidential data is transferred to the cloud?

"In our research we consider how encryption is used to ensure sensitive or confidential data is kept safe and secure when transferred to external-based cloud service providers," the report's executive summary stated. "We believe these findings are important because they demonstrate the relationship between encryption and the preservation of a strong security posture in the cloud environment. As shown in this research, organizations with a relatively strong security posture are more likely to transfer sensitive or confidential information to the cloud. In addition, they are more likely to encrypt data at rest in the cloud ecosystem."

Posted by David Ramel on 05/14/2014 at 9:44 AM

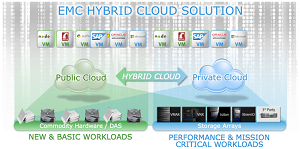

Combining the benefits of public and private clouds was a common theme of announcements coming from EMC Corp.'s conference in Las Vegas this week.

The EMC Hybrid Cloud solution is designed to let customers easily build a hybrid cloud that combines the best aspects of public and private clouds. Public cloud services provide speed and agility, EMC said, while private cloud infrastructure brings control and security.

"The EMC Hybrid Cloud solution is a new end-to-end reference architecture that is built upon EMC's industry-leading private cloud solutions," the company said. "It allows customers to rapidly integrate with multiple public clouds to create a unified hybrid cloud. The solution enhances the performance, security and compliance of an organization's private cloud with compatible, on-demand services from EMC-powered cloud service providers."

The EMC hybrid cloud vision (source: EMC Corp.)

EMC executive Josh Kahn said the solution is built on the software-defined foundation established by EMC and VMware. "On one hand, the EMC Hybrid Cloud solution is the foundation of the hybrid cloud, enabling application workloads to be placed in the right cloud, with the appropriate cost, security and performance needed," Kahn said. "On the other hand, EMC Hybrid Cloud is the bridge between traditional applications and next-generation applications, all deployable across a common software-defined architecture."

While virtualization solutions provided cost savings and agility, customers asked for more, such as a choice of what devices to use, better access to infrastructure and applications, and application flexibility, Kahn said. Providing those benefits entails what EMC calls a "well-run Hybrid Cloud," something that more than 70 percent of customers indicated they will need, he said.

EMC sought to show how easy it is to build such hybrid clouds by having its staffers build one from scratch in 48 hours at the EMC World conference.

Echoing the same public/private cloud theme was the announcement of the EMC Elastic Cloud Storage (ECS) Appliance. "The new EMC ECS Appliance delivers the ease-of-use and agility of a public cloud with the control and security of a private cloud," the company said.

Formerly called Project Nile, the ECS Appliance is described as an infrastructure for hyperscale cloud storage. It provides up to 2.9 petabytes of storage in a single rack and can be clustered to gain exabyte scale.

In his Virtual Geek blog, EMC's Chad Sakac claimed the new appliance delivered better transactional storage and object data services than comparable solutions offered by competitor Amazon Web Services.

Sakac also said the appliance features the same intellectual property as yet another product announced at the conference: ViPR 2.0, a software-defined storage offering. He said the ViPR Controller and ViPR Data Services are software-only and appeal to customers who want to use their own hardware, while the ECS Appliance is for customers who "want to avoid the mess of hardware sourcing, sparing and maintenance."

David Goulden, CEO of EMC Information Infrastructure, positioned the new products as solutions for problems faced in the new age of mobile workers and customers, social media engagement, Big Data, and, of course, cloud computing.

"The industry is navigating the single-most transformative IT shift ever," Goulden said. "It's driven by billions of devices, billions of users and millions of applications. Not a single industry or organization is immune to this sweeping change. The priority for customers today is to drive competitive advantage by harnessing the forces of mobile, social, cloud and Big Data, while maximizing existing investments that support traditional enterprise workloads. The hybrid cloud model allows customers to run applications easily and cost-effectively inside or outside of their data centers, and today's software-defined products make this possible."

Posted by David Ramel on 05/07/2014 at 9:44 AM