Using Graphic Cards with Virtual Machines, Part 2

Tom Fenton previously showed how he used Virtual Dedicated Graphics Acceleration (vDGA), a technology that gives a VM unrestricted, fully dedicated access to a host's GPUs, to make a server's powerful graphics card available to a VM. Now he shows how he added that VM to Horizon and tested its performance.

In my previous article, I explained that I wanted to use the powerful graphics card (NVIDIA Quadro P2200) in my server (an Intel NUC 9 Pro) from a virtual machine (VM). I then described how I did this by using Virtual Dedicated Graphics Acceleration (vDGA), a technology that gives a VM unrestricted, fully dedicated access to a host's GPUs.

In this article, I will show you how I added that VM to Horizon and share the results of the tests I ran to assess its performance.

Adding the GPU VM to Horizon

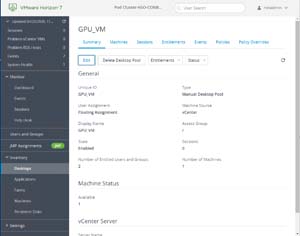

After logging on to Horizon Console, I created a manual, floating Horizon desktop pool that supported two 4K monitors. I also enabled automatic 3D rendering.

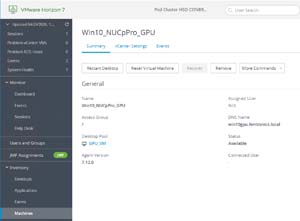

First, I added the vDGA VM to the desktop pool.

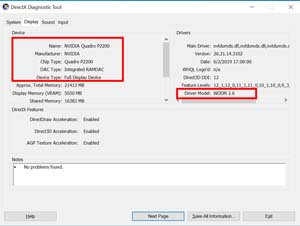

Then, I used the Horizon Client (version 5.4) to connect to the vDGA desktop over the Blast protocol and ran dxdiag.exe from the command line. The output showed that the desktop was using the NVIDIA Quadro P2200 with a WDDM 2.6 driver and reported no problems. This was very different from what I saw when I ran dxdiag over an RDP connection to the VM.
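
The dxdiag check can also be scripted so it is easy to repeat, for example after a driver update. The Python sketch below is a minimal example, not the method used in this article. It assumes dxdiag's /t switch (save the report as a text file), assumes dxdiag exits only after the report is written, and assumes the report's display section uses the "Card name:" and "Driver Model:" labels seen in Windows 10 builds. The function names are hypothetical.

```python
import os
import subprocess
import sys
import tempfile


def parse_display_devices(report_text):
    """Return (card name, driver model) pairs from a dxdiag text report."""
    devices = []
    card = None
    for line in report_text.splitlines():
        key, sep, value = line.strip().partition(":")
        if not sep:
            continue  # section titles and separator lines have no colon
        key, value = key.strip(), value.strip()
        if key == "Card name":
            card = value
        elif key == "Driver Model" and card is not None:
            devices.append((card, value))
            card = None
    return devices


def run_dxdiag_report():
    """Run `dxdiag /t <file>` (Windows only) and return the report text."""
    path = os.path.join(tempfile.gettempdir(), "dxdiag_report.txt")
    if os.path.exists(path):
        os.remove(path)  # avoid reading a stale report from an earlier run
    # Assumption: dxdiag exits only after it has finished writing the report.
    subprocess.run(["dxdiag", "/t", path])
    with open(path, "rb") as f:
        raw = f.read()
    # Decode UTF-16 if a byte-order mark is present; otherwise assume UTF-8/ASCII.
    if raw.startswith((b"\xff\xfe", b"\xfe\xff")):
        return raw.decode("utf-16")
    return raw.decode("utf-8", errors="replace")


if __name__ == "__main__" and sys.platform == "win32":
    for card, model in parse_display_devices(run_dxdiag_report()):
        print(f"{card}: {model}")
```

Inside a working vDGA desktop, the output would be expected to include a line naming the Quadro card and its WDDM driver model; over RDP, the same check would show whatever display adapter that session exposes instead.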

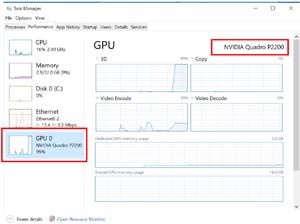

I then opened Task Manager and clicked the Performance tab; the NVIDIA Quadro P2200 and its GPU performance metrics were listed in the upper left.

ControlUp Console showed GPU details and metrics.

Because I wanted to know how well the system would perform as a CAD/CAM workstation, I ran the Catia and Maya viewsets of the SPECviewperf 12.1 benchmark on it.

I compared these results with those I obtained from a bare-metal install of Windows 10 on the NUC 9 Pro. The bare-metal results were about 15 percent better, but that install had all eight of the server's CPUs available, whereas the vDGA VM had only four vCPUs. After installing Catia on the vDGA VM, I was very happy with its performance.

Another task that I wanted to accomplish with the VM was encoding and transcoding video. To test this, I used ffmpeg to transcode a test file (TestInput.mkv) to HEVC (H.265) with NVIDIA's hardware encoder (hevc_nvenc), using the following command:

```
ffmpeg.exe -i TestInput.mkv -c:v hevc_nvenc -c:a copy -quality quality -b:v 3M -bufsize 16M -maxrate 6M outhevcNvidia.mp4
```
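
To make the transcode repeatable, the same command can be wrapped in a short Python script that first confirms the installed ffmpeg build includes the hevc_nvenc encoder, since not every ffmpeg build is compiled with NVENC support. This is a minimal sketch: the helper names are hypothetical, it relies only on ffmpeg's -hide_banner and -encoders options for the check, and the transcoding arguments are copied unchanged from the command above.

```python
import shutil
import subprocess


def build_hevc_nvenc_args(src, dst):
    """Argument list mirroring the ffmpeg command shown above."""
    return [
        "ffmpeg", "-i", src,
        "-c:v", "hevc_nvenc",   # NVIDIA NVENC hardware HEVC encoder
        "-c:a", "copy",         # copy the audio stream without re-encoding
        "-quality", "quality",  # kept exactly as in the original command
        "-b:v", "3M",           # target video bitrate
        "-bufsize", "16M",      # rate-control buffer size
        "-maxrate", "6M",       # peak video bitrate
        dst,
    ]


def has_encoder(encoders_output, name):
    """True if `ffmpeg -encoders` output lists the named encoder."""
    for line in encoders_output.splitlines():
        fields = line.split()
        # Encoder lines look like: " V....D hevc_nvenc  NVIDIA NVENC hevc encoder ..."
        if len(fields) >= 2 and fields[1] == name:
            return True
    return False


def transcode(src, dst):
    """Check for ffmpeg and hevc_nvenc, then run the transcode."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    listing = subprocess.run(
        ["ffmpeg", "-hide_banner", "-encoders"],
        capture_output=True, text=True, check=True,
    ).stdout
    if not has_encoder(listing, "hevc_nvenc"):
        raise RuntimeError("this ffmpeg build has no hevc_nvenc encoder")
    subprocess.run(build_hevc_nvenc_args(src, dst), check=True)
```

For example, `transcode("TestInput.mkv", "outhevcNvidia.mp4")` would run the same job as the command above, or raise an error if ffmpeg or its hevc_nvenc encoder is unavailable.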

ControlUp then showed that the VM was using the hardware encoder on the GPU.
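
If ControlUp is not available, NVIDIA's nvidia-smi tool, which is installed with the driver, can give a similar signal: its dmon mode with `-s u` prints per-GPU utilization, including an enc column for the hardware encoder. The Python sketch below samples it a few times while a transcode is running. The helper names are hypothetical, and the parser assumes dmon's usual layout of `#`-prefixed header lines (column names, then units) followed by whitespace-separated values.

```python
import subprocess


def parse_dmon(output):
    """Parse `nvidia-smi dmon` text into a list of {column: value} dicts."""
    columns = None
    rows = []
    for line in output.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        if stripped.startswith("#"):
            # The first header line names the columns (e.g. "# gpu sm mem enc dec");
            # the units line and any repeated headers are skipped.
            if columns is None:
                columns = stripped.lstrip("#").split()
            continue
        if columns:
            rows.append(dict(zip(columns, stripped.split())))
    return rows


def sample_encoder_utilization(samples=5):
    """Return the enc (%) readings from `nvidia-smi dmon -s u -c <samples>`."""
    out = subprocess.run(
        ["nvidia-smi", "dmon", "-s", "u", "-c", str(samples)],
        capture_output=True, text=True, check=True,
    ).stdout
    return [row["enc"] for row in parse_dmon(out) if "enc" in row]
```

Nonzero enc values while ffmpeg is running would suggest that NVENC, rather than the CPU, is doing the encoding.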

Conclusion

After using the NUC 9 Pro in a multi-monitor setup, disassembling the unit, examining its hardware, and running benchmark tests on it, I continue to be impressed by it. Although it is considerably larger than the other NUCs that I have reviewed, it packs a lot into that space. Its Compute Element supports 64GB of RAM, has space for two M.2 devices, and contains two Ethernet ports, a wireless chip, and an HDMI connector, among other components. The NUC 9 Pro has two PCIe slots for expansion, but you would be hard-pressed to use both if you install a graphics card in it. I did find that to get to any of the M.2 storage devices, or to remove the graphics card, I first needed to remove the Compute Element; to be honest, though, unless you are using this as a device test bed, you will seldom need to access these devices. With its multiple USB 3.1 and Thunderbolt ports, the device's external connectivity is excellent. Trading houses, engineering firms, and content creators would find it difficult to push the limits of this computer.

By running ESXi on the NUC 9 Pro with a vDGA VM, I was able to have the system serve as a dual-purpose unit.

More information on the NUC 9 Pro kit is located here. Intel's Product Compatibility Tool for the NUC 9 Pro is located here.

Addendum

After working with my vDGA VM for a few days, I noticed that the GPU was no longer being reported in Task Manager. Also, when I ran GPU-Z, the device was detected, but its GPU clock was reported as 0.

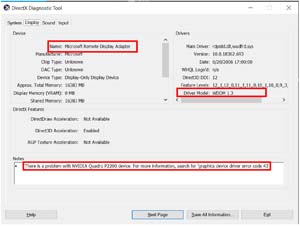

Device Manager showed that there was a problem with the device.

The driver indicated that it had problems.

I tried various ways to restart the driver and also rebooted the VM, but neither fixed the issue. After I rebooted the ESXi server, however, the GPU worked as expected. I searched online and found that others have seen this on physical machines. Given that, and the heavy load and reconfiguration I had put the machine through, I am not too concerned about this issue, but I will keep an eye on it.
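
One way to keep an eye on this is a small script, run periodically inside the VM, that queries the GPU with nvidia-smi and flags the symptoms described above. This is a hedged sketch, not a diagnostic tool: the helper names are hypothetical, it assumes nvidia-smi exits with an error when it cannot communicate with the driver, and treating a 0 MHz graphics clock as unhealthy is an illustrative rule rather than an NVIDIA recommendation.

```python
import subprocess


def parse_gpu_clocks(query_output):
    """Map GPU name -> graphics clock in MHz (None if not reported)."""
    clocks = {}
    for line in query_output.splitlines():
        if "," not in line:
            continue
        # rsplit keeps any commas inside the GPU name intact.
        name, clock = (field.strip() for field in line.rsplit(",", 1))
        clocks[name] = int(clock) if clock.isdigit() else None
    return clocks


def classify(clocks):
    """Turn parsed clocks into a short status string (illustrative rules)."""
    if not clocks:
        return "no GPU reported"
    if any(c in (None, 0) for c in clocks.values()):
        # Assumption: an idle but healthy GPU still reports a nonzero clock.
        return "GPU present but clock is 0 or unavailable"
    return "ok"


def check_gpu():
    """Query nvidia-smi and return a status string."""
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,clocks.gr",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        # Assumption: nvidia-smi fails outright when it cannot reach the driver.
        return "nvidia-smi failed (driver not responding?)"
    return classify(parse_gpu_clocks(result.stdout))


if __name__ == "__main__":
    print(check_gpu())
```

Scheduling `check_gpu()` with Task Scheduler and logging its result would give a timestamped record of when the GPU drops out, which could help correlate the problem with host-side events.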

About the Author

Tom Fenton has a wealth of hands-on IT experience gained over the past 30 years in a variety of technologies, with the past 20 years focusing on virtualization and storage. He previously worked as a Technical Marketing Manager for ControlUp. He also previously worked at VMware in Staff and Senior level positions. He has also worked as a Senior Validation Engineer with The Taneja Group, where he headed the Validation Service Lab and was instrumental in starting up its vSphere Virtual Volumes practice. He's on X @vDoppler.