Putting the 'C' in Confidential Computing

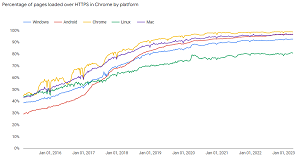

The IT industry is slowly growing up, putting basic building blocks in place to protect data and transactions now that our entire society relies on these foundations being solid. Take SSL / TLS, which protects "data in transit." Ten years ago only banks and other "highly secure" sites used it; nowadays it's taken for granted, and instead of looking for the green padlock, we look for the exception: the red warning that traffic between your browser and a site is not protected (thanks, Edward Snowden).

Percentages of Pages Loaded over HTTPS in Chrome by Platform (source: Google Transparency Report).

If you buy a new Windows laptop, its drive will be encrypted with BitLocker; ditto (in most cases) for your new smartphone or tablet. And data you store in your on-premises datacenters, or in any public cloud, will also be encrypted on disk ("data at rest").

But there's one area where we haven't progressed as far, and that's protecting data and code while they're in use. This is the realm of Confidential Computing, which we last looked at back in May 2019 -- in cloud years, that's WAY BEFORE dinosaurs roamed the earth.

Mark Russinovich, CTO of Azure, recently said, "We will see an expectation that data is always encrypted while it's in use, regardless of how sensitive it might be." In other words, not using confidential computing will be the exception (at least on the server / cloud side), while using it will be just normal.

In this article I'll look at why Azure is a leader in Confidential Computing, how the Confidential Computing Consortium (CCC) fits into the picture and which different services in Azure you can use Confidential Computing with.

The Basics

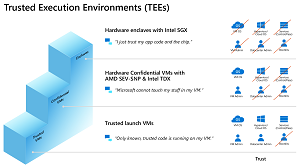

Protecting data as it's being computed on ("data in use") is achieved by running the computation in Trusted Execution Environments (TEEs). There are a few different levels here. AMD offers Secure Encrypted Virtualization -- Secure Nested Paging (SEV-SNP), which allows you to encrypt an entire VM or container. Intel Software Guard eXtensions (SGX) instead offers securely walled-off parts of memory and the CPU (enclaves) where the computation can be done. Intel's architecture means you have to write your code (or have third-party components) specifically to take advantage of SGX, whereas AMD's approach is essentially invisible to the workload.

Intel's fourth generation of Xeon Scalable processors, coming to Azure in 2023, adds Trust Domain Extensions (TDX), which will offer entire VM encryption as well.

Qualcomm and ARM processors aren't left behind; their TEE is called an ARM realm, but it's not offered in Azure yet.

The Spectrum of Protection in Different Trusted Execution Environments (source: Microsoft).

The first step on the staircase is trusted launch VMs, built on similar technology to trusted boot on your new Windows 11 laptop, ensuring that only trusted, signed and measured components are used to boot the system. The second step is confidential VMs where the entire VM / container is encrypted (AMD processors), and the third step (Intel processors) provides the most protection as only the code you allow is running inside the enclave.

Another way to think about this is the Trusted Computing Base (TCB), which is all the system components critical to establishing and maintaining the security of the system. Using a Zero Trust approach to computing, you can assume that all components outside of the TCB for a given system have been compromised, while your data is still secure in the TEE.

As a real-world example, in your on-premises datacenter, your server hardware, the hypervisor and the OS / applications you run are all inside your TCB. This might be an acceptable risk as you have control over (some of) those components. Once you move to a public cloud, you also have to trust the cloud provider, including the possibility of a rogue fabric administrator. With all flavors of Azure Confidential Computing, the hypervisor is moved outside of the TCB, shielding the data or VMs inside from any cloud provider administrator access. As seen above, you can even reduce the TCB to selected lines of code in your application.
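To make the TCB idea concrete, here is a minimal sketch in Python that treats each deployment model as a set of components you have to trust. The component labels and the `data_at_risk` helper are my own illustrative inventions, not anything from an Azure API, and the exact membership of the confidential VM and enclave sets is a simplifying assumption.

```python
# Illustrative component labels only; none of these names come from an Azure API.
ON_PREMISES = frozenset({"server hardware", "hypervisor", "OS", "application"})
PUBLIC_CLOUD = ON_PREMISES | {"cloud provider administrators"}
# Assumption: with a confidential VM you still trust the CPU, the guest OS and your app.
CONFIDENTIAL_VM = frozenset({"CPU", "OS", "application"})
# Assumption: with an application enclave you trust only the CPU and the enclave code.
APP_ENCLAVE = frozenset({"CPU", "enclave code"})


def data_at_risk(tcb, compromised):
    """Data is exposed only if a compromised component sits inside the TCB."""
    return bool(set(tcb) & set(compromised))


if __name__ == "__main__":
    # Zero Trust assumption: everything outside the TCB may already be compromised.
    breach = {"hypervisor", "cloud provider administrators"}
    models = [
        ("public cloud", PUBLIC_CLOUD),
        ("confidential VM", CONFIDENTIAL_VM),
        ("app enclave", APP_ENCLAVE),
    ]
    for name, tcb in models:
        print(f"{name}: at risk = {data_at_risk(tcb, breach)}")
```

The smaller the set, the fewer breaches can reach your data: in this toy model a compromised guest OS still exposes a confidential VM, but not an application enclave.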

The final component of this approach is attestation -- how does the VM, container or app know that it's running on trusted hardware? Azure Attestation is both a service and a framework for attesting TEEs, available throughout Azure at no cost, and it uses Intel SGX enclaves to protect the data it's processing itself. It checks if a signed TEE quote chains up to a trusted designer (both AMD and Intel CPUs have individual keys burned into the CPUs, so they check with the respective manufacturer). Azure Attestation also checks if the TEE meets the security baseline (which you can customize) and verifies the trustworthiness of the TEE binaries. For Intel SGX specifically, Trusted Hardware Identity Management (THIM) caches attestation collateral to minimize latency and increase resiliency.
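The three checks described above (does the quote chain to a trusted key, does the TEE meet the baseline, is the binary trusted) can be sketched in a few lines of Python. This is a toy model and not the Azure Attestation API: the function names, the quote layout and the `MANUFACTURER_KEY` are all hypothetical, and I'm using an HMAC with a shared secret as a stand-in for the asymmetric keys and certificate chains that real CPUs use.

```python
import hashlib
import hmac

# Hypothetical stand-in for the key burned into the CPU that the manufacturer vouches for.
# Real TEEs use asymmetric keys and certificate chains, not a shared secret.
MANUFACTURER_KEY = b"demo-manufacturer-root-key"


def sign_quote(measurement, security_version, key=MANUFACTURER_KEY):
    """Build a toy 'quote': a code measurement and version, plus a signature over both."""
    payload = f"{measurement}|{security_version}".encode()
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {
        "measurement": measurement,
        "security_version": security_version,
        "signature": signature,
    }


def verify_quote(quote, trusted_measurements, min_security_version):
    """Return (ok, reason) after running the three checks in order."""
    payload = f"{quote['measurement']}|{quote['security_version']}".encode()
    expected = hmac.new(MANUFACTURER_KEY, payload, hashlib.sha256).hexdigest()
    # 1. Does the signature chain up to the trusted manufacturer key?
    if not hmac.compare_digest(expected, quote["signature"]):
        return (False, "signature does not chain to trusted key")
    # 2. Does the TEE meet the (customizable) security baseline?
    if quote["security_version"] < min_security_version:
        return (False, "below security baseline")
    # 3. Is the measured binary one we trust?
    if quote["measurement"] not in trusted_measurements:
        return (False, "untrusted binary measurement")
    return (True, "ok")


if __name__ == "__main__":
    enclave_hash = hashlib.sha256(b"my enclave code").hexdigest()
    quote = sign_quote(enclave_hash, security_version=3)
    print(verify_quote(quote, {enclave_hash}, min_security_version=2))
```

In this sketch the caller only releases secrets to the workload if `verify_quote` returns `True`; raising `min_security_version` is the toy equivalent of tightening the baseline policy.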

The Confidential Computing Consortium (CCC) is a community project at the Linux Foundation that aims to define and accelerate the adoption of confidential computing across the IT industry.

Scenarios

The obvious scenario for Confidential Computing is the public cloud, where, for any workload that processes sensitive data, you can ensure that no one at the cloud provider has access to that data.

Another strong use case is multi-party data sharing. Say each hospital has some patient data with which to build an ML model to assist in diagnosis, but not enough to build an accurate model. Working with other hospitals will result in a better model, but you can't share the data. Using Confidential Computing, each hospital (or bank or x -- fill in whatever type of institution could benefit from data analysis without sharing sensitive data with the other businesses) can securely contribute its data. These sorts of consortium scenarios will also benefit greatly from the Azure Managed Confidential Consortium Framework (currently in preview), which assists with the setup and ongoing management of the open source Confidential Consortium Framework.

Confidential Computing also gives you end-to-end, verifiably secure protection of your data: when it's stored on disk, when it's transmitted over the network and when it's processed.

Companies already using Azure confidential computing include Signal, Royal Bank of Canada and UCSF (for healthcare).

Confidential Virtual Machines and Containers

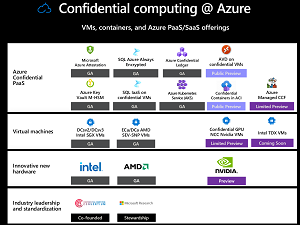

As you might expect, since those first baby steps back in 2019, Confidential Computing has spread to many areas in Azure.

Confidential Computing in Azure (source: Microsoft).

Notice that in the PaaS box above, Azure SQL Always Encrypted and SQL in confidential VMs can both take advantage of confidential computing, moving your database administrators outside your TCB. As expected, Azure Kubernetes Service (AKS) can do the same, provided the nodes are running on confidential computing series VMs.

Recently released is support for confidential computing in Azure Virtual Desktop and in Azure Container Instances (ACI). Unlike Kubernetes, ACI is very simple to stand up and maintain, so if you just need a few containers, supplemented by the latest features for modern development -- particularly if they only run for short amounts of time -- ACI is the way to go. And now, if you need to encrypt them (Linux only for now), support is in public preview. Note that with both full VM encryption and the support for encrypted containers in ACI, you can migrate existing VMs/containers to Azure and gain the advantage of the protection without changing a line of code.

Confidential GPUs

So far, we've looked at the CPU silicon from AMD and Intel. They differ in their approach and what you can use each of their platforms for. I'm reminded of when Intel first launched their 64-bit Itanium processors on the market and told everyone that the only way to get to 64-bit computing from 32-bit was to rewrite every OS and every app. And AMD said, hold my beer, and came up with x64, which is what every CPU (apart from ARM / Qualcomm) uses today. I'm not saying that Intel's approach is going to crash and burn like that, but it's interesting to see the different approaches.

But GPUs are of course heavily used in the cloud, both for actual screen display calculations (render farms and Azure Virtual Desktop CAD workstations) and, more importantly, for machine learning inference. Thus Microsoft is working with NVIDIA on its Hopper architecture and the new H100 Tensor Core GPU for confidential computing on GPUs. It links the GPU TEE to the CPU TEE, and you will even be able to connect multiple GPUs to the same CPU TEE in the future. NVIDIA is taking an interesting approach, where you can boot an H100 GPU in either normal or trusted mode, and it's using a hardware firewall in the PCI Express bus to partition the data flow, plus encrypting it all.

Wrapping Up

These recent advances in Confidential Computing in Azure were revealed across three sessions at Microsoft's inaugural Secure conference.

You can also read more on the Azure Confidential Computing blog.

There were a lot of other interesting reveals at Secure: most obviously the ChatGPT-based Security Copilot, which we'll have to wait some time to test out ourselves, but also Optical Character Recognition (OCR) support in Data Loss Prevention (DLP) (max 50 MB files, 150 different languages recognized), DLP for virtual desktops, Teams collaboration protection and sensitive data awareness for Azure storage accounts. The recently released Defender for Threat Intelligence (MDTI) now has an API connector for Sentinel, which is very welcome.

It'll be interesting to see if Mark Russinovich is right, and whether we'll look back on this period amazed that not every computer was automatically using Confidential Computing.