News

AI Brings Star Trek 'Universal Translator' Closer to Reality

Meta announced a new AI model for speech and text translations that seems to bring Star Trek's "universal translator" closer to reality.

Readers of a certain age know that the Star Trek TV series in the '60s had humans effortlessly talking with aliens of all types thanks to the automatic universal translator.

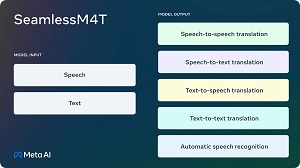

However, rather than scanning alien brain waves to find a basis for language commonality and translation, Meta's new SeamlessM4T is just a multimodal AI model, though it can perform speech-to-text, speech-to-speech, text-to-speech, and text-to-text translations for up to 100 languages depending on the task.

And the company doesn't make any Star Trek comparisons in its announcement. Rather, it refers to another pop culture universal translator from a renowned sci-fi movie (maybe it's an age thing).

[Click on image for larger view.] SeamlessM4T (source: Meta).

[Click on image for larger view.] SeamlessM4T (source: Meta).

"Building a universal language translator, like the fictional Babel Fish in The Hitchhiker's Guide to the Galaxy, is challenging because existing speech-to-speech and speech-to-text systems only cover a small fraction of the world's languages," Meta said in an Aug. 22 post. "But we believe the work we're announcing today is a significant step forward in this journey."

Specifically, the research-licensed SeamlessM4T supports:

- Speech recognition for nearly 100 languages

- Speech-to-text translation for nearly 100 input and output languages

- Speech-to-speech translation, supporting nearly 100 input languages and 36 (including English) output languages

- Text-to-text translation for nearly 100 languages

- Text-to-speech translation, supporting nearly 100 input languages and 35 (including English) output languages

Along with releasing the project under a search license, Meta is also releasing the metadata of SeamlessAlign, the biggest open multimodal translation dataset to date, totaling 270,000 hours of mined speech and text alignments.

"This work builds on advancements Meta and others have made over the years in the quest to create a universal translator," Meta said in another post. "Last year, we released No Language Left Behind (NLLB), a text-to-text machine translation model that supports 200 languages and has since been integrated into Wikipedia as one of its translation providers. A few months later, we shared a demo of our Universal Speech Translator, which was the first direct speech-to-speech translation system for Hokkien, a language without a widely used writing system. Through this, we developed SpeechMatrix, the first large-scale multilingual speech-to-speech translation dataset, derived from SpeechLASER, a breakthrough in supervised representation learning.

"Earlier this year, we also shared Massively Multilingual Speech, which provides automatic speech recognition, language identification, and speech synthesis technology across more than 1,100 languages. SeamlessM4T draws on findings from all of these projects to enable a multilingual and multimodal translation experience stemming from a single model, built across a wide range of spoken data sources and with state-of-the-art results."

For more information, Meta invites readers to:

About the Author

David Ramel is an editor and writer at Converge 360.