Last week I outlined how I go about provisioning my own lab for testing virtualization and supporting my blogging efforts. One of the keys to having a functional lab is a shared storage device. I use the DroboPro iSCSI storage device. Since I purchased the unit, the DroboElite version has been released. While the DroboPro and DroboElite are candidates for home or test labs, Data Robotics (maker of the Drobo series) is positioning itself well for the SMB virtualization market.

I had a chance to evaluate the DroboElite, and it adds some refinements optimized for VMware virtualization installations. The DroboElite is certified on the VMware vSphere compatibility list for up to four hosts. The new features of the DroboElite over the DroboPro are:

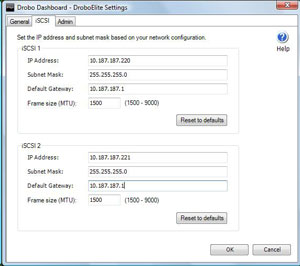

Second iSCSI network interface: The cornerstone feature is the ability to use two Ethernet connections for iSCSI traffic to a VMware ESX/ESXi host (see Fig. 1).

Figure 1. You can set up two network interfaces on the DroboElite, to be placed on two different switches for redundancy, right from the dashboard.

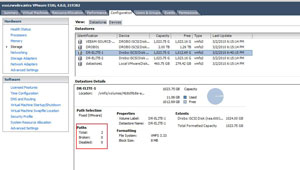

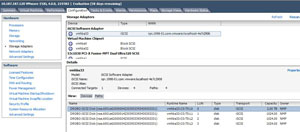

This also allows the multipath driver in ESX and ESXi to see a second path to the disk. Whether or not you place the two interfaces on separate switches, and depending on the connectivity available on the host and on the physical network, you can design a much more resilient arrangement than with the DroboPro, which has only one network interface. Fig. 2 shows the second path made available to the datastore:

Figure 2. The second network interface allows DroboElite to present two paths to the ESXi host.
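To illustrate what a second path buys you, here is a toy model of round-robin path selection with failover, loosely following the idea behind a round-robin path selection policy. It is a sketch under stated assumptions, not VMware's implementation: the class name, the runtime path names and the `ios_per_path` switching threshold (defaulting to 1000 here as an assumption) are all hypothetical.

```python
class RoundRobinPaths:
    """Toy round-robin multipath selector with failover (illustrative only)."""

    def __init__(self, paths, ios_per_path=1000):
        self.active = list(paths)          # paths currently usable
        self.ios_per_path = ios_per_path   # I/Os to issue before rotating
        self._index = 0
        self._issued = 0

    def next_path(self):
        """Return the path for the next I/O, rotating after ios_per_path I/Os."""
        if not self.active:
            raise RuntimeError("no active paths: the datastore is unreachable")
        if self._issued >= self.ios_per_path:
            self._index = (self._index + 1) % len(self.active)
            self._issued = 0
        self._issued += 1
        return self.active[self._index]

    def fail_path(self, path):
        """Drop a failed path; I/O continues on the surviving paths."""
        self.active.remove(path)
        self._index = self._index % len(self.active) if self.active else 0
        self._issued = 0


# Hypothetical runtime names for the two DroboElite paths.
selector = RoundRobinPaths(["vmhba33:C0:T0:L0", "vmhba33:C1:T0:L0"], ios_per_path=2)
print([selector.next_path() for _ in range(4)])
selector.fail_path("vmhba33:C1:T0:L0")
print(selector.next_path())
```

With a single interface (the DroboPro case), one call to `fail_path` leaves no path at all, which is exactly the gap the second interface closes.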

While the second network interface is a great touch, the DroboElite still has only one power supply. This is my main concern with using it as primary storage for a workplace virtualization installation. Complicating matters, the single power supply is neither removable nor a standard part.

Increased Performance

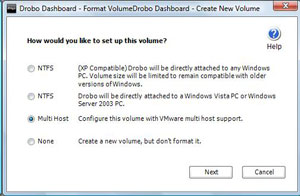

Simply speaking, in terms of user experience, the DroboElite is faster than the DroboPro. I asked Data Robotics for specific information on the controller, but the only details they offered were that the DroboElite has 50 percent more memory and a 50 percent faster processor. Beyond having more resources on the controller, there are also some code-level changes in the DroboElite. These are most visible in the volume creation task, where a volume can be designated as a VMware multi-host volume (see Fig. 3).

Figure 3. A volume is created with the specific option of being a VMware datastore.

Like any storage system, if it gets busy, you feel it. The DroboElite is no exception. At one point, I installed two operating systems concurrently and the other virtual machines on the same datastore were affected. The DroboPro has produced this behavior as well, but it wasn't as pronounced on the DroboElite.

First Impression

Configuring the DroboElite is a very simple task. I believe I had it up and running within 20 minutes, including the time to unpack the controller and five drives. For certain small environments, the DroboElite will be a great fit at the right price. The DroboStore lists a DroboElite unit with eight 2 TB drives (16 TB raw), providing 12.4 TB of storage, at $5,899 USD. Comment here.
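Working the list price into a cost per terabyte makes comparisons with other arrays easier. This small calculation uses only the figures quoted in this post ($5,899, eight 2 TB drives, 12.4 TB); the function and constant names are illustrative.

```python
def cost_per_tb(price_usd, capacity_tb):
    """Price divided by capacity, in USD per TB."""
    return price_usd / capacity_tb


PRICE_USD = 5899     # DroboStore list price quoted in this post
RAW_TB = 8 * 2       # eight 2 TB drives
LISTED_TB = 12.4     # capacity figure quoted with the price

print(f"Per raw TB:    ${cost_per_tb(PRICE_USD, RAW_TB):,.2f}")
print(f"Per listed TB: ${cost_per_tb(PRICE_USD, LISTED_TB):,.2f}")
print(f"Gap between raw and listed capacity: {1 - LISTED_TB / RAW_TB:.1%}")
```

That works out to roughly $369 per raw TB and $476 per listed TB, with about 22.5 percent of raw capacity not counted in the listed figure.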

Posted by Rick Vanover on 03/09/2010 at 12:47 PM

There was quite a buzz about what people do for their virtualization test environments. The theme of VMware Communities Podcast #79, white boxes and home labs, spurred quite a discussion around the blogosphere, as many bloggers and people learning about virtualization go about this differently. Surprisingly, many use physical systems to test and learn about virtualization.

Many of the bloggers took time to outline the steps they take and detail their private labs. I wrote about my own virtualization lab on my personal blog, outlining the layout of where I do most of my personal development work.

I make it a priority to separate my professional virtualization practice from my blogging activities. My lab is basic in that the host server, a ProLiant ML350, is capable of running all of the major hypervisors. Further, I make extensive use of the nested ESX (or vESX) functionality.

For a lab to be successful as a learning vehicle, some form of shared storage should be used to support the advanced features. In my lab, I use a DroboPro as an iSCSI SAN for my ESXi server and all subsequent vESX servers. I can also connect Windows servers to the DroboPro for storage using the built-in iSCSI Initiator. I purchased the DroboPro at a crossroads of product lifecycles; the DroboElite was released in late 2009 and offers increased performance as well as dual Ethernet interfaces for iSCSI connectivity.

While my home lab is sufficient to effectively test all elements of VMware virtualization, there are some really over-the-top home labs out there. Jason Boche's home lab takes the cake as far as I can tell. Check out this post outlining the unboxing of all the gear as it arrived and his end-state configuration of some truly superior equipment.

How do you go about the home lab for virtualization testing? Do you use it as a training tool? Share your comments here.

Posted by Rick Vanover on 03/04/2010 at 12:47 PM

I am not sure when typical server consolidation with virtualization officially became known as an on-premise private cloud, but it has. We will see more products blur the line between on-premise infrastructure and the major cloud providers, such as Amazon Web Services, Microsoft Azure and others.

One category that many internal infrastructure teams may want to consider sending to the cloud is the lab management segment. Lab management is a difficult beast. For organizations with a large number of developers, the requests for test systems can be immense. Putting development (lab-class) virtual servers, even temporarily, in the primary server infrastructure may not be the best use of resources.

That is the approach VMLogix takes with its Lab Manager Cloud Edition product. Simply put, the lab management technology that made VMLogix popular in the virtualization landscape is extended to the cloud. Much of VMLogix's technology history is reflected in its current provisioning offerings for Citrix Xen technologies, as well as a broad set of offerings for VMware environments. Lab Manager Cloud Edition lets administrators create a robust portal into the Amazon EC2 and S3 clouds so they can give developers server resources on demand without incurring the expense of internal storage and server resources.

The primary issue when moving anything to the cloud is security. For a lab management function, I believe security can be addressed with a few practice points. The first is to use sample data in the cloud if a database is involved. You could also raise intellectual property concerns about developers' code and configuration and their transfer between the cloud and your private network. This can be somewhat mitigated by the network traffic rules that can be applied to instances launched from an Amazon Machine Image (AMI).
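EC2 security groups act as allow-lists: inbound traffic is denied unless a rule permits it. The sketch below mimics that evaluation locally with Python's `ipaddress` module to show how lab instances could be restricted to a corporate address range. It does not call any AWS API, and the rules and the 203.0.113.0/24 range (a documentation block) are hypothetical.

```python
import ipaddress

# Hypothetical allow rules: (protocol, port, source CIDR). Anything else is denied.
ALLOWED_RULES = [
    ("tcp", 22, "203.0.113.0/24"),    # SSH from the corporate network
    ("tcp", 3389, "203.0.113.0/24"),  # RDP from the corporate network
]


def is_allowed(protocol, port, source_ip, rules=ALLOWED_RULES):
    """Return True if an inbound connection matches any allow rule (default deny)."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        protocol == proto and port == rule_port and addr in ipaddress.ip_network(cidr)
        for proto, rule_port, cidr in rules
    )


print(is_allowed("tcp", 22, "203.0.113.45"))    # True
print(is_allowed("tcp", 22, "198.51.100.7"))    # False: outside the corporate range
print(is_allowed("tcp", 1433, "203.0.113.45"))  # False: no rule opens SQL Server
```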

VMLogix is working on integrating Lab Management with the upcoming Amazon Virtual Private Cloud (VPC) to bridge a big gap. For most private address spaces, this will allow your IP address space to be extended to the cloud over a site-to-site VPN. This can also include Windows domain membership, which would allow centralized management of encryption, firewall policies and other security configuration.
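Extending your private address space over a site-to-site VPN only works cleanly if the cloud-side subnet does not collide with ranges already in use on-premises. A quick pre-check, using hypothetical CIDR ranges and a hypothetical helper name, could look like this:

```python
import ipaddress


def overlapping_ranges(on_prem_cidrs, cloud_cidr):
    """Return the on-premises ranges that overlap the proposed cloud subnet."""
    cloud = ipaddress.ip_network(cloud_cidr)
    return [c for c in on_prem_cidrs if ipaddress.ip_network(c).overlaps(cloud)]


# Hypothetical on-premises address plan.
ON_PREM = ["10.0.0.0/16", "10.1.0.0/16", "192.168.10.0/24"]

print(overlapping_ranges(ON_PREM, "10.1.128.0/20"))  # ['10.1.0.0/16']: pick another range
print(overlapping_ranges(ON_PREM, "10.20.0.0/16"))   # []: no conflict with the plan
```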

Just as with transitioning from development to production in on-premise clouds, application teams need to be good at transporting code and configuration between the two environments. Whether this happens in the datacenter or in the cloud, the requirement doesn't change.

The VMLogix approach is to build on Amazon's clearly defined allocation and cost model for resources to allow organizations to provision their lab management functions in the cloud. This covers everything from who can create a virtual machine to how long the AMI will be valid. Lab Manager Cloud Edition also allows AMIs to be provisioned with a series of installable packages, such as the Apache web server, the .NET Framework, and almost anything else that supports automated installation or configuration. Fig. 1 is a snapshot of the Lab Manager Cloud Edition portal:

Figure 1. The Lab Manager Cloud Edition allows administrators to deploy virtual servers in the Amazon cloud with a robust provisioning scheme.
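The policy side of this (who may create a machine, and how long it stays valid) can be sketched as a simple lease check. The names, the allowed requesters and the 14-day cap below are hypothetical and do not reflect Lab Manager Cloud Edition's actual configuration model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

ALLOWED_REQUESTERS = {"dev-team-a", "qa"}  # hypothetical: who may provision
MAX_LEASE_DAYS = 14                         # hypothetical: longest allowed lease


@dataclass
class LabRequest:
    requester: str
    image_id: str                                 # hypothetical AMI ID
    lease_days: int
    packages: list = field(default_factory=list)  # e.g. web server, .NET


def approve(req: LabRequest, now: datetime) -> datetime:
    """Return the lease expiry if the request meets policy; raise otherwise."""
    if req.requester not in ALLOWED_REQUESTERS:
        raise PermissionError(f"{req.requester} may not provision lab machines")
    if not 1 <= req.lease_days <= MAX_LEASE_DAYS:
        raise ValueError(f"lease must be between 1 and {MAX_LEASE_DAYS} days")
    return now + timedelta(days=req.lease_days)


def is_expired(expiry: datetime, now: datetime) -> bool:
    return now >= expiry


req = LabRequest("qa", "ami-00000000", 7, ["apache", "dotnet"])
expiry = approve(req, datetime(2010, 3, 1))
print(expiry)                                    # 2010-03-08 00:00:00
print(is_expired(expiry, datetime(2010, 3, 9)))  # True
```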

Does cloud-based lab management seem appropriate at this time? Do we need something like Amazon VPC in place first? Share your comments here and let me know if you want to see this area explored more.

Posted by Rick Vanover on 03/01/2010 at 12:47 PM

Intel, pushing 10 Gigabit Ethernet (10 Gig-E) for a number of reasons, just published a report outlining over one million I/O operations per second (IOPS). One of the comments from the report's associated Webcast mentions the practice of running the iSCSI Initiator inside the guest virtual machine. This fundamentally reverses the usual perspective for all virtualization platforms and deserves real consideration.

Before I go into what I think of this material, consider the fact that achieving 1 million IOPS is, for all intents and purposes, applicable to no one. Besides, the disk used was RAM and I don't have a RAM SAN for the virtualization circles I cross. But it would be darn cool.
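A headline IOPS number only translates into bandwidth once a block size is attached to it. This back-of-the-envelope calculation (payload only, ignoring iSCSI, TCP/IP and Ethernet overhead) shows how quickly one million IOPS outgrows a single 10 Gb/s link as the block size rises. The block sizes are illustrative, not the ones used in Intel's test.

```python
def required_gbps(iops, block_bytes):
    """Payload bandwidth in gigabits per second (no protocol overhead)."""
    return iops * block_bytes * 8 / 1e9


LINK_GBPS = 10  # one 10 Gig-E port

for block in (512, 4096, 8192):
    need = required_gbps(1_000_000, block)
    verdict = "fits" if need <= LINK_GBPS else f"needs ~{need / LINK_GBPS:.1f} links"
    print(f"1M IOPS at {block:>4} B blocks: {need:6.2f} Gb/s ({verdict})")
```

At 512-byte blocks the payload fits within one 10 Gb/s link; at 4 KB it would need several, which is worth keeping in mind when reading any headline IOPS figure.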

Now, back to the Webcast's takeaway for Hyper-V and the iSCSI Initiator in Windows Server 2008 R2. The material sets out to prove that any workload can run on the software initiator using the Intel 82599 controller.

One comment in the material is that administrators may choose to use the initiator inside the virtual machine. This would have the guest virtual machine communicate with the iSCSI storage directly. This would not apply to the Windows boot volume with the software initiator, though that would be a nice roadmap item. Putting two and two together, you can see that this can relieve the shared storage requirements for the guest virtual machine.

In VMware circles, the hands-down recommendation is to use the VMFS file system; I've never heard a recommendation to use in-guest software initiators. Even so, there are use cases for a raw device mapping (RDM). For a Hyper-V installation, this architecture may work well with tiered storage: the operating system drives go on a designated Cluster Shared Volume, and the guest's storage goes directly to the iSCSI storage resource.

While 10 Gig-E is not yet available everywhere, future designs may benefit from a potentially simpler host configuration and less reliance on Cluster Shared Volumes storage resources for potentially large virtual machines.

What do you think of in-guest iSCSI initiators from a design perspective? Comment below.

Posted by Rick Vanover on 02/25/2010 at 12:47 PM

The more you look into server virtualization, the more you find yourself looking back at the storage for arrangement, optimization, troubleshooting and expansion capabilities.

In my current virtualization practice, I'm preparing for a big jump upward with regard to smart storage. To help me do this, I'm reading the NetApp and VMware vSphere Storage Best Practices book. This book is written by five top-notch people, including Vaughn Stewart from NetApp.

While this book is a quick read at only 124 pages, it is right to the point in so many areas. Unfortunately with virtualization, so many things revolve around the storage product in use. This is complicated by the fact that if you change products, there is a learning curve associated with getting the new product in line with your virtualization requirements.

I'm not quite done with the book, but I find myself going back and forth on configuration, terms and a new inventory of best practices.

During my prior position working with virtualization, I was a storage customer rather than the storage provider. There were many conversations on which type of storage to use, mainly SAS or SATA disk. Yet, I wasn't involved with the storage system presentation and administration of the aggregated storage, much less a fundamentally different product series to work with.

By bringing storage and virtualization together, a lot of benefits can be realized. Storage vendors that have vCenter plug-in and pluggable storage architecture (PSA) support will allow virtualization administrators to deliver the best virtualized storage without wasting backend resources. My earlier post on thin provisioning is just the start. Once features like snapshots, deduplication, volume cloning, RAID selection, class of disk and other critical decisions roll into the mix, you'll quickly see that storage is everything.

Anyone want to disagree with me that storage is more important than RAM or CPU? I think that it is.

Posted by Rick Vanover on 02/23/2010 at 12:47 PM

Last week, I told you five things to know about VMware certification. Now, I want to flip that around and offer five things you need to know about making virtualization one of your core competencies:

1. So you know virtualization, but what about storage and networking? I mentioned how important these are before, but I can't stress this enough. When it comes to designing a robust virtualized architecture, you'll have to be a passable network and storage engineer.

2. You will have to be a licensing expert. Licensing historically has not been one of my strong points. But virtualization has changed how I approach general infrastructure practices, from per-processor licenses with SQL Server to Windows Datacenter's unlimited virtualization rights, and even USB license dongles. You'll have to deal with it and make it work.

3. You will have to be a data protection expert. Architecting backups, providing off-site disaster recovery, replication, mirroring, RPO/RTO and other core competencies will have to be part of the virtualization professional's repertoire.

4. You will have to be a database expert. I pick on databases in particular because so many topics come into play with database servers. The good news is that there are now plenty of resources the virtualization administrator can use to prepare a strong case for supporting databases in this environment. Here are the best resources for supporting databases in virtualization:

5. You will have to evangelize. Unfortunately, people generally don't want to change. To realize new IT architectures with superior benefits, mindsets have to shift to a new way of thinking. It takes patience to explain the technology to people whose understanding may be limited to a non-virtualized environment. But if you succeed at this and deliver a superior virtualized infrastructure, you will build political capital in your organization.
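The RPO/RTO point in item 3 lends itself to a quick check. Assuming point-in-time copies taken at the start of each job, and jobs shorter than the interval between them, a failure just before a job finishes falls back to the previous copy, so the worst-case data loss is roughly one interval plus one job duration. The helper names and schedules below are hypothetical.

```python
from datetime import timedelta


def worst_case_loss(interval: timedelta, job_duration: timedelta) -> timedelta:
    """Failure just before a job completes falls back to the previous job's copy,
    taken one interval plus one job duration earlier."""
    return interval + job_duration


def meets_rpo(interval: timedelta, job_duration: timedelta, rpo: timedelta) -> bool:
    return worst_case_loss(interval, job_duration) <= rpo


# Hypothetical schedules checked against a 4-hour RPO.
rpo = timedelta(hours=4)
print(meets_rpo(timedelta(hours=24), timedelta(hours=2), rpo))      # False: nightly backup
print(meets_rpo(timedelta(minutes=15), timedelta(minutes=5), rpo))  # True: frequent replication
```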

Posted by Rick Vanover on 02/18/2010 at 12:47 PM

A new vSphere feature is the VMXNET3 network interface that is available to assign to a guest VM. This is one of four options available to virtual machines at version 7 (the other three being E1000, flexible and VMXNET2 enhanced).

There are a couple of key notes about using the VMXNET3 driver. The most obvious is that the guest will appear to be using a 10 Gb/s interface. While the underlying media may not actually be 10 Gb/s, the operating system will perceive it to be. This can help VM-to-VM traffic on the same host and port group, which in most situations is carried by host CPU cycles rather than the physical Ethernet media (as with VMXNET2). What it doesn't mean is that it is 10x faster than a 1 Gb/s connection. The VMware VMXNET3 whitepaper, available as a PDF from VMware, shows the performance gain in a test situation.

It's unfortunate that this driver is not the default for a new virtual machine. There are a few reasons for this, primarily that fault tolerant (FT) virtual machines are not supported with the VMXNET3 driver, nor with the paravirtualized SCSI (PVSCSI) driver. Check Scott Lowe's information on this topic.

Windows Server 2008 and Linux virtual machines will benefit most from VMXNET3 due to added support for key features such as receive-side scaling (RSS). There are also new IPv6 offload features, should you be using IPv6. VMXNET3 also has larger transmit and receive buffers, which can accommodate bursty, high-throughput guest VMs.

I'm going to configure my VMs for the VMXNET3 virtual network driver. I don't have any FT-required systems, nor do I use the PVSCSI drivers.
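For reference, the adapter type is recorded in a virtual machine's .vmx file as `ethernetN.virtualDev`. The sketch below rewrites that key in a copy of .vmx text; the helper name is hypothetical, and it assumes the VM is powered off and that VMware Tools (which supplies the VMXNET3 guest driver) is installed. The supported route is removing and re-adding the adapter in the vSphere Client, and the guest will typically see a new network device whose IP settings must be reapplied. Given the FT limitation above, leave FT-protected VMs alone.

```python
import re


def set_nic_type(vmx_text: str, nic_index: int = 0, dev: str = "vmxnet3") -> str:
    """Return .vmx text with ethernetN.virtualDev set to dev, adding the key if absent."""
    key = f"ethernet{nic_index}.virtualDev"
    new_line = f'{key} = "{dev}"'
    pattern = re.compile(rf"^{re.escape(key)}\s*=.*$", re.MULTILINE | re.IGNORECASE)
    if pattern.search(vmx_text):
        # A function replacement avoids backslash processing in the new line.
        return pattern.sub(lambda _m: new_line, vmx_text, count=1)
    return vmx_text.rstrip("\n") + "\n" + new_line + "\n"


sample = (
    'displayName = "web01"\n'
    'ethernet0.present = "TRUE"\n'
    'ethernet0.virtualDev = "e1000"\n'
)
print(set_nic_type(sample))
```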

Do you see yourself using VMXNET3? Share your comments here.

Posted by Rick Vanover on 02/16/2010 at 12:47 PM

One of the great things about blogging and other social networking tools such as Twitter is the ability to get things done quickly. Last week, I was tweeting with fellow virtualization blogger Jon Owings of 2VCPs and a Truck. It was quite simple: Jon said that he didn't know what to blog about. I replied with a topic idea and Jon immediately took it. The result is this post, where Jon's views on VMware certification are spelled out.

Now that you've read Jon's list, here's my list of five things you should know about VMware certification:

1. Certification is not a substitute for experience. While this may seem self-evident, real-world experience cannot be simulated. The VMware certification does emphasize troubleshooting, but unfortunately it can't be used as a broad-reaching gauge of experience. In my personal certification path, I made sure I had over two years of VI3 experience before seeking the VMware Certified Professional. This follows the spirit of my other certification efforts as well: I didn't achieve the Windows Server 2008 MCITP credential until mid-2009. It is important to note that Jon is a solution provider and I'm not. Therefore, I don't have to keep up with the cutting edge of certifications.

2. Achieving a VMware certification is the single most effective way of rounding out one's external presentation. I've always believed that one's marketability is the sum of one's technical experience from current or previous jobs, formal education and certification inventory. Each one is pivotal for the well-rounded IT professional. For virtualization professionals, the VMware certification creates a nice cornerstone to that model. I've even said before that virtualization has changed my life, and the VCP certification in particular has done that for me.

3. The VCP is becoming diluted. There are quite a few VCPs out there now. Between 2005 and 2007, if you had the experience plus the certification, you could be very selective about opportunities. In 2010, the novelty has certainly worn off. This is despite the fact that VMware requires prospective VCPs to take a course from VMware Education Services (or a partner).

4. Hypervisors are not enough. To round out the virtualization professional (and this includes non-VMware virtualization), other core technologies such as storage and networking are critical. Traditional IT professionals could get by with core competencies such as Windows server technologies or Linux server skills. With virtualization, you cannot put enough emphasis on storage. The more you can be a storage professional with virtualization, the more you can set yourself apart from the rest of the VCPs in the field. Networking carries the same torch, though to a lesser degree than storage.

5. Don't be intimidated by the VCP test. If you are considering the VCP credential, by all means go for it. Virtualization is one of the hottest places to be, and I'll always feel we can have one more in this party. Besides, there are also plenty of new markets for virtualization. I think there is a market for the very small business, especially as blended cloud/virtualization solutions become more popular.

Are you considering the VCP credential? Share your comments here.

Posted by Rick Vanover on 02/11/2010 at 12:47 PM

When it comes to administering and designing a virtualized infrastructure, storage is one of the cornerstones of a successful design. Provisioning storage is also a mixed bag: best practices can vary greatly depending on the storage in use.

My private lab has a storage system with a number of iSCSI LUNs available to a number of ESXi hosts. Some of the ESXi hosts are themselves virtual machines, running nested on the host through the guest backdoor. I have one LUN for the ESXi host installed on my primary server, and a number of LUNs allocated for test purposes. Fig. 1 shows an ESXi host (that is itself a guest VM) connected to the iSCSI network that shares all of these LUNs.

Figure 1. The LUNs visible to this host are shown in the host's storage configuration panel.

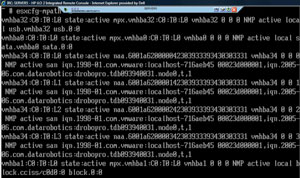

The question is, how do you know which LUN is which? One way is to expand the name column, as I've done in Fig. 1. This shows the name of the LUN as it is presented from the storage system. If you jump to the ESXi command line, you can enter this command to see this across all storage adapters:

esxcfg-mpath -L

This shows additional information, but the full multipathing configuration it lists is a little more cryptic to decipher. Fig. 2 shows this command run on the ESXi console.

Figure 2. The listing of paths has all of the information about each target.

That's all fine and good, but how do we know which LUN is which? Well, that too depends on the storage system. You can easily tell them apart by size, if you make them different sizes. I prefer to make everything a uniform size myself. So how do you tell? In the examples below, the LUN identifier number, or serial number, is revealed in each entry:

naa.6001a620000142303933393430303331

In the string above, the “1” in the twelfth hex digit after “naa.” (the last digit of “6001a6200001”) indicates it is LUN 1. There is a LUN 0 (the 2 TB LUN) and two other 1024 GB LUNs, identified as LUN 2 and LUN 3, whose identifier strings differ only in that digit:

naa.6001a620000242303933393430303331

naa.6001a620000342303933393430303331

This can be a good springboard for rolling the LUN identifier into the VMFS datastore name to avoid confusion down the road.
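Following that idea, the sketch below pulls the LUN number out of an NAA identifier and builds a datastore name from it. It assumes, based only on the three DroboPro examples above, that hex digits 9 through 12 after `naa.` hold the LUN number (`0001`, `0002`, `0003`); only the last of those digits is confirmed by the examples, other arrays encode their identifiers differently, and the `drobo-lunNN` naming pattern is just a hypothetical convention.

```python
import re

NAA_RE = re.compile(r"^naa\.([0-9a-f]{32})$", re.IGNORECASE)


def drobo_lun_number(naa_id: str) -> int:
    """LUN number from a DroboPro-style NAA ID.

    Assumption drawn only from the examples in this post: hex digits 9-12
    after 'naa.' ('0001', '0002', '0003') carry the LUN number.
    """
    match = NAA_RE.match(naa_id)
    if match is None:
        raise ValueError(f"not a 32-hex-digit NAA identifier: {naa_id!r}")
    return int(match.group(1)[8:12], 16)


def datastore_name(prefix: str, naa_id: str) -> str:
    """Build a datastore name such as 'drobo-lun01' (hypothetical convention)."""
    return f"{prefix}-lun{drobo_lun_number(naa_id):02d}"


for naa in ("naa.6001a620000142303933393430303331",
            "naa.6001a620000242303933393430303331",
            "naa.6001a620000342303933393430303331"):
    print(naa, "->", datastore_name("drobo", naa))
```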

How do you distinguish LUNs from each other when it comes to naming the datastores? Share your comments below.

Posted by Rick Vanover on 02/09/2010 at 12:47 PM

I've come to really like the distributed virtual switch feature of VMware's vSphere 4. The vNetwork Distributed Switch, as it is officially called, is a great way to standardize how hosts are provisioned for network configuration as well as ensure consistent monitoring of guest VMs in a distributed network.

In a previous post, I showed how you can reset a host if the distributed virtual switch configuration orphans it. Hosts can be orphaned through an incorrect VLAN assignment or the wrong network interface designation for the distributed virtual switch.

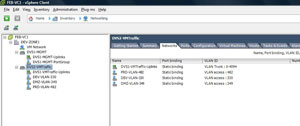

I've come to feel that the safest way to provision the environment is to configure two or more distributed virtual switches. This creates a natural security zone separation between management and guest virtual machine networking. This separation at the distributed virtual switch level would also be a full separation by cables (as well as VLANs). The guest networking port groups would be on a separate distributed virtual switch and use separate physical interfaces (see Fig. 1 for an example).

Figure 1. Two distributed virtual switches give a natural separation that follows physical media separation, as well as protection against reconfiguration issues.

There are quite a few questions floating around about whether the distributed virtual switch is ready for production; virtualization expert Mike Laverick outlines a number of them in this post. My practice going forward will be to use them for all roles, including the service console or ESXi's vmkernel management. The natural separation of management (including vMotion) and guest networking will mean at least two distributed virtual switches in most situations. If a distributed virtual switch becomes misconfigured and orphans a host, it can be reconfigured on the fly without affecting guest networking, which would not be the case if everything were stacked on the same virtual switch.

This really ends up matching the practice done in the standard virtual switch and port group world, making troubleshooting and logical separation intuitive.
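The separation described above can be checked mechanically against an inventory. The dictionary layout, switch names, port group names and VLAN IDs below are hypothetical (in practice you would pull them from vCenter); the check flags VLANs shared between switches and any switch that mixes management and guest port groups.

```python
from itertools import combinations

# Hypothetical inventory: dvSwitch -> VLAN IDs and port groups it carries.
DVSWITCHES = {
    "dvS-Management": {"vlans": {10, 11}, "portgroups": {"Management", "vMotion"}},
    "dvS-Guests": {"vlans": {100, 200}, "portgroups": {"Servers", "Desktops"}},
}
MANAGEMENT_PORTGROUPS = {"Management", "vMotion"}


def separation_problems(switches, mgmt=MANAGEMENT_PORTGROUPS):
    """Flag VLANs shared across switches and switches mixing management/guest roles."""
    problems = []
    for (name_a, a), (name_b, b) in combinations(switches.items(), 2):
        shared = a["vlans"] & b["vlans"]
        if shared:
            problems.append(f"{name_a} and {name_b} share VLANs {sorted(shared)}")
    for name, sw in switches.items():
        has_mgmt = bool(sw["portgroups"] & mgmt)
        has_guest = bool(sw["portgroups"] - mgmt)
        if has_mgmt and has_guest:
            problems.append(f"{name} mixes management and guest port groups")
    return problems


print(separation_problems(DVSWITCHES))  # [] for the layout above
```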

Where are you on the distributed virtual switch? How have you separated or organized them with all of the vSphere roles? Share your comments here.

Posted by Rick Vanover on 02/04/2010 at 12:47 PM

I've come across some systems that for one reason or another can't run VMware Tools. This can be due to support reasons, an operating system that doesn't officially have VMware support from the version of the hypervisor or family of operating systems, an appliance that is designed to run on a physical system but doesn't offer virtualization support, or it simply won't install on that system without something else going awry. (While this does not happen frequently, usually the vendor or application owner's response is "The application isn't supported yet with virtualization" or worse, I have to explain what virtualization is.)

As an administrator, you'll know if something will or won't work as a virtual machine. Virtual machines are a wonderful way to tuck away previous systems to use as an archive or inquiry-only role. In these situations, VMware Tools is not there to provide the necessary interaction between the guest operating system and the hypervisor.

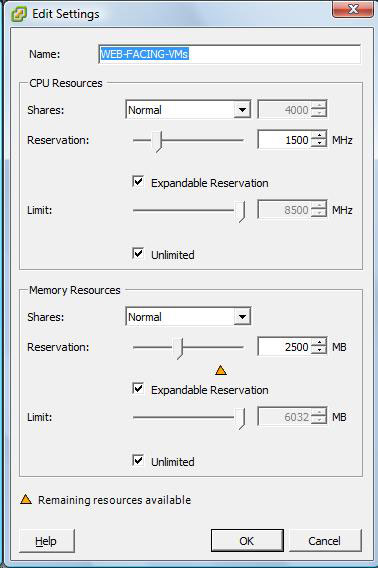

I've also seen some unexplained behavior when resources are moved around on the host due to a busy compute and memory environment. The workaround in this situation is to assign a reservation. I usually don't use reservations, as they typically waste compute and memory resources. But if you must, you can set them on licensed vSphere and VI3 installations as well as the free ESXi hypervisor (see Fig. 1).

Figure 1. The resource reservations are shown for both memory and processor categories.

If you see the reservation increase (enabled by the expandable option), you know that you have allocated too little and the resource scheduler may increase what is assigned to this category. This can be done for a resource pool, an individual VM or a vApp in vSphere. Again, I only assign reservations when a situation requires it -- lack of VMware Tools is one case where it makes sense.
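The expandable option lets a resource pool borrow unreserved capacity from its parent when its own reservation runs short. This toy admission check models that behavior for CPU (MHz) only; it is a simplification for illustration, not the actual vSphere admission-control algorithm, and the class and pool names are hypothetical.

```python
class ResourcePool:
    """Toy CPU reservation model with an expandable option (illustrative only)."""

    def __init__(self, name, reservation_mhz, expandable=False, parent=None):
        self.name = name
        self.reservation_mhz = reservation_mhz
        self.expandable = expandable
        self.parent = parent
        self.borrowed_mhz = 0  # capacity taken from the parent via expansion
        self.granted_mhz = 0   # reservations handed out to child pools or VMs

    def unreserved_mhz(self):
        return self.reservation_mhz + self.borrowed_mhz - self.granted_mhz

    def reserve(self, amount_mhz):
        """Admit a reservation, borrowing any shortfall from the parent if expandable."""
        shortfall = amount_mhz - self.unreserved_mhz()
        if shortfall > 0:
            if not (self.expandable and self.parent and self.parent.reserve(shortfall)):
                return False
            self.borrowed_mhz += shortfall
        self.granted_mhz += amount_mhz
        return True


root = ResourcePool("host", 10_000)
lab = ResourcePool("lab", 2_000, expandable=True, parent=root)
fixed = ResourcePool("fixed", 1_000, parent=root)

print(lab.reserve(1_500), lab.reserve(1_000), lab.borrowed_mhz)  # True True 500
print(fixed.reserve(1_500))                                      # False
```

The growing `borrowed_mhz` value corresponds to the reservation increase described above: a sign that the pool's own reservation was set too low.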

Do you have systems without VMware Tools? How do you plan on resource allocation beyond the base virtual machine provisioning process? Send me e-mail (preferred) or comment here.

Posted by Rick Vanover on 02/02/2010 at 12:47 PM

Thin-provisioning, a great feature for virtual machines, consumes only the disk space actually used rather than the full allocation. I frequently refer to the Windows pie graph that displays used and free space as a good way to explain what thin-provisioned virtual disk files “cost” on disk. All major hypervisors support it, and there is somewhat of a debate over whether the storage system or the virtualization engine should manage thin-provisioning.
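The pie-graph idea translates directly into arithmetic: thin disks consume only their used space, but the datastore is exposed to their full provisioned size. This sketch, with hypothetical disk names and sizes, reports used and free space plus the overcommit ratio (provisioned divided by capacity):

```python
from dataclasses import dataclass


@dataclass
class VirtualDisk:
    name: str
    provisioned_gb: float  # size the guest sees
    used_gb: float         # space a thin disk actually consumes


def datastore_report(disks, capacity_gb):
    """Summarize thin-provisioned consumption and exposure on one datastore."""
    provisioned = sum(d.provisioned_gb for d in disks)
    used = sum(d.used_gb for d in disks)
    return {
        "provisioned_gb": provisioned,
        "used_gb": used,
        "free_gb": capacity_gb - used,
        "overcommit_ratio": round(provisioned / capacity_gb, 2),
    }


disks = [
    VirtualDisk("web01.vmdk", 40, 12),
    VirtualDisk("sql01.vmdk", 200, 85),
    VirtualDisk("file01.vmdk", 500, 310),
]
print(datastore_report(disks, capacity_gb=500))
```

A ratio above 1.0 means the datastore would fill up before every disk reached its full allocation, so free space needs watching.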

On the other hand, many storage systems used in virtualization environments support thin-provisioned volumes. This effectively aggregates the same benefit across many virtual machines. For a volume presented directly to a physical server, thin-provisioned disks can be used the same way. Most mainstream storage products with decent management now support thin-provisioning of virtual machine storage types, primarily VMware's vStorage VMFS and the Windows NTFS file system. NTFS is probably marginally better supported simply because Windows has broader support. A thin-provisioned volume on the storage system is effectively VMFS- or NTFS-aware about what is going on inside it. This means that it knows whether a .VMDK file is using its full allocation.

The prevailing thought is to have the storage system perform thin-provisioning for virtualization volumes. There is an unquantified amount of overhead that may be associated with a write operation that extends the file. You can hedge against this by using the 8 MB block size on your VMFS volumes, due to its built-in efficiencies, or by allocating a higher-performance tier of disk. When the storage system manages thin-provisioning, you're relying on the disk controllers to manage that dynamic growth, with direct access to write cache. What probably makes the least sense is to thin-provision in both layers: the disk savings would be minimal, yet overhead would increase.
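The 8 MB block size advice comes down to how often a thin disk has to allocate. On VMFS-3, growth claims whole blocks, so a larger block size means fewer allocation events (each requiring a VMFS metadata update) for the same growth, and it also raises the maximum file size. The sketch counts allocations for 10 GB of growth; it does not model what each allocation actually costs.

```python
import math

# VMFS-3 block sizes (MB) and the approximate maximum file size (GB) each allows.
VMFS3_MAX_FILE_GB = {1: 256, 2: 512, 4: 1024, 8: 2048}


def allocations_to_grow(growth_gb, block_mb):
    """Number of new blocks a thin disk must claim to grow by growth_gb."""
    return math.ceil(growth_gb * 1024 / block_mb)


for block_mb, max_gb in VMFS3_MAX_FILE_GB.items():
    print(f"{block_mb} MB blocks: max file ~{max_gb} GB, "
          f"{allocations_to_grow(10, block_mb):>5} allocations to grow by 10 GB")
```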

How do you approach thin-provisioning? Share your comments below.

Posted by Rick Vanover on 01/27/2010 at 12:47 PM