News

Machine Learning Security Paper Unveils 'Uncomfortable Truths'

With all the talk about ransomware and malware and so on, one area of cybersecurity you don't hear as much about is machine learning, a situation addressed by a new research white paper titled "Practical Attacks on Machine Learning Systems."

Authored by Chris Anley, chief scientist at NCC Group, the research ultimately seeks in part to help enable more effective security auditing and security-focused code reviews of ML systems.

Specifically, it distils some of the most common security issues encountered in ML systems from a traditional persepective along with a more contemporary cloud, container and app perspective. The paper also can help security practitioners by explaining several attack categories specific to ML systems, from the black-box perspective typical of security assessments.

"Since approximately 2015 we have seen a sharp upturn in the number of organizations creating and deploying Machine Learning (ML) systems," the paper said. "This has coincided with an equally sharp increase in the number of organizations moving critical systems into cloud platforms, the rise of mobile applications and containerized architectures, all of which significantly change the security properties of the applications we trust every day."

The 48-page paper, as is typical with scientific research, contains many highly detailed facts, figures, code samples and so on, but also listed several "uncomfortable truths" gleaned from reference resources:

- Training an ML system using sensitive data appears to be fundamentally unsafe, in the sense that the data used to train a system can often be recovered by attackers in a variety of ways (Membership Inference, Model Inversion, Model Theft, Training Data Extraction)

- Neural Network classifiers appear to be "brittle," in the sense that they can be easily forced into misclassifications by adversarial perturbation attacks. While countermeasures exist, these countermeasures are reported to reduce accuracy and may render the system more vulnerable to other forms of attack

- High-fidelity copies of trained models can be extracted by remote attackers by exercising the model and observing the results

"While exploiting these issues is not always possible due to various mitigations that may be in place, these new forms of attack have been demonstrated and are certainly viable in practical scenarios" the paper said.

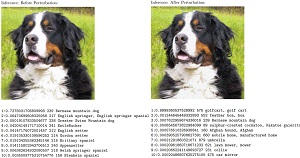

The second item in the above bullet list, adversarial perturbation/misclassification, affects ML classifiers used in scenarios like identifying the subject of a photo. Using this method, actors can craft an input (by introducing noise to an image, for example) to make an ML system return whatever decision they want. The paper includes an example where a dog can be misclassified as a golf cart, and other incorrect weighted inferences.

[Click on image for larger view.] Turning a Dog into a Golf Cart (source: NCC Group).

[Click on image for larger view.] Turning a Dog into a Golf Cart (source: NCC Group).

While that may seem a trivial example, the technique has real-world implications such as meeting some confidence criteria in a class in a system designed to achieve a security result, with these examples provided:

- Authentication systems (we maximize confidence in some class, causing us to "pass" an auth check)

- Malware filters (we reduce confidence by mutating malware to bypass detection, or deliberately provoke a false-positive)

- Obscenity filters. We might be testing these, and wish to either deliberately trigger or bypass them

- Physical domain attacks, e.g. examples in the literature include causing a stop sign to be recognized as a 45 mph speed limit sign, a 3d printed turtle as a gun, or disguising individuals or groups from facial recognition systems

A model inversion attack, meanwhile, mentioned above in the context of training with sensitive data, can allow such data in the training set to be obtained by an attacker, who could supply inputs and note the corresponding output.

The paper described model inversion as: "A form of attack whereby sensitive data is extracted from a trained model, by repeatedly submitting input data, observing the confidence level of the response, and repeating with slightly modified input data ("Hill Climbing"). The extracted data is typically the "essence" of some class, i.e. a combination of features and training inputs, which may not constitute an easily usable "data leak", although in some cases (e.g. fingerprints, signatures, facial recognition) the number of input training cases may be small enough for the extracted data to be usable. The extracted data is certainly useful in terms of representing an "ideal" (high confidence) instance of the class, and so may be used in the generation of adversarial inputs."

Untrusted models that contain malicious, executable code could also leak sensitive input/output to a third party. Models containing executable code can arise for several reasons:

- The methods used to save and load objects explicitly allow the persistence of executable code

- The algorithms themselves explicitly allow customization with arbitrary code, for example user-specified functions or function calls, forming layers in a neural network

- The persistence libraries or surrounding framework may accidentally expose methods allowing arbitrary code to be present in models

- It is convenient to be able to save the state of a training run in progress and resume it at some later time; in several cases, this mechanism allows executable code to be persisted

"In this attack, the system either allows the user to select a model to be loaded in the context of inference infrastructure, or has some flaw allowing arbitrary models to be loaded," the paper said. "This results in arbitrary code execution within the context of the targeted system. The malicious code can perform any action the attacker wishes within the context of the inference system, for example accessing other resources, such as training data or sensitive files, changing the behavior of the system."

The third "uncomfortable truth," concerning high-fidelity copies of trained models being extracted by remote attackers who exercise the model to observe the results, is called "high fidelity copy by inference" in the paper.

"In some cases, the attacker can obtain a very high-fidelity copy of the target model, for example in regression, decision tree or random forest algorithms, the underlying structure of the system allows a perfect or near-perfect copy to be created," the paper said "Even in neural network systems, a near-perfect copy is sometimes possible, although there are considerable constraints in the neural network case; parameters are typically initialized to random values, and nondeterminism is present in various parts of the training process, for example in the behavior of GPUs."

While much of the above discusses new forms of attacks specific to ML systems, those same systems can remain vulnerable to tried-and-true existing attacks found in all other areas of IT. "These alarming new forms of attack supplement the 'traditional 'forms of attack that still apply to all ML systems; they are still subject to infrastructure issues, web application bugs and the wide variety of other problems, both old and new, that modern cloud-based systems often suffer," the paper said.

About the Author

David Ramel is an editor and writer for Converge360.