
Microsoft 365 Copilot Data Governance

The hype around generative AI and large language models (LLMs) seems to be settling down slightly, as we all collectively learn how to actually incorporate them in business processes and find out where they're useful (and not).

Since releasing M365 Copilot at last year's Ignite conference, Microsoft has been hard at work adding even more governance and data security features. As a longtime Microsoft technology watcher, I've found it interesting to see how seriously they've taken governance and control: those features were there on day one and have grown considerably in scope and power since. For many other new features in Azure and Microsoft 365, security and administrative controls arrive after the feature ships, sometimes much later. The difference makes sense, though: for Microsoft to sell every business on the power of M365 Copilot and drive adoption, it must carefully manage concerns about data oversharing and governance.

In this article I'll look at Microsoft Purview: what it is, how it's evolved over the last couple of years and how it helps with governing data in general, and generative AI data specifically. This includes announcements from Ignite 2024. I wrote about data governance for Copilot almost exactly a year ago, covering some of the basics that I won't repeat here.

What is Purview?

There are three members in the Microsoft governance "family": Entra ID for identity, Priva for privacy and Purview for data governance. Like so many other cloud services, if you looked at these a year or two ago, you might have come away with the impression that a lot was missing, but given the frantic pace of new features, that's no longer the case.

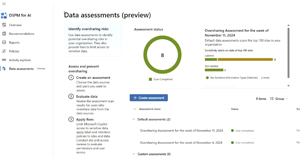

DSPM for AI in the Purview Portal

Purview is receiving a lot of attention from Microsoft, for good reason. Data security and governance are increasingly important areas for businesses of all sizes, with or without Copilot in the picture.

The solutions available in Purview are:

- Audit

- Communication Compliance

- Compliance Manager

- Data Lifecycle Management (DLM)

- Data Loss Prevention (DLP)

- Data Security Posture Management (DSPM) for AI

- eDiscovery

- Information Barriers

- Information Protection

- Insider Risk Management (IRM)

- Records Management

Taking full advantage of most of these requires M365 E5 or E5 Compliance licensing. The legacy portal at https://compliance.microsoft.com has just been retired in favour of the new Purview portal. I like the new portal because you add only the solutions you use from a long list, which keeps the UI uncluttered.

Briefly, Audit is the traditional unified audit plane in M365, tracking actions in all Office applications, the Microsoft Graph API and much more; it's where you search for specific events. Communication Compliance lets you set up policies for email and Teams communications (plus third-party platforms) and flag or block inappropriate or sensitive messages. Compliance Manager is a comprehensive platform for managing regulatory compliance across nearly 400 different regulations from all over the world, tracking the actions Microsoft has taken on your behalf in M365 and listing the tasks you need to complete before the auditors knock on the door.

Data Lifecycle Management lets you configure retention labels and policies for Exchange Online and SharePoint / OneDrive data so that you keep only what you need for business and regulatory reasons. Data Loss Prevention blocks or warns users when they share or handle sensitive data in a risky manner. eDiscovery does what it says on the tin: it lets you search through all the data stored in M365, perhaps in response to a legal request from an external entity or as part of a data breach investigation. Information Barriers addresses very specific legal requirements, for example in organizations where the trading team can't communicate with the investment team. Information Protection scans the content of documents, identifies sensitive data using built-in or custom methods, labels documents and applies policies that restrict permissions or encrypt the document for enhanced protection.

Insider Risk Management (IRM) tracks user activity over time (anonymously) and uses machine learning to correlate signals and assign a minor, moderate or elevated risk status based on policies you define. IRM also lets you build cases for the legal department to take over when significant signals indicate a malicious insider. The IRM risk levels can also drive adaptive protection in DLP: for example, a user with elevated risk can be prevented from copying sensitive documents to external USB storage, while a user with minor risk can still do so, so productivity isn't impeded. Records Management is for documents such as signed legal contracts that must be kept for a certain number of years and must not be altered during that period.
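To make the adaptive protection idea concrete, here is a minimal Python sketch of the decision logic, assuming a hypothetical `InsiderRiskLevel` enum and a hypothetical `usb_copy_action` function. Real adaptive protection is configured as DLP and IRM policies in the Purview portal, not written as code; the "warn" outcome for moderate risk is purely an illustrative assumption, since the text only contrasts elevated and minor risk.

```python
from enum import IntEnum


class InsiderRiskLevel(IntEnum):
    """Hypothetical stand-in for IRM risk levels, ordered by severity."""
    NONE = 0
    MINOR = 1
    MODERATE = 2
    ELEVATED = 3


def usb_copy_action(risk: InsiderRiskLevel, file_is_sensitive: bool) -> str:
    """Return an illustrative DLP action for copying a file to USB storage."""
    if not file_is_sensitive:
        return "allow"  # non-sensitive content is never restricted here
    if risk >= InsiderRiskLevel.ELEVATED:
        return "block"  # elevated-risk users can't copy sensitive files
    if risk == InsiderRiskLevel.MODERATE:
        return "warn"   # assumption: a middle tier, e.g. block with override
    return "allow"      # minor risk: productivity isn't impeded


if __name__ == "__main__":
    for level in InsiderRiskLevel:
        print(level.name, usb_copy_action(level, file_is_sensitive=True))
```

The point of keying the action on a risk level rather than on a named user is that the same policy tightens automatically as IRM raises someone's risk, and relaxes again when it drops.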

Data Security Posture Management (DSPM) for AI, previously known as AI Hub, is the new kid on the block and is covered below.

Microsoft 365 Copilot -- the World's Greatest Data Bloodhound

The big challenge is that M365 Copilot can access all the documents the user can access and can base its answers to prompts on all of that information. Consider an organization with lax data governance, where end users can create SharePoint sites at will, no sensitivity labels are applied (either automatically or manually), no retention is configured (so very old data can be used to answer prompts) and sharing of these sites is liberal: the potential for inadvertent data exposure through Copilot is huge. This is called oversharing, and it's one of the main concerns of businesses deploying M365 Copilot.

There are five common causes of oversharing in SharePoint (which is also the underlying document storage for Teams and OneDrive for Business):

- Site privacy set to public

- Default sharing option is everyone

- Broken permission inheritance

- Use of Everyone Except External Users (EEEU)

- Sites and files without sensitivity labels

When you create a SharePoint site and set it to Public, by default anyone in the organization can access it, whereas a Private site requires you to be a member. Sharing links (which are so convenient!) might default to "everyone," which again includes everyone in the company. It's also relatively easy for users with sufficient permissions to alter the permissions on a file or document library and make them unique, breaking the inheritance of permissions from the site itself. The problem is compounded because this isn't obvious to site owners, who have to actively investigate to discover it. When you share a site or a document, one option is "everyone except external users" (EEEU), which again gives every internal user access. And if you have no sensitivity labels or policies in place, it's harder for the Graph API and Copilot to do their work securely.
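The five causes above amount to a simple checklist. The following Python sketch applies that checklist to a hypothetical, simplified `SiteSnapshot` record; the class, its field names and the `oversharing_findings` function are all assumptions for illustration, not a SharePoint or Graph API. In practice this information comes from the DSPM for AI data assessments or SharePoint Advanced Management reports discussed later.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SiteSnapshot:
    """Hypothetical, simplified view of a SharePoint site's settings."""
    name: str
    privacy: str                  # "Public" or "Private"
    default_link_scope: str       # e.g. "Everyone" or "SpecificPeople"
    has_unique_permissions: bool  # some item has broken inheritance
    grants_eeeu: bool             # shared with Everyone Except External Users
    sensitivity_label: Optional[str] = None


def oversharing_findings(site: SiteSnapshot) -> List[str]:
    """Flag each of the five common oversharing causes for one site."""
    findings = []
    if site.privacy == "Public":
        findings.append("site privacy is Public")
    if site.default_link_scope == "Everyone":
        findings.append("default sharing link targets everyone")
    if site.has_unique_permissions:
        findings.append("permission inheritance is broken")
    if site.grants_eeeu:
        findings.append("Everyone Except External Users has access")
    if site.sensitivity_label is None:
        findings.append("no sensitivity label")
    return findings


if __name__ == "__main__":
    hr = SiteSnapshot("HR", "Public", "Everyone", True, True)
    for finding in oversharing_findings(hr):
        print(f"{hr.name}: {finding}")
```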

M365 Copilot hasn't created this problem, but years of lax data governance and overly broad permissions are being highlighted by this "data bloodhound," and security by obscurity is no longer an option.

Al MacKinnon from CDW Direct delivered an interesting session at Ignite. CDW has spoken to 10,000 organizations about M365 Copilot and deployed it for over 2,000 of them. When they surveyed these clients, 75% were concerned about their technical readiness (which included data governance) and 60% worried about user readiness.

Obviously, Microsoft has taken steps to manage these risks. Back in November 2023, the unified audit platform in M365 already included Copilot activity, which could also be found through eDiscovery searches. You could define retention policies to keep Copilot prompts and output (initially with the same retention as Teams chats; Ignite this year brought the ability to set separate retention for Teams and Copilot), and you could apply Communication Compliance policies to Copilot interactions. And if Copilot accessed documents labeled by Information Protection (that the user has legitimate access to) as part of its answer to a prompt, the output was labeled too, inheriting the most restrictive label among the source documents. So far, so good, but this doesn't really fix the problem of a poorly governed data estate being exposed by a data-hungry LLM eager to please you with its answers to your prompts.
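The "most restrictive label wins" rule can be sketched in a few lines of Python. The label names and their order below are hypothetical; in Purview the ordering comes from the label priority you configure, and the labeling itself happens inside the service, not in your code. The `inherited_label` function is an illustrative assumption.

```python
from typing import Iterable, Optional

# Hypothetical label taxonomy, ordered from least to most restrictive.
LABEL_ORDER = ["Public", "General", "Confidential", "Highly Confidential"]
RANK = {name: i for i, name in enumerate(LABEL_ORDER)}


def inherited_label(source_labels: Iterable[Optional[str]]) -> Optional[str]:
    """Return the most restrictive label among the grounding documents.

    Unlabeled sources (None) contribute nothing; if no source is labeled,
    the response gets no label. An unknown label name raises KeyError.
    """
    labeled = [lbl for lbl in source_labels if lbl is not None]
    if not labeled:
        return None
    return max(labeled, key=lambda lbl: RANK[lbl])


if __name__ == "__main__":
    print(inherited_label(["General", "Highly Confidential", "Confidential"]))
```

Note what this rule cannot do: if the sensitive source documents were never labeled, there is nothing to inherit, which is exactly the gap described in the paragraph above.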

Between then and now, Microsoft released a very coarse control: the ability to exclude selected SharePoint sites from the semantic index altogether. The problem with this approach is that it also breaks search, hindering productivity for users who have legitimate access to those sites.

Also, in May 2024 we got the preview of AI Hub (now DSPM for AI), which offered oversharing reports and showed sensitive data leakage to several different generative AI services. Just knowing which LLMs your users are using (called Shadow AI when the business hasn't sanctioned them) is the first step.

New Services Announced at Ignite 2024

The biggest announcement is DLP support for M365 Copilot interactions. You can now define DLP policies for Copilot prompts (just as you can today for SharePoint, OneDrive, third-party SaaS apps, and Windows / macOS endpoints), and if referenced documents carry a particular sensitivity label, you can block Copilot from processing them.
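Conceptually, a label-based DLP policy for Copilot acts as a filter on the documents a response may be grounded on. This Python sketch models that idea with a hypothetical `SourceDoc` record and a hypothetical `apply_copilot_dlp` function; it is not how the service is implemented or configured (policies are defined in the Purview portal), just an illustration of the effect.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class SourceDoc:
    """Hypothetical document that Copilot might ground a response on."""
    path: str
    label: Optional[str]


def apply_copilot_dlp(
    candidates: List[SourceDoc], blocked_labels: FrozenSet[str]
) -> Tuple[List[SourceDoc], List[SourceDoc]]:
    """Split candidates into (allowed, blocked) based on sensitivity label."""
    allowed: List[SourceDoc] = []
    blocked: List[SourceDoc] = []
    for doc in candidates:
        # Unlabeled documents (label None) are never matched by the policy.
        (blocked if doc.label in blocked_labels else allowed).append(doc)
    return allowed, blocked


if __name__ == "__main__":
    docs = [
        SourceDoc("board-minutes.docx", "Highly Confidential"),
        SourceDoc("team-lunch.docx", None),
    ]
    ok, denied = apply_copilot_dlp(docs, frozenset({"Highly Confidential"}))
    print([d.path for d in ok], [d.path for d in denied])
```

As the comment notes, a label-based policy only protects documents that are actually labeled, which is why the data assessments that find unlabeled sensitive content matter.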

DSPM for AI also includes data assessments, which not only evaluate oversharing and unlabeled documents containing sensitive data (useful both before and after a Copilot deployment) but also let you apply fixes.

Data Assessment Reports (Courtesy of Microsoft)

You can restrict access by applying a label to a particular item, or you can use Restricted Content Discoverability (RCD). RCD excludes a particular site from being surfaced by Search or Copilot, which makes sense in certain situations where a site contains highly sensitive data. Another option is Restricted Access Control (RAC): when you discover a site with far too lax permissions that needs serious cleanup, you can apply a RAC policy that restricts access to the site to a small group of users until the permissions have been right-sized and normal access can be restored.
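The difference between RCD and RAC is easy to blur, so here is a deliberately simplified Python model of the two controls as the paragraph above describes them. `SiteControls`, `can_open` and `surfaced_by_copilot` are hypothetical names, and the real services have more nuance than this: RAC narrows who can open the site at all, while RCD leaves access alone and only stops the site being surfaced by Search and Copilot.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass
class SiteControls:
    """Hypothetical, simplified model of a site's access controls."""
    members: FrozenSet[str]                     # users with permissions today
    rac_group: Optional[FrozenSet[str]] = None  # RAC policy group, if applied
    rcd_enabled: bool = False                   # Restricted Content Discoverability


def can_open(site: SiteControls, user: str) -> bool:
    """RAC restricts who can open the site, on top of existing permissions."""
    if user not in site.members:
        return False
    return site.rac_group is None or user in site.rac_group


def surfaced_by_copilot(site: SiteControls, user: str) -> bool:
    """RCD hides the site from Search/Copilot even for users who can open it."""
    return can_open(site, user) and not site.rcd_enabled


if __name__ == "__main__":
    site = SiteControls(members=frozenset({"ann", "bob"}), rcd_enabled=True)
    print(can_open(site, "ann"), surfaced_by_copilot(site, "ann"))
```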

Actions to Take from Data Assessment in DSPM for AI (Courtesy of Microsoft)

Obviously, businesses use LLMs other than M365 Copilot, and DSPM for AI now integrates with ChatGPT Enterprise, applying the same discovery and controls as for M365 Copilot, with support for other LLMs coming.

Risky AI usage (prompt injection or jailbreak attacks) is now also surfaced in DSPM for AI. The risky AI usage indicators are tracked for M365 Copilot, Copilot Studio (where you can build your own custom copilots) and ChatGPT Enterprise.

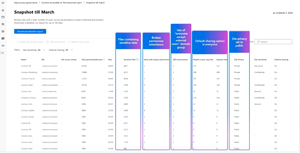

Content Accessible to Permissioned Users Report (Courtesy of Microsoft)

There are also two new risk detections in Communication Compliance: one for prompt injection attacks and one for protected material.

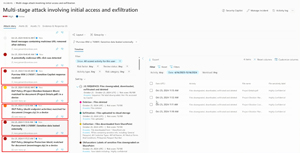

DLP and IRM Alerts as Part of an Incident in M365 XDR (courtesy of Microsoft)

Insider Risk Management (IRM) user risk levels can be used in Conditional Access policies. For example, a user with elevated risk (who has handed in their resignation, downgraded several labels and attempted to exfiltrate data to consumer cloud storage) can be denied access to a particular application, while another user with minor risk can still access it. IRM alerts now also feed into Defender XDR, creating a single pane of glass for all incidents, and are available in two new Advanced Hunting tables (DataSecurityBehaviors and DataSecurityEvents) for KQL jockeys.

Advanced Hunting KQL Query Correlating Data in Defender XDR (courtesy of Microsoft)

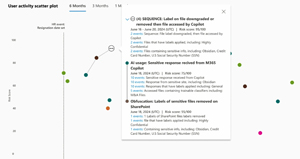

The risky AI usage signals mentioned above are now also surfaced in IRM and show up in the IRM graph.

Purview Insider Risk Management AI Activity Details (courtesy of Microsoft)

Another interesting reveal was that SharePoint Advanced Management (SAM), part of SharePoint Premium, is now included in Microsoft 365 Copilot licensing, rolling out in January 2025. This set of tools gives you a dashboard in SharePoint that identifies "problem" sites, folders and files from a sensitivity or sharing perspective and lets you manage access to them.

Permission Tracking in SharePoint Advanced Management (courtesy of Microsoft)

Reporting has also improved: coming to public preview in December 2024 is the Copilot Activity API, which lets organizations include their users' M365 Copilot activity in custom reports and dashboards.

As you can see, there are many new features, and it can be hard to know where to start, particularly if oversharing has been the name of the game in your business for many years. To help, Microsoft has put together a concise Blueprint, available in both PDF and PPTX formats.

Multicloud and Custom AI Solutions

For in-house generative AI solutions that your business might be building in Azure AI Foundry, Azure OpenAI, Azure Machine Learning or Amazon Bedrock, Defender for Cloud offers AI Security Posture Management (now generally available) to spot risky usage.

Cloud Security Explorer in Defender for Cloud for AI & ML Deployments (courtesy of Microsoft)

For your own AI services, Purview Compliance Manager includes regulatory compliance assessments for the EU AI Act, NIST AI RMF, ISO 42001 and ISO 23894.

Furthermore, Purview Unified Catalog, the new name for what was known as the Purview Data Governance solution, is also expanding its scope. It uses the same Information Protection labels, but instead of applying them to M365 documents, it applies them to structured data in databases and data lakes such as Databricks Unity Catalog, Snowflake, Google BigQuery and Amazon S3, as well as Microsoft Fabric.

Here are some breakout recordings from Ignite that dive deeper into everything related to Copilot data governance: BRK272, BRK273, BRK315, BRK318, BRK321, BRK322 and BRK327.

Conclusion

With comprehensive user training and data governance in place, Microsoft 365 Copilot does promise to improve efficiency considerably for many business operations, but this requires planning and reining in oversharing. Microsoft has shown that it's serious about providing the tools to do so; the only question is whether business leaders will provide the required resources to their IT teams.

About the Author

Paul Schnackenburg has been working in IT for nearly 30 years and has been teaching for over 20 years. He runs Expert IT Solutions, an IT consultancy in Australia. Paul focuses on cloud technologies such as Azure and Microsoft 365 and how to secure IT, whether in the cloud or on-premises. He's a frequent speaker at conferences and writes for several sites, including virtualizationreview.com. Find him at @paulschnack on Twitter or on his blog at TellITasITis.com.au.