When applications are aware of like-configured systems providing the same services, it is a beautiful thing. The most frequent example is a Web service with a number of VMs that provide the same Web content and are load balanced in some fashion. Each of these Web server VMs processes browser traffic and returns the same content, including any database or Web application work needed to deliver it to the clients.

The issue is traffic distribution. Web server engines (such as IIS or Apache) in this example cannot maintain logic to distribute traffic from one server to another. Instead, they generally rely on one of two main technologies to manage session flow. One option is to use Network Load Balancing (NLB) on Windows systems to distribute traffic between hosts for Web traffic. The other option is to use a network-based solution, such as an F5 device or a Cisco product for traffic management. I have gone each of these ways at some point in my experience, and I can say that the network solution is the way to go.

The discussion came up recently on a Twitter post that made me revisit this topic with regard to having a traffic management solution for virtual environments. For VMware installations, you can use NLB for guest VMs, but only in multicast configurations, to avoid port saturation as explained in this technote.

For the sake of being "100-percent virtual" or some other threshold that may not really be that important, having traffic management done within an NLB cluster in the virtual environment is not as robust as the alternative. The network devices will implement a virtual IP address. This tier of virtualization is delivered well through network devices and, when coupled to a DNS CNAME, makes management a snap. Sure, the costs are higher this way, but functionality is also better.
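To make the virtual IP idea concrete, here is a minimal sketch in Python of what a traffic manager does behind that address: it hands each new request to the next healthy Web server in rotation. This is a toy model and not how any F5 or Cisco device is implemented; the `VirtualIP` class, the server names and the address are all hypothetical.

```python
from itertools import cycle


class VirtualIP:
    """Toy model of a virtual IP fronting identical Web server VMs (hypothetical)."""

    def __init__(self, vip, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self.vip = vip
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._rotation = cycle(self._backends)

    def mark_down(self, backend):
        """Take a backend out of rotation, e.g. after a failed health check."""
        self._healthy.discard(backend)

    def mark_up(self, backend):
        """Return a known backend to rotation."""
        if backend in self._backends:
            self._healthy.add(backend)

    def route(self):
        """Return the next healthy backend in round-robin order."""
        if not self._healthy:
            raise RuntimeError("no healthy backends behind " + self.vip)
        # One full pass over the rotation is enough to find a healthy backend.
        for _ in range(len(self._backends)):
            candidate = next(self._rotation)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("rotation and health state are out of sync")


# Example usage with made-up names: clients only ever see the VIP.
web = VirtualIP("192.0.2.10", ["web01", "web02", "web03"])
first_four = [web.route() for _ in range(4)]
```

Clients resolve the CNAME to the virtual IP and never need to know which VM answered, which is why servers can be added or removed without touching DNS.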

Have a thought? Drop a note below or e-mail me.

Posted by Rick Vanover on 09/24/2009 at 12:47 PM | 7 comments

There is an interesting discussion developing in the blogosphere regarding storage technologies for virtual environments. I've mentioned VMware employee Eric Gray's personal blog VCritical as a good virtualization resource. Somewhat harsh, but Eric has been known to counter with the rare VCompliment from time to time. One of Eric's recent posts, "Hands off that CSV!," is about some of the intricacies of working with the Hyper-V R2 solution to shared storage.

I have long thought that vStorage VMFS is the most underrated technology VMware has ever produced. The post by Eric Gray takes aim at the Microsoft solution, Cluster Shared Volumes (CSV), which is a customized implementation of Microsoft Cluster Service (MSCS). MSCS is a veteran in the IT landscape, and is good at what it does. CSV simply shares the .VHD files as clustered resources across multiple systems, rather than the entire disk.

Eric's critiques of the CSV implementation on Hyper-V are centered on its changes to accessing the newly created volume. This includes direct access through familiar interfaces such as Windows backup or otherwise accessing the CSV data. I won't hide that I am probably the biggest fan of VMFS. So do I have to really pick a side?

I'll just leave you with this: VMFS is a highly engineered storage system, purpose-built as a clustered file system for virtual machines in enterprise-class installations, and it is available to small installations as well with the free ESXi. MSCS introduces CSV into existing functionality to provide what Microsoft touts as an equivalent offering.

Have a thought? Drop a note below or e-mail me.

Posted by Rick Vanover on 09/22/2009 at 12:47 PM | 9 comments

In the competitive spirit, a very long post comparing costs for Hyper-V R2 and VMware vSphere was recently posted by Microsoft TechNet blogger Matt McSpirit. I think the post is great. As a blogger, I was first of all quite impressed by the 5,000+ word count. That doesn't quite feel like a normal blog as it is quite a bit of reading, but it does allow a thorough explanation of the topic. Blogging can become a narrow field of vision at times, so I appreciate the time allocated to make the post and thoughts clear.

Back to the virtualization stuff. If you haven't read the post, take the time to do so. You may want to put your vacation requests in now to do it. To set the tone, know that I am a VMware guy. In most situations, VMware technologies are my virtualization platform of choice. This does not come without the occasional criticism, but for most server virtualization scenarios I find VMware virtualization the way to go. Regardless of who makes it, I never like cost model or ROI tools provided by a vendor. Every time I talk to ISVs and other vendors about how they make these tools, it is pretty clear they are made to win. So, always take their results with a grain of salt.

A recurring theme in the cost-per-application post is Hyper-V R2 being compared to this or that piece of VMware functionality. vSphere has been generally available for a few months now; once Windows Server 2008 R2 becomes generally available (scheduled for October), we can take a closer look at the things that are not listed in the cost modeling.

For VMware technologies, this includes the vNetwork Distributed Switch, host profiles, live storage migration, fault-tolerant VMs and a true clustered file system, to name just a few. The shortcomings are mentioned at the end of the post, and the strengths of the other platform are honestly acknowledged. In factoring what you get for the money, I think these should be represented.

Another topic in the post that may be misinterpreted is the use of the Windows Datacenter license, which I blogged about earlier, for use in virtual environments. Many organizations may be stuck with licensing investments that they cannot just throw away to go to datacenter licensing, so take that into consideration. If that licensing is removed from the cost comparison, Hyper-V actually has a lesser cost.

Above all else, virtualization platform selection comes down to personal preference. You can go the car-in-your-datacenter route (http://virtualizationreview.com/articles/2009/09/01/the-great-car-debate.aspx), play the dollars and cents numbers game or take a stand on platforms by being an "all Microsoft" house. While the crosstalk is good and keeps everyone on their toes, I've yet to know anyone to change virtualization platforms either way.

Have a thought? Send me an e-mail.

Posted by Rick Vanover on 09/16/2009 at 12:47 PM | 2 comments

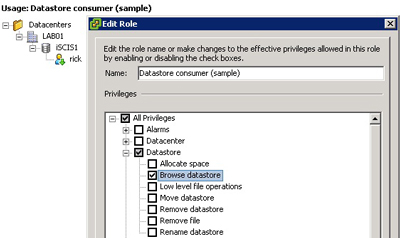

One of the new features available for vSphere is the ability to assign role-based permissions to datastores, including VMFS volumes. It is important to note that these permissions are managed exclusively by vCenter and not the filesystem. What this means is that if you access the NFS or VMFS volume outside of vCenter's access authority, these roles do not apply.

Access to VMFS volumes is managed on the volume directly, in lieu of a coordinator. In an earlier post, I outlined how VMFS volumes can easily cross management zones as part of their design. Further, VMFS-3 volumes are forward and backward compatible with VI3 and vSphere installations. This is important, as the new datastore consumer role for VMFS permissions within vCenter does not modify the volume itself or affect older installations.

The datastore consumer role can be configured to assign access and tasks per NFS or VMFS volume (see Fig. 1). This can be critical when certain volumes may require highly restricted access, yet an administrator may need virtual machine access at other levels.

Figure 1. The datastore consumer role allows configuration of privileges on the VMFS volume managed by vCenter.

The main takeaway is to be aware that if vCenter is configured for permissions to a datastore, that is where those access permissions stop. Any host account on the ESX or ESXi host can still access the filesystem when the host is zoned to the storage, whether or not the host is managed by the same vCenter Server.
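The scope of these permissions can be pictured with a small Python model. To be clear, this is not the vCenter API: the class names, privilege strings and datastore names below are all hypothetical, and the only point is that the role check lives in vCenter while host-level access depends solely on storage zoning.

```python
from dataclasses import dataclass, field


@dataclass
class VCenterModel:
    """Hypothetical stand-in for vCenter's role-based datastore permissions."""
    # Maps (user, datastore) to the set of privileges granted through a role.
    grants: dict = field(default_factory=dict)

    def assign_role(self, user, datastore, privileges):
        self.grants[(user, datastore)] = set(privileges)

    def is_allowed(self, user, datastore, privilege):
        # Requests made through vCenter are checked against the assigned role.
        return privilege in self.grants.get((user, datastore), set())


@dataclass
class HostModel:
    """Hypothetical stand-in for an ESX or ESXi host zoned to some storage."""
    zoned_datastores: set = field(default_factory=set)

    def is_allowed(self, host_account, datastore, privilege):
        # vCenter roles are never consulted here; zoning is all that matters.
        return datastore in self.zoned_datastores


vcenter = VCenterModel()
vcenter.assign_role("alice", "vmfs-prod", {"browse"})
host = HostModel({"vmfs-prod"})

through_vcenter = vcenter.is_allowed("alice", "vmfs-prod", "allocate_space")
direct_on_host = host.is_allowed("root", "vmfs-prod", "allocate_space")
```

In this model the same operation is refused through vCenter and permitted on the host, which is the gap to keep in mind when a datastore needs highly restricted access.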

Realistically speaking, managing access for aggregated storage can only be done effectively through a product such as vCenter. I still feel that vStorage VMFS is the most underrated technology that VMware has produced.

Have a thought? Send me an e-mail.

Posted by Rick Vanover on 09/14/2009 at 12:47 PM | 1 comment

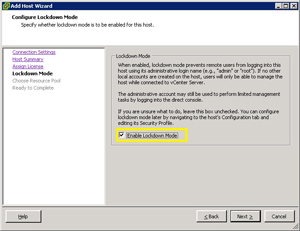

In an earlier post I mentioned that the upgrade to vSphere is the right time to make the decision between ESXi and ESX. In any experience with ESXi, you will undoubtedly notice the option in a number of places to enable ESXi Lockdown mode. Both platforms support Lockdown mode, but I want to focus on its behavior for ESXi host installations.

Lockdown mode simply removes any remote root-level access to the host through the vSphere Client. For managed installations using vSphere with vCenter, this is a safe configuration. Lockdown mode will require all communications to use the vCenter Agent on the ESXi system. When managed by vCenter, the communication between the ESXi host and vCenter uses a special user, called vpxuser. If Lockdown mode is used, the most visible indication is that you cannot log into the ESXi host via the vSphere Client directly as root. Logging into a host (whether it be ESXi or ESX) directly with the vSphere or VI Client can make sense in certain troubleshooting situations, but generally if the host is managed by vCenter all client activity should be done there. Enabling Lockdown mode would also affect any virtualization-specific tools that use the root account to access the ESXi host directly.

Lockdown mode does not, however, remove SSH access with the root account to the ESXi server if you have enabled it (see Fig. 1).

Figure 1. An ESXi host is being added with the option to enable Lockdown mode.

The next question is how Lockdown mode affects any troubleshooting efforts that virtualization administrators may need to perform. Not much, in my opinion. With physical console access via a monitor, HP iLO, Dell DRAC or other direct access to the console of the system, we are still able to access the main ESXi screen. This includes the option to restart the management network and restart the management agents. Further, the command line access is still available if needed on the ESXi host and Lockdown mode can be disabled on the fly.

Is Lockdown mode a security silver bullet? No. But it is a good practice if direct vSphere client connections should be prohibited to the hosts.

Posted by Rick Vanover on 09/10/2009 at 12:47 PM | 3 comments

Going into this year's VMworld in San Francisco, I had an open mind about the entire event with the intention that I would critique it publicly the week after. Here we are and I can say that VMworld overall was good. Before the start of the week, I was pretty sure that there was not going to be any ground-breaking announcement directly from VMware. I do, however, respect the early workings of possibly some big things with the vCloud API, client hypervisors and where the SpringSource acquisition can go with infrastructure integration.

The sessions were good for those interested in deep-down information about a specific technology. For me that included vSphere topics on performance, AppSpeed and others. The organization was good, and unlike Las Vegas last year, the surrounding area was pleasant and chic. Las Vegas is one of the places that can get weird after a bit, especially if you start to think it is real.

My main complaint is that the VMworld events in North America are limited to Western U.S. destinations. The flagship virtualization conference will return to San Francisco in 2010 before repeating in Copenhagen in October 2010 for the newly positioned VMworld Europe. For a North American venue, San Francisco makes great sense. It is close to Palo Alto, which means the VMware staff can provide good support without too much disruption from traveling. Many U.S. cities have non-stop flight service to San Francisco, which is imperative to the site selection process for an event of this size. Further, there are many low-cost flight options to the region, including into Oakland and San Jose.

From a facilities standpoint, the Moscone Center and lab arrangement at the San Francisco Marriott were okay. I thought the long walk between facilities was a minor issue, but the consensus is that it was a better arrangement than in 2007, when all events were in the Moscone Center. It was nice to walk outside a bit also for fresh air. That separation may have diluted traffic into the Solutions Exchange area, with attendees spending more time in between sessions near the lab facilities. The traffic is important to the exhibitors, as the spots are pricey.

There are plenty of other venues that could support the conference in the Eastern half of the United States. I understand why there is a home-field preference for San Francisco for logistical reasons. Yet the show has traveled in the past. A central or Eastern U.S. destination may attract a new market of attendees who may be able to carve out the staff training time and registration fees, yet can't swallow the airfare and hotel costs in some situations.

For those who could not make it to VMworld this year, I still encourage you to go for it in the future. It is a great networking event and there is a lot of good information exchanged at all levels. Share your comments below or drop me a note.

Posted by Rick Vanover on 09/08/2009 at 12:47 PM | 4 comments

Getting started on the training and certification path for vSphere is a good idea. Many IT professionals have advanced their careers with experience in virtualization, with VMware technologies being a great example where training and experience will lead to better things.

There are a number of resources and strategies to employ to be successful with the new platform. Here are some of the things that I am doing with vSphere to supplement my professional experience with the product:

Lab time: You have to devote time to learning the product in a lab. This can be your formal development equipment, or if you are serious about learning the product you could purchase a server for use at home.

Resources: A good book is a timeless resource, even for fast-moving technical topics. One of the good resources that literally just became available is Scott Lowe's new book, Mastering VMware vSphere 4. I am only a few chapters in, but the book is well written and easy to follow.

Different perspectives: One of the strongest points about virtualization is that so many solutions can be architected for specific needs. In my own development I found that I had initially learned only what I needed to know for my own requirements. I took it upon myself to broaden my VMware configurations in my self-guided training, and found that very enriching. The best example is storage. It is a good idea to work with other storage products and configurations that may be different from what you already know. For example, if your experience is limited to Fibre Channel storage, work with iSCSI products. I have used the Openfiler and NexentaStor products to provide NFS and iSCSI storage to vSphere. These can even be run as virtual machines for lab environments.

Commitment: If you want to advance on any technology, you have to want it. Most people won't develop in areas that they are not interested in.

A personal skill development plan is critical to embracing the new technology and advancing your career, if you are interested. Does vSphere offer a development opportunity for you? Share your comments below.

Posted by Rick Vanover on 09/03/2009 at 12:47 PM | 2 comments

One of the most important parts of VMware's

vSphere offering is the collective migration technology,

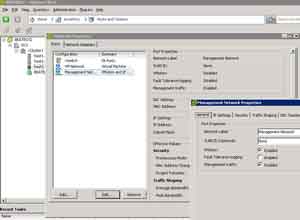

VMotion. In the current landscape, this includes migrating VMs from one host to another as well as from one storage system to another. Let's take a quick look at configuring VMotion to work for ESXi hosts in vSphere.

Like VI3, vSphere needs to have a VMkernel port configured to allow the migration technologies to proceed. For ESXi hosts, VMkernel network interfaces are installed by default but are not functional for migration tasks until they are configured. Default installations of ESXi embedded and installable will have an IP address assigned to the interface, but not until we check the box to enable VMotion for the host are we able to migrate (see Fig. 1).

Figure 1. The configuration to enable VMotion is a single check box on the properties of the host's VMkernel network interface.

Once that is configured, the host is able to use VMotion. This includes the Enhanced Storage VMotion options that allow a virtual machine to migrate from a thin-provisioned disk to a fully allocated disk, and the reverse.

From a network planning perspective, an ESXi host requires only one TCP/IP address, unlike a full installation of ESX. This is because ESXi lacks the service console or console operating system (COS), which needs its own IP address on ESX. For redundancy purposes, make sure that at least two interfaces (ideally on different physical switches) are provisioned to the vSwitch that holds the VMkernel port.
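The redundancy guideline above is easy to turn into a quick planning check. The following Python sketch is only an illustration: the `Uplink` record, the function name and the switch labels are hypothetical, and nothing here queries a real host.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Uplink:
    nic: str              # Physical NIC on the host, e.g. "vmnic0"
    physical_switch: str  # Label for the upstream physical switch


def check_vmkernel_redundancy(uplinks):
    """Return (ok, message) for the uplinks on the vSwitch holding the VMkernel port."""
    if len(uplinks) < 2:
        return False, "fewer than two uplinks: no redundancy"
    switches = {u.physical_switch for u in uplinks}
    if len(switches) < 2:
        # Meets the minimum, but a single switch failure still cuts off VMotion.
        return True, "two or more uplinks, but all on one physical switch"
    return True, "two or more uplinks across different physical switches"


planned = [Uplink("vmnic0", "switch-a"), Uplink("vmnic1", "switch-b")]
ok, message = check_vmkernel_redundancy(planned)
```

Running a check like this against a planning spreadsheet before deployment is cheaper than discovering a single point of failure during a switch outage.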

VMware is aligning resources and direction to the ESXi model. As you are planning your migration to vSphere, I highly recommend that you start this implementation exclusively with ESXi for reasons that I point out in this earlier post. Should be easy enough to do in your sleep!

Posted by Rick Vanover on 09/01/2009 at 12:47 PM | 6 comments

VMworld is an interesting beast. Trying to explain it to someone outside of virtualization is more difficult than it seems. It isn't really training, it isn't entirely a trade show; it is more than just networking, yet it isn't like most other events. I had the opportunity to attend last year in Las Vegas and will be at this year's event in San Francisco next week.

VMworld is also over-stimulating, as each day is jam-packed with events. My head hurt after last year's show. There will be a flood of news and Twitter feeds of VMworld-related events. Here are some good feeds to follow as VMworld happens:

http://twitter.com/jtroyer -- John Troyer is a virtualization machine. As VMware communities manager, he has many relevant tweets to VMware and VMworld.

http://twitter.com/vmworld -- The official VMworld Twitter feed will have lots of practical information as well as events as they occur.

http://twitter.com/#search?q=%23VMworld -- The #VMworld trending topic will surely be flooded with posts from everyone next week.

http://twitter.com/RickVanover -- It's me! But, I'm going to try something different with my tweets next week. I'm going to give you a different perspective of the event from the attendee's perspective. I can't exactly tell you what that will be, so you'll have to check the tweets as they're pushed out.

Next week, you'll also see special editions of the VirtualizationReview.com newsletter, which will pack as much information as possible from the show.

I am excited for VMworld to start, but also, in a way, I'm ready for it to be over. My schedule is entirely booked for Tuesday and Wednesday, which are the only two days that I will be in San Francisco. While the event is only a few days, the amount of work that goes into planning an event like this is tremendous and I, for one, appreciate all the hard work that many people behind the scenes put in to make it a very organized event. Snags happen also, as was the case with the infamous LAB04 session earlier in the week.

Have a comment on VMworld? Send me a note or leave a comment below.

Posted by Rick Vanover on 08/27/2009 at 12:47 PM | 2 comments

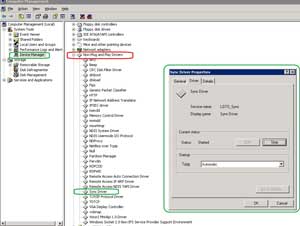

For VMs running on ESX and ESXi version 4, there's new functionality for creating snapshots. Generally speaking, I prefer not to have user-created VM snapshots in production-class server consolidation environments, as I believe they can become unmanageable.

Snapshots are, however, critical to functions like virtualization-centric backup solutions and use in test or development capacities. vSphere's offering of quiesced snapshots allows a VM to be in a more consistent state during the snapshot.

A quiesced snapshot simply gets the VM quiet so that a better snapshot can be performed. A quiesced snapshot doesn't shut down or reboot the guest. VMware Tools is a critical component of a quiesced snapshot with the Sync driver. The Sync driver is installed with VMware Tools, but is a separate service called "Sync Driver." The Sync driver shows up in Windows logs as "LGTO_SYNC" (see Fig. 1).

Figure 1. The VMware Tools Sync driver enables quiesced snapshots, as shown in the Sync Driver entry of this Windows Server 2003 guest.

While the Sync driver is critical to better snapshots, certain configurations cannot run a quiesced snapshot with vSphere. Windows Vista as a guest VM cannot run a quiesced snapshot as outlined in this VMware KB article.

Quiesced snapshots are not exactly new for ESX and ESXi, as version 3.5 added the Sync Driver. What vSphere adds is the option to explicitly create a quiesced snapshot within the vSphere Client.
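The same request can also be scripted. The sketch below assumes the pyVmomi Python bindings for the vSphere API, which are not mentioned in this post, and assumes that `vm` is a `vim.VirtualMachine` object you have already looked up through a connected session. The helper name is my own; the call it wraps is the API's `CreateSnapshot_Task` method with its `quiesce` flag.

```python
def create_quiesced_snapshot(vm, name, description=""):
    """Request a quiesced, disk-only snapshot and return the resulting task.

    `vm` is assumed to be a pyVmomi vim.VirtualMachine obtained elsewhere.
    Quiescing relies on VMware Tools running in the guest.
    """
    return vm.CreateSnapshot_Task(
        name=name,
        description=description,
        memory=False,   # Do not capture guest memory in the snapshot.
        quiesce=True,   # Ask VMware Tools to quiesce the guest file system.
    )
```

The call returns a task object rather than waiting for the snapshot to finish, so a real script would need to watch that task for completion or errors.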

Posted by Rick Vanover on 08/24/2009 at 12:47 PM | 1 comment

Earlier in the year, I wrote about the PlateSpin Forge disaster recovery appliance. Forge is unique, as it leverages PlateSpin's sophisticated conversion technologies to replicate workloads to an ESX server running locally on a dedicated piece of hardware with its own management.

PlateSpin has updated Forge to version 2.5. Functionality enhancements include:

- Additional operating system support: Windows Server 2008 and Vista have been added as protected workloads, as have 64-bit editions of Windows Server 2003.

- Physical machine server sync: Forge can manage workload protection to physical servers.

- Long replication support: One of the prior limitations of Forge was that the initial replication needed to complete within 24 hours. They removed that limitation with version 2.5. This is targeted for large protected workloads or slow network links.

- Additional management and performance: Support for multiple tenants on a single appliance, role-based access, improved synchronization performance and increased functionality to accommodate moving the appliance.

Recently at TechMentor Orlando, I discussed Forge with a number of small- and medium-sized organizations that were interested in the product. It is a compelling offering for a single disaster recovery solution for up to 25 protected workloads.

More information can be found at the PlateSpin Web site.

Posted by Rick Vanover on 08/19/2009 at 12:47 PM | 2 comments

Early last week, I mentioned that I didn't care too much about the race between VMware and Microsoft that's getting hot. The heated discussions associated with platform evangelism generally make my eyes roll, mainly because I am sure of where I am in my virtualization practice.

Just this week, I received a series of questions asking where Microsoft's Hyper-V R2 and SCVMM R2 offering is compared to VMware from a competitive standpoint. My answers were probably not very nice. I'll work on that.

There is one discussion that caught my eye in the blogosphere, however, that starts with this TechNet blog. There, a good discussion has been raised about the host patching burden. Then one of my peers in the community, Maish Saidel-Keesing, responded with a post on his site.

One thing I have learned when making direct comparisons between virtualization platforms is that there is no way to make both sides happy. Jeff's post on TechNet and Maish's independent response point out that how you tell a story can differ greatly depending on your perspective.

Back to the point on host patching. Privately, I've thought for a long time that the host patching burden would be the biggest curve ball I would ever have if I were a Hyper-V administrator. Sure, migration, scripting and other factors would accommodate the actual practice points of host patching. One thing I like about VMware in this scenario is that the management server is the end-all for the host, meaning that the hosts are disposable in a way. I don't have to worry about domain membership, activation (MAK/KMS), and updates from base product on the redeployment. Therefore in a way, ESX and ESXi are a simpler solution, based on my experience and comfort level.

Host patching and its associated practice can easily fall into a heated religious debate. Share your comments on host patching below.

Posted by Rick Vanover on 08/18/2009 at 12:47 PM | 2 comments