One of the great things about virtualization is that it is such a flexible platform that we can change our minds on almost anything. But that's not to say there aren't some things that we just shouldn't do -- for examples, see my "5 Things To Not Do with vSphere." One of those areas is storage, and in a way I'm torn as to whether a broad recommendation makes sense for expanding VMFS volumes.

Don't get me wrong. The capability to expand volumes is clearly a built-in function of vSphere. Since vSphere 4, it's been a lot easier to do, and you don't have to rely on VMFS extents. It's also pretty clear that you should avoid extents today, even though they are still possible. So, when it comes to expanding a VMFS datastore, the big thing to determine is whether the storage system will expand the volume in a clean fashion.

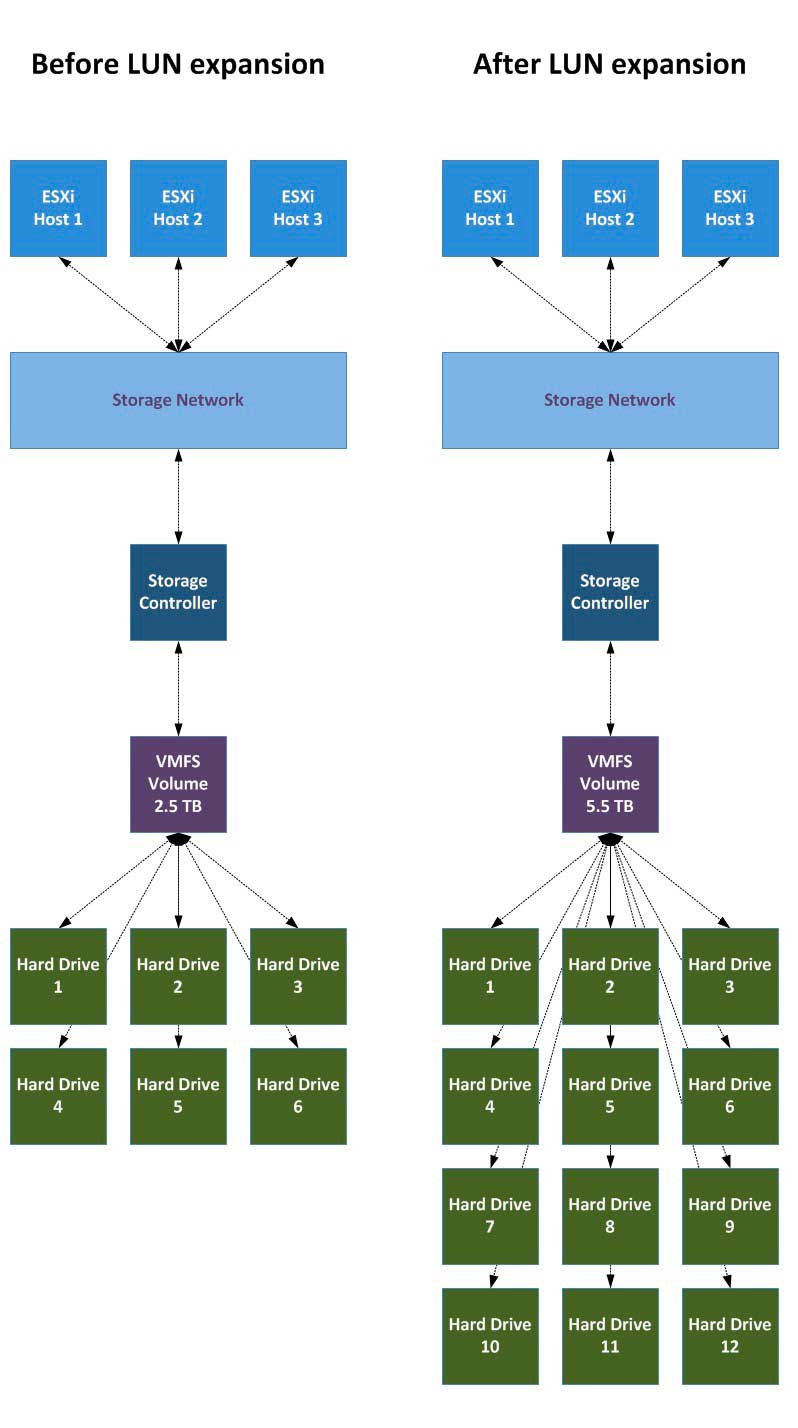

Let's take an example where a three-host cluster has one storage system with one VMFS-5 volume sized at 2.5 TB over six physical drives (see Fig. 1). If the storage system were capable of adding six more drives, I could expand the volume to 5.5 TB (depending on RAID overhead, RAID algorithm, and drive size).

Figure 1. A VMFS volume before and after an expansion.

Now, this example is rather ideal in a way. Assuming all drives are equal on this storage controller, we'd not just be expanding the volume from 2.5 TB to 5.5 TB, which may solve the original problem. We'd also be introducing a great new benefit: doubling the potential IOPS capability of the VMFS volume.
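As a rough back-of-the-envelope sketch of that reasoning, the Python below estimates aggregate random IOPS as the number of drives times a per-drive figure. The 150 IOPS per drive and the function name are assumptions for illustration, not measurements from this array, and RAID write penalties and controller cache are deliberately ignored.

```python
def estimate_iops(drive_count, iops_per_drive=150):
    """Rough aggregate random IOPS for a set of identical drives.

    iops_per_drive=150 is an assumed figure, not a measurement;
    RAID write penalty and cache effects are deliberately ignored.
    """
    if drive_count < 0 or iops_per_drive < 0:
        raise ValueError("drive_count and iops_per_drive must be non-negative")
    return drive_count * iops_per_drive


before = estimate_iops(6)   # the original six-drive volume
after = estimate_iops(12)   # after adding six more drives
print(f"before: {before} IOPS, after: {after} IOPS, ratio: {after / before:.1f}x")
```

Whatever per-drive number you plug in, the ratio is what matters here: with equal drives, twice the spindles gives roughly twice the potential IOPS.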

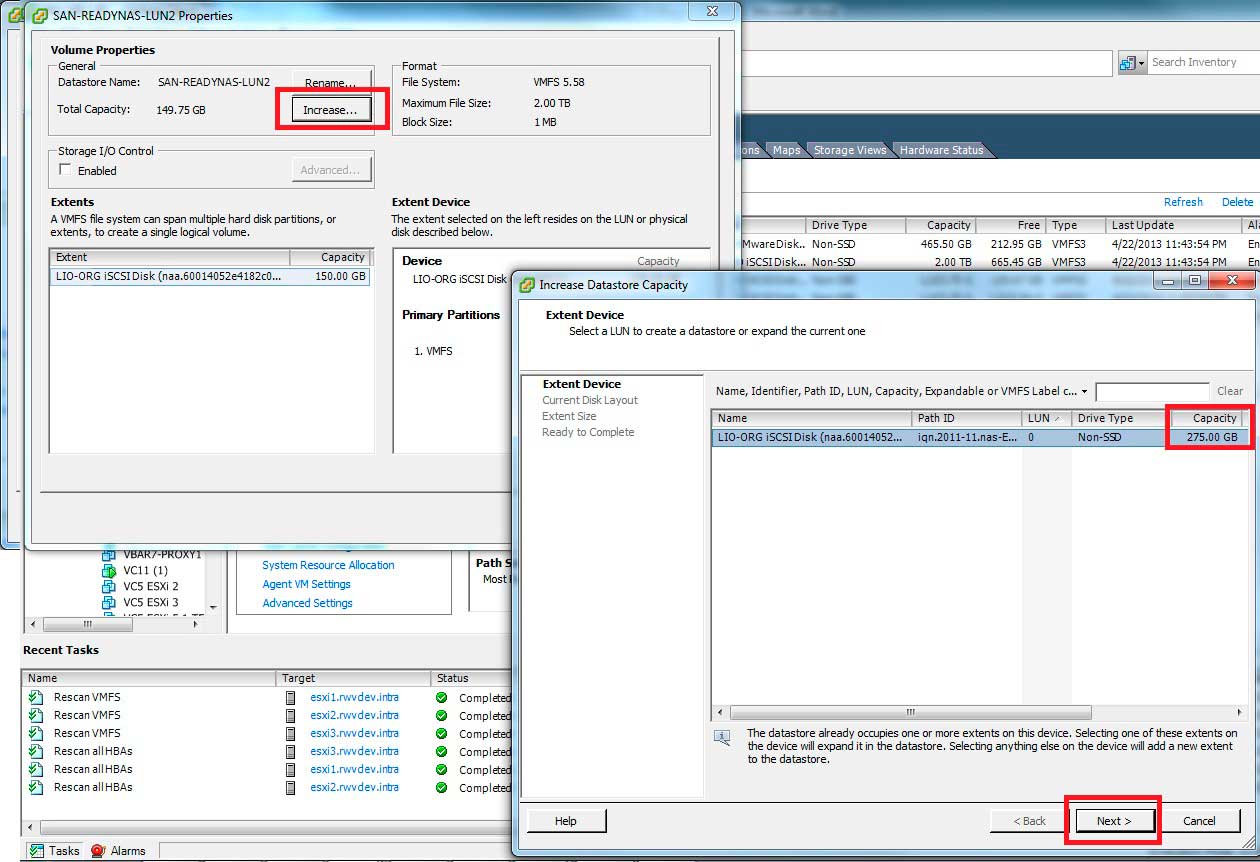

While I don't have the capability in my personal lab to extend to a 12-drive array, I do have an expansion pending on an iSCSI storage system. Once the logical drive (iSCSI or Fibre Channel LUN) is expanded on the storage controller, the vSphere host can detect the change and easily expand the volume. Look at Fig. 2 and you'll notice the increase button is shown in the properties of the datastore (note that a rescan is required to detect the extra space before the increase task is sent).

Figure 2. The expanded space is detected by the vSphere host.

Simply ensure the maximize capacity option is selected from the free space inventory on the volume, and the expansion can be done online, even with VMs running.

The decision point becomes whether the expansion is clean. Are the actual drives on the storage controller serving other clients (even non-vSphere hosts)? That can set you up for a poor and possibly unpredictable performance profile.

Do you prefer to create new LUNs and reformat, or do you consciously perform VMFS expansions? I strive for a clean approach with dedicated volumes. What's your practice? Share your comments here.

Posted by Rick Vanover on 05/06/2013 at 3:31 PM (11 comments)

I'm what you might call a contradiction. I'm definitely not a fan of the repetitive task, but am also coincidentally too lazy to learn how to script this very same task. Sometimes I luck out and a quick Web search will point me in the right direction, or other times my laziness takes me to built-in functions that can help me out just as well. The vSphere Client (and Web Client!) have helped me avoid scripting one more time! Whew!

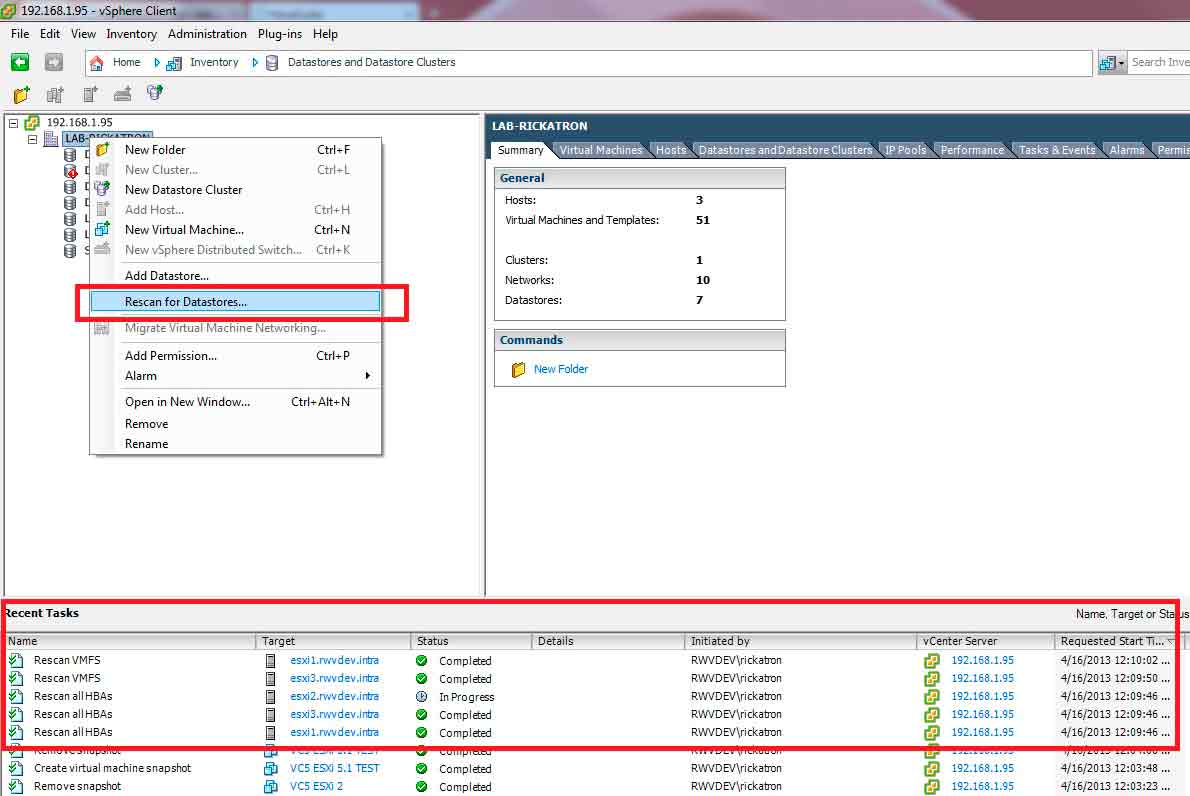

The repetitive task of the day is adding a VMFS volume to a vSphere cluster. Before I found this little gem I'm about to show you, I had to log into each host's storage area and click the rescan button to ensure the local IQN and Fibre Channel interfaces are instructed to search for new storage. This is usually the case when a new LUN is added to existing Fibre fabrics or existing iSCSI targets; a simple rescan will have the new volume arrive and be usable (assuming it is formatted as VMFS).

I found this quick way to scan all hosts in a datacenter in one pass. This is great! Fig. 1 shows this task being done in the vSphere Client.

Figure 1. Rescanning all datastores is as simple as right-clicking from the right view.

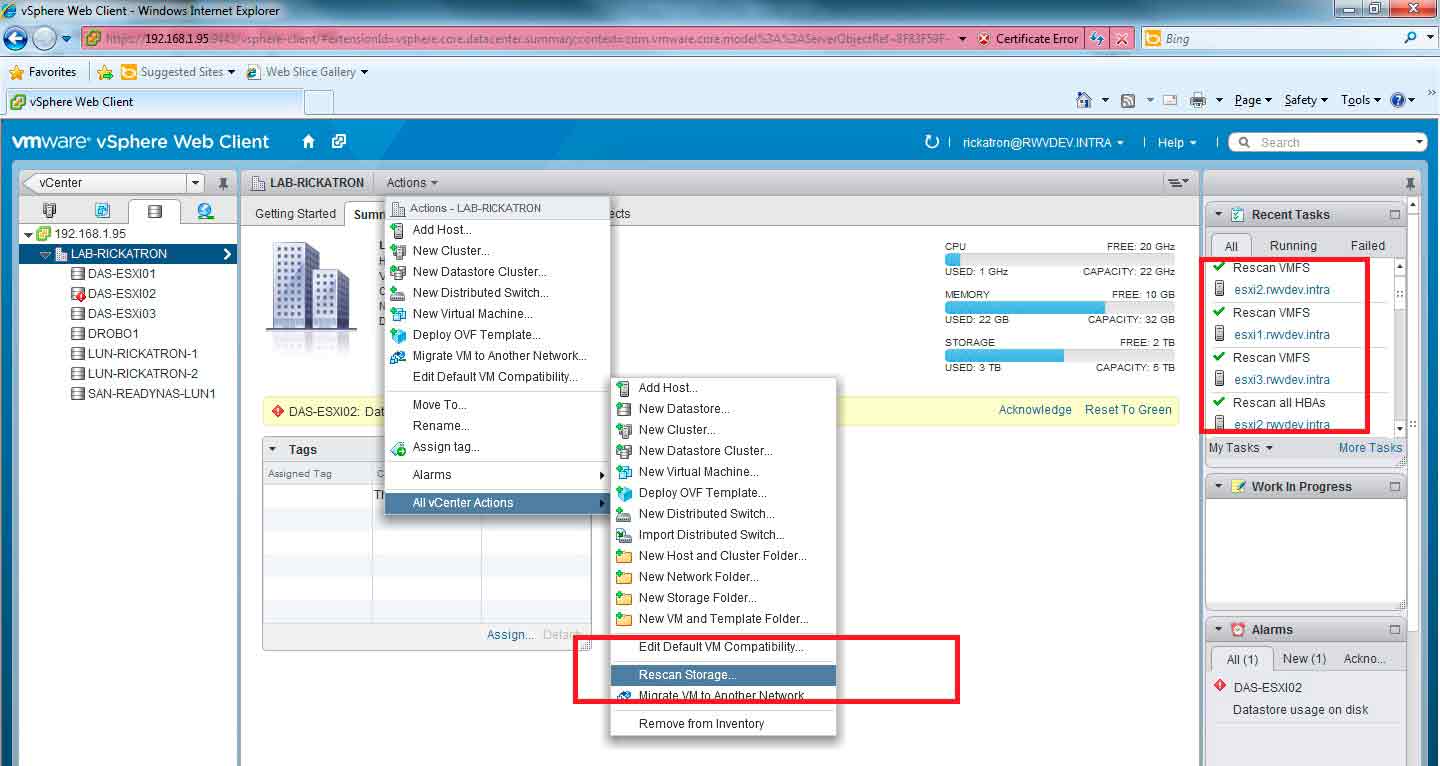

I would be remiss (and possibly be called out) if I didn't show the same example in the vSphere Web Client, the new interface for administering vSphere. To do the same task in the vSphere Web Client, it's the same logic (Fig. 2).

Figure 2. This batch task can be done on the vSphere Web Client also.

And just in case you are wondering, the option to "Add Datastore" when applied at the parent view in the (Windows) vSphere Web Client doesn't assign the VMFS volume to every host. However, once it is deployed to one, you simply rescan all hosts. NFS users: Sorry, try again -- nothing for you this time.

NFS of course can still be addressed with host profiles (as can VMFS volumes, for that matter). But one piece of caution on rescanning all hosts at once: It is indeed a safe task to do for production virtual environments, but historically in my virtualization practice I've always put hosts into maintenance mode first to add storage. This becomes an increasingly repetitive process, but it is worth it, especially once something goes wrong. Chris Wolf sums up the issues in his piece, "Troubleshooting Trouble." My important takeaway is that rescanning for storage on hosts is fine, until there is a problem.

Do you rescan in production or do you always use maintenance mode? Will you use this rescan tip? Share your comments here.

Posted by Rick Vanover on 04/24/2013 at 3:32 PM (1 comment)

I'll admit it -- I love the flexibility that VMware vSphere virtualization provides. The fact is, you can do nearly anything on this platform. This actually can be a bad thing at times. I recently was recording a podcast with Greg Stuart, who blogs at vdestination.com and we observed this very point. We agreed that all virtualized environments are not created equal, and it is very rare if not impossible to see two environments that are the same.

This raises the question: How can virtualized environments be so different? And because of that, what are the things that we may be doing right in one environment but that are wrong in another? I've come up with a list of things you should NOT do with your vSphere environment, even if you can!

1. Don't avoid DNS.

Have you checked out my post from last year, "10 Post-Installation Tweaks to Hyper-V"? My #1 post-installation tweak is that you should get DNS right. In terms of what NOT to do, the first no-no is using hosts files. Sure, it may work, but luckily vSphere 5.1 uses nslookup queries against a DNS server and reports the status. No faking it now! Get DNS correct.

2. Don't stack networks all on top of each other.

Just because you can doesn't mean you should. This is especially the case for network switches. Now, I'll be honest: I do stack many networks on top of each other in lab capacities, but that is for the lab. It's different for production capacities, where I've always put in as much separation as possible. This can be as simple as multiple VLANs, or as sophisticated as management traffic on a separate VLAN and interfaces just for vmkernel.

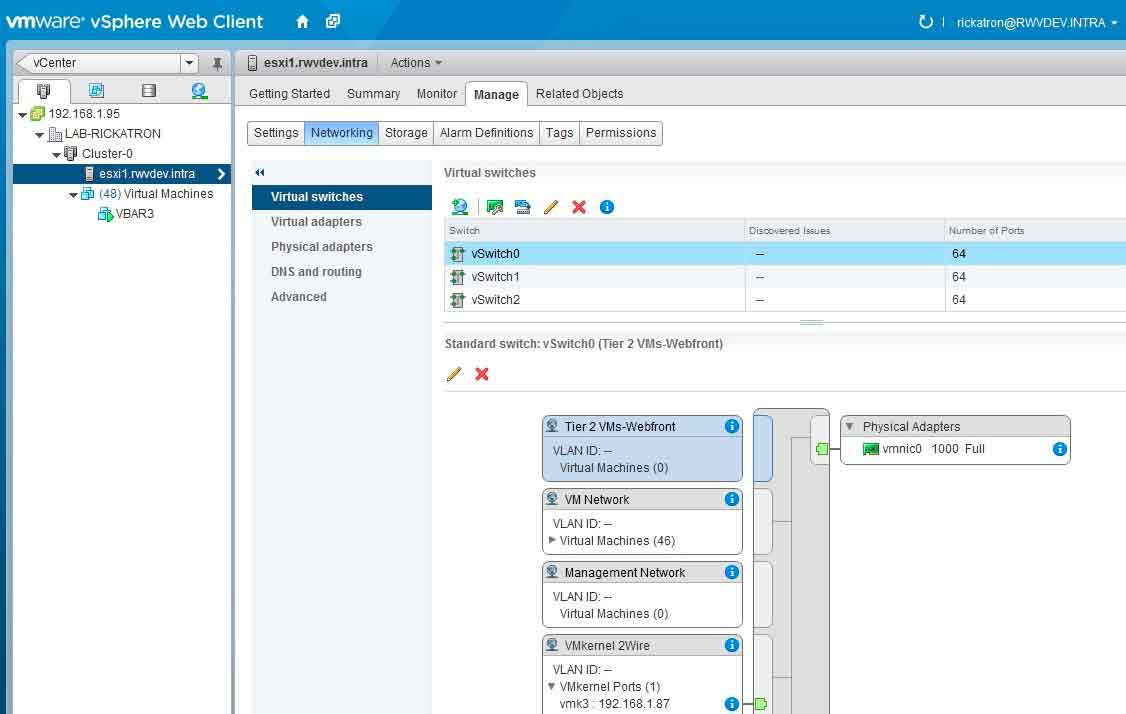

Storage interfaces can be treated the same way: different VLANs and ideally different interfaces. Fig. 1 shows how NOT to deploy it (from my lab!).

Figure 1. This virtual switch has management and iSCSI storage traffic (on vmkernel) and guest VM networking on the same physical interface and TCP/IP segment.

Also, please don't do as I have (again, in the lab) and assign only one physical adapter to the virtual switch for your production workloads. That somewhat defeats the purpose of virtualization abstraction!

3. Don't avoid updating hosts. And VM hardware. And VMware Tools.

vSphere provides a great way to update all of the components of your virtualized infrastructure, via vSphere Update Manager. This component makes it very easy to upgrade these components. In the case of a vSphere host, if you need to go from vSphere 4.1 to 5.1, no problem. If you need to put in the latest hotfixes for vSphere 5.0, no problem as well; Update Manager makes host management very easy.

The same goes for virtual machines; they need attention also. Update Manager is a great way to manage upgrades in sequence for VM hardware levels. You don't have any VMware hardware version 4 VMs lying around there now, do you? Along with hardware version configuration, you can also manage the VMware Tools installation and management process. The tool is there, so use it.

4. Don't overcomplicate DRS configuration.

If you have already done this, you probably have learned to not do this again. It's like touching a hot coal in a fire; you may do it once but you quickly learn to not do it again. Unnecessary tweaking of DRS resource provisioning can cripple a VM's performance, especially if you start messing with the share values assigned to VMs or resource pools. For the mass of VMware admins out there: simply don't do it. Even I don't do this.

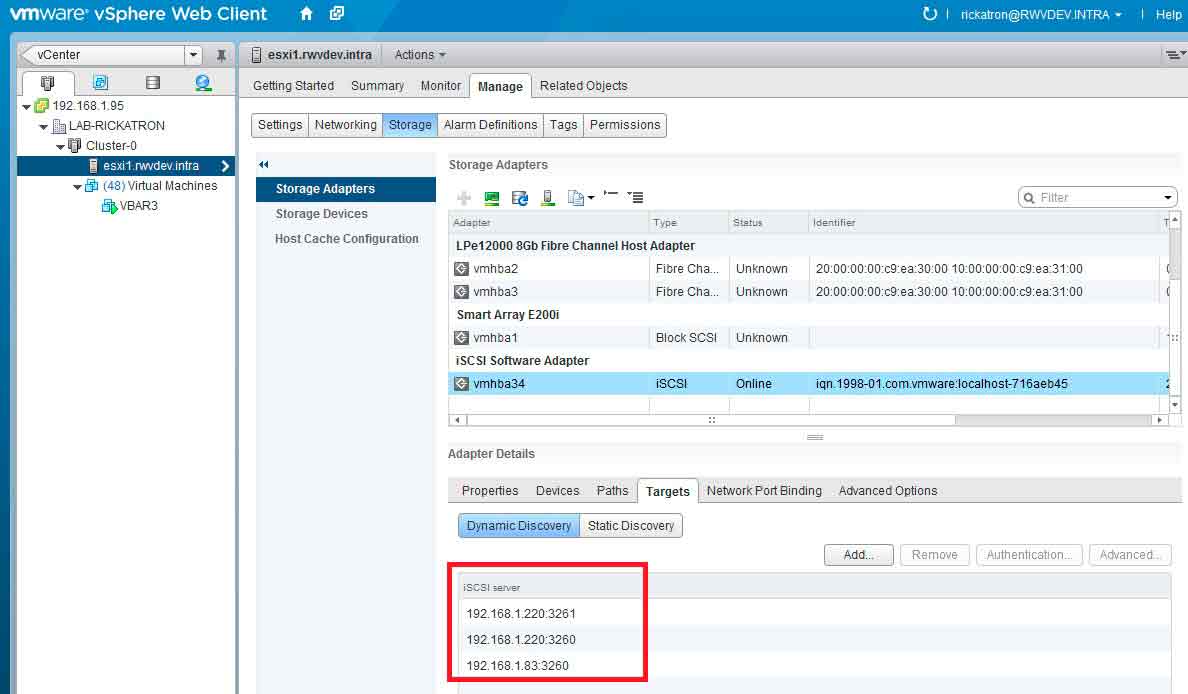

5. Don't leave old storage configurations in vmkernel.

I'll admit that I'm not a good housekeeper. In fact, the best evidence of this again is my lab environment. I'm much better behaved in a production capacity, so trust me on this! But one thing that drives me crazy is old storage configuration entries in the iSCSI target discovery section of the storage adapter configuration. Again, back to my lab. Anyone see the problem here? I'm going to bet that one of those two entries for the same IP address is wrong!

Figure 2. This virtual switch has management and iSCSI storage traffic (on vmkernel) and guest VM networking on the same physical interface and TCP/IP segment.

Hopefully you all can give me a pass; after all, this is my lab. But the fact is, we find ourselves doing these things from time to time in a production capacity.

What configuration practices do you find yourselves constantly telling people NOT to do in their (or your own!) VMware environment? Share your comments here.

Posted by Rick Vanover on 04/10/2013 at 3:32 PM (2 comments)

There is one thing that we all know about virtualization: Storage is your most important decision. I've said a number of times that my transition to virtualization as the core part of my IT practice also led to me knowing a lot about shared storage technologies. As I've developed my storage practice to enable my virtualization practice, one thing I've become good at is breaking down details on transports for virtual machine storage systems.

This can include individual drive performance, usually measured in detail by metrics such as IOPS and drive rotational speed. Note that I didn't mention capacity, as that isn't a way to gauge performance. I also focus a lot on disk interconnection options, such as a SAS bus for drives. Lastly, I spend some time considering the storage protocol in use. For virtual machines, I have used NFS, iSCSI and Fibre Channel over the years. I've yet to use Fibre Channel over Ethernet, which is different from Fibre Channel as we've known it.

When we design storage for virtual machines, many of these decision points can influence the performance and supportability of the infrastructure. I recently took note of 16 Gigabit Fibre Channel interfaces, in particular the Emulex LPe16000 series of devices (available in 1- or 2-port models). I took note here because, when we calculate speed capabilities for a storage system for virtual machines, the storage protocol is important. The communication type is one decision (Fibre or Ethernet), then the rate comes into play. Ethernet is honestly pretty simple, and it has a lot of benefits (especially in supportability).

Ethernet for virtual machines usually exists in 1 and 10 Gigabit networks; slower networks aren't really practical for data center applications. Fibre Channel networks are the mainstay in many data centers, and there are a lot of speeds available: 1, 2, 4, 8 and 16 Gig speeds. It's important to note that Ethernet and Fibre Channel are materially different, and the speed is only part of the picture. Fibre Channel carries SCSI commands over a dedicated (typically optical) fabric, whereas Ethernet storage runs over TCP/IP: iSCSI encapsulates SCSI commands, and NFS is a file-level protocol.

Protocol wars aside, I indeed like the 16 GFC interfaces that are now readily available. Speed is measured differently between Fibre Channel and Ethernet technologies: Ethernet per port is 10 Gigabit for most situations, while 16 GFC is a nominal "16 Gig" label for a line rate of 14.025 gigabaud at full optic data transfer. So, per port, Fibre Channel now has mainstream options faster than 10 Gigabit Ethernet. Of course, there are switch infrastructure and multipathing considerations, but those apply to both Fibre Channel and Ethernet.
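To make the per-port comparison concrete, here is a small sketch that converts line rate into payload bit rate by applying the link's encoding efficiency. The line rates and encodings below are the commonly published values for each link type (they are not taken from any vendor datasheet mentioned in this post), and frame and protocol overhead are ignored, so treat the output as an upper bound per direction.

```python
from fractions import Fraction

# (line rate in gigabaud, encoding efficiency) for each link type.
LINKS = {
    "8GFC":  (Fraction("8.5"),     Fraction(8, 10)),   # 8b/10b encoding
    "10GbE": (Fraction("10.3125"), Fraction(64, 66)),  # 64b/66b encoding
    "16GFC": (Fraction("14.025"),  Fraction(64, 66)),  # 64b/66b encoding
}


def payload_gbps(link):
    """Payload bit rate in Gb/s: line rate times encoding efficiency."""
    line_rate, efficiency = LINKS[link]
    return line_rate * efficiency


for name in LINKS:
    print(f"{name}: {float(payload_gbps(name)):.2f} Gb/s per direction")
```

Exact fractions are used so the arithmetic doesn't pick up floating-point noise; the point is simply that a 16 GFC port moves more bits per second than a 10 Gigabit Ethernet port, even after encoding overhead.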

In my experience for my larger virtualization infrastructures, I'm still a fan of Fibre Channel storage networks. I know that storage is a passionate topic, and this may be a momentary milestone as 25, 40 and 100 Gigabit Ethernet technologies emerge.

What's your take on 16 GFC interfaces? Share your comments here.

Posted by Rick Vanover on 03/25/2013 at 3:33 PM (5 comments)

My last blog on post-installation tweaks for vSphere was a hit! So, I thought this would be a great opportunity to do the same for Microsoft Hyper-V. I think these tips are very helpful. In fact, I may find myself making more little tips like this.

You know what I mean here -- the tips here are some of the things that you actually already know, but how often is it that we forget the little things?!

Here are my tips for Hyper-V after it is installed, in no particular order:

1. Install updates, then decide how to do updates.

Hyper-V, regardless of how it is installed, will need updates and a decision should be made on how to update it. Hyper-V updates that require the host to reboot when VMs are running on the host will simply suspend the VMs and resume them when the host comes back online. This may not be acceptable behavior, so consider VM migration, SCVMM, maintenance windows and more.

2. Make the domain decision.

There are a lot of opinions out there about putting Hyper-V hosts on the same Active Directory domain as "everything else." I've spoken to a few people who like the separate domain for Hyper-V hosts, and even some people who do not join the Hyper-V hosts to a domain. Give some thought to the risks, management domains and possible failure scenarios and make the best decision for your environment.

This is also the right time to get a good name for the Hyper-V host. Here's a tip: Make the name stand out within your server nomenclature. You don't want to make errors on a Hyper-V host, as the impact can be devastating.

3. Configure storage.

If any MPIO drivers, HBA drivers or SAN software are required, get them in before you even add the Hyper-V role (in the Windows Server 2012 installation scenario). You want to have those absolutely right before you even get to the VM discussions.

4. Set administrators.

Does this require the same administrator pool as all other Windows Servers? Then see point 2.

5. Name network interfaces clearly.

If you are doing virtualization well, you will have multiple network interfaces on the hosts. Having every interface called "Local Area Connection" or "Local Area Connection 2" doesn't help you at all. You may get lucky if there is a mix of interface types, so you could see the Broadcom or Intel devices and know where they go. Still, don't chance it. Give each interface a friendly name: "Host LAN," "Management LAN," etc. Maybe even go so far as to indicate the media used, like "C" for copper or "N" for CNAs.

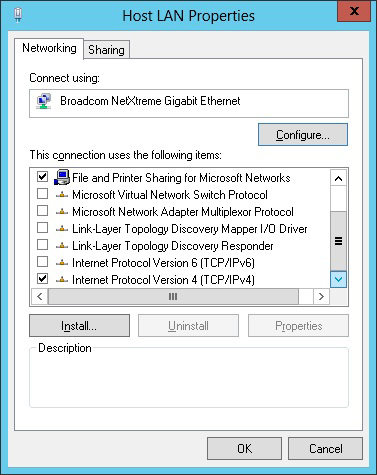

6. Disable unnecessary protocols.

Chances are for your Hyper-V hosts running in your datacenter, you won't be using all of the fancy Windows peer-to-peer networking technologies, much less IPv6. I always simplify things and disable things like IPv6, the Link-Layer Topology services and anything else that I know I won't use. I keep it simple.

Figure 1. Disable protocols you aren’t using for Hyper-V host.

7. Ensure Windows is activated.

Whether you are using a Key Management Server (KMS), Multiple Activation Key (MAK), OEM installed version of Windows or whatever, make sure Windows won't stop at the start-up process asking the console to activate Windows now. This is less of a factor when using Hyper-V Server 2012, the free hypervisor from Microsoft.

8. Configure remote and local management.

This is a Microsoft best practice, and after holding off time and time again, I finally have come around to doing this myself. With the new Server Manager, PowerShell and Hyper-V Manager, you can truly do a lot remotely without having to log into the server directly. That being said, still ensure you have what you need. I still like Remote Desktop to get into servers, even if it is a boring console with only a command line like Windows Server Core with Hyper-V or Hyper-V Server 2012.

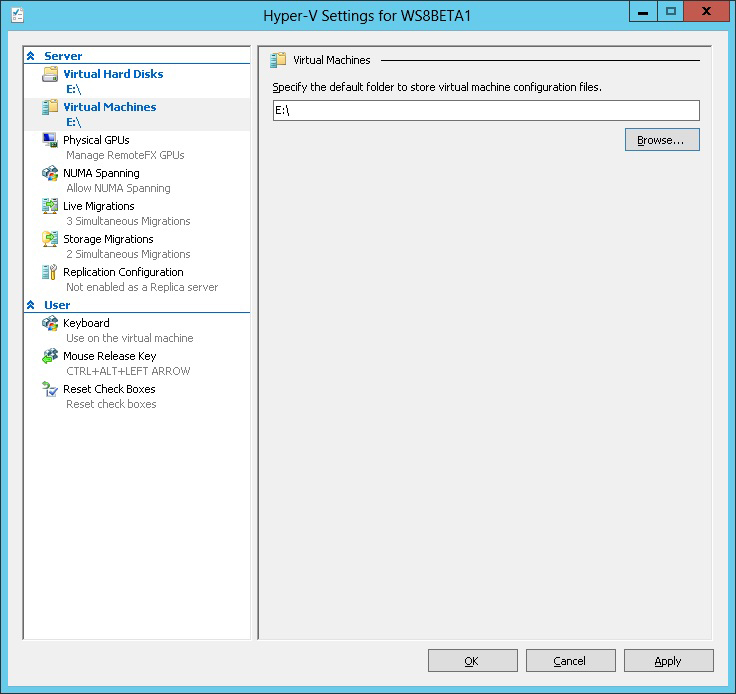

9. Set default VM and disk paths.

There is nothing more irritating than accidentally coming across a VM on local storage. Oddly, this happens on vSphere as well, due to administrator error. But set both the Virtual Hard Disks and Virtual Machines settings to your desired location. You don't want to fill up the C:\ drive and cause host problems, so even if this volume isn't your preferred one (E:\ in Fig. 2), it will better protect the host's integrity if the volume fills up.

Figure 2. Ensure the VMs don’t fill up the C:\ drive on the Hyper-V host from the get-go.

10. Test your out-of-band remote access options.

This tip goes for both Hyper-V and vSphere -- ensure you have what you need to get in when something doesn't go as planned. Tools such as a KVM, Dell DRAC or HP iLO can get you out of a jam.

These tips are some of the more generic ones I use in my virtualization practice, but I'm sure there are plenty more out there. In fact, the best way to learn is to implement something and then take notes of what you have to fix/correct post installation.

What tips do you have for Hyper-V, post-installation? Share them here.

Posted by Rick Vanover on 01/28/2013 at 3:34 PM (4 comments)

For the most part, I live in a world of default configurations. There are, however, a number of small tweaks that I do to ESXi hosts after they have been installed to customize my configuration.

While this list is not specific enough to work everywhere, chances are you will pick up something and say "Oh, yeah, that's a good idea!" I don't include specific networking or storage topics, as they apply to each specific environment, but this generic list may apply to you in each of your virtualization practices. Most if not all of these settings can be pushed through vSphere Host Profiles, but I don't always work in environments licensed to that level.

Here we go!

1. Set DNS.

Get DNS right. Get DNS right. Get DNS right. Specifically, I set each host to have the same DNS servers and suffixes. This also includes getting the hostname from localhost changed to the proper local FQDN. You are renaming your hosts from localhost, aren't you?

2. Create the DNS A record for the host.

Just to ensure your impeccable DNS configuration from step 1 is made right.

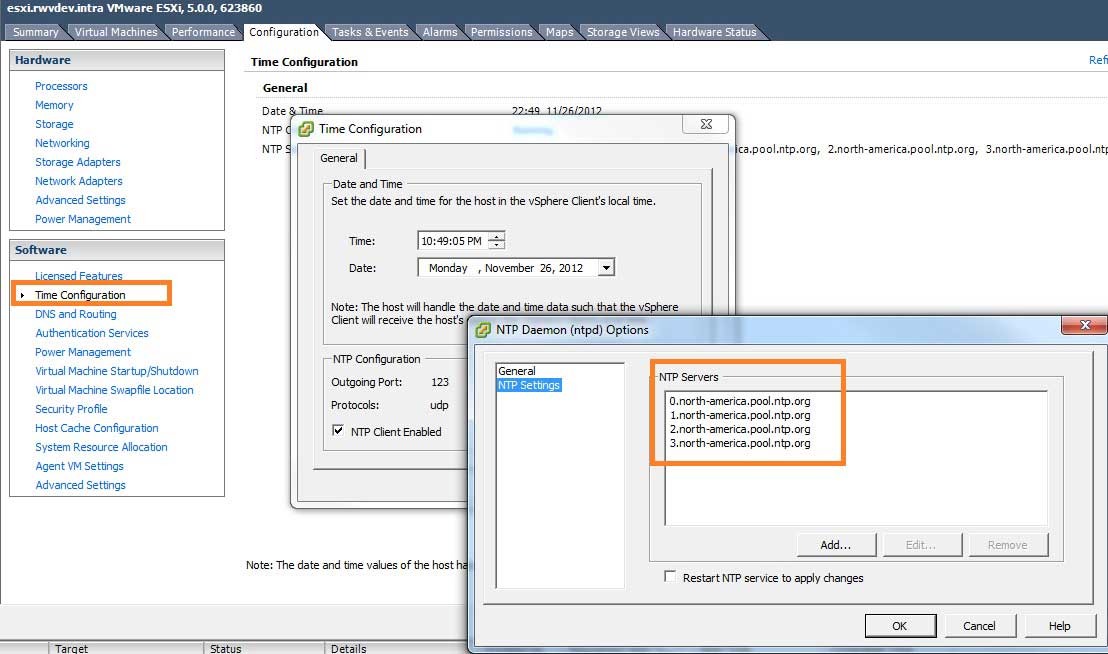

3. Set NTP time servers.

Once DNS is set correctly, I set the hosts to use this pool of NTP servers: 0.north-america.pool.ntp.org, 1.north-america.pool.ntp.org, 2.north-america.pool.ntp.org, 3.north-america.pool.ntp.org. This is set in the host configuration via the vSphere client (see Fig. 1).

Figure 1. The NTP authoritative time server list is configured in the vSphere Client.

4. Raise the maximum number of NFS datastores.

I work in a world where we have multiple NFS datastores, including some that come and go. I did write an earlier blog post on this topic which you can read here.

5. Disable the SSH warning when it is enabled.

I'm sorry, sometimes I need to troubleshoot via the command line and I don't really want to get up from my desk. SSH does the trick. VMware KB 2007922 outlines how to suppress this warning in the vSphere Client.

6. Install correct licensing for the host.

Duh. This will get you in 90 days if you don't do it now.

7. Set any VMs for automatic startup.

This is an important feature when there is a problem such as a power outage. I recommend putting virtualized domain controllers and network infrastructure (DHCP/DNS) first, then critical applications but not necessarily everything else.

8. Enable vMotion on vmkernel.

Not sure why this isn't a default. Of course environments with multiple vmkernel interfaces will need to granularly direct traffic.

9. Rename local datastores.

Nobody likes an environment littered with datastore, datastore (1), datastore (2), etc. I recommend a nomenclature that self-documents the datastore. I have been using this format: DAS-HostName-LUN1. DAS stands for direct attached storage, HostName is the shortname (not localhost!) for the ESXi system, and LUN1 would be the first (or whichever subsequent) volume on the local system. If you don't want to use direct attached storage, you can delete the datastore as well; the ESXi host's partitions will remain. If you are using ESX 4.1 or earlier, however, don't do this.

10. Test your remote access options.

This can include SSH, a hardware appliance such as KVM, Dell DRAC, HP iLO or others. You can't rely on something you can't support.
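The DAS-HostName-LUN1 format from tip 9 is easy to generate consistently. Here is a minimal sketch in Python; the function name and the example FQDN are hypothetical, and the localhost check simply reflects the advice in tip 1 to rename hosts first.

```python
def local_datastore_name(host_fqdn, lun_number=1):
    """Build a DAS-HostName-LUNn name from a host FQDN and a LUN index."""
    short_name = host_fqdn.split(".")[0]
    if not short_name or short_name.lower() == "localhost":
        raise ValueError("rename the host from localhost before naming datastores")
    if lun_number < 1:
        raise ValueError("lun_number starts at 1")
    return f"DAS-{short_name}-LUN{lun_number}"


# Hypothetical host name, used only for illustration.
print(local_datastore_name("esxi01.lab.example.com"))
print(local_datastore_name("esxi01.lab.example.com", lun_number=2))
```

Generating the name rather than typing it keeps the convention identical across hosts, which is the whole point of a self-documenting nomenclature.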

That is my short list of post-installation tips and tricks for generic ESXi host installations. Do you have any post-install tips? Share your tips in the comments section!

Posted by Rick Vanover on 12/19/2012 at 12:48 PM (3 comments)

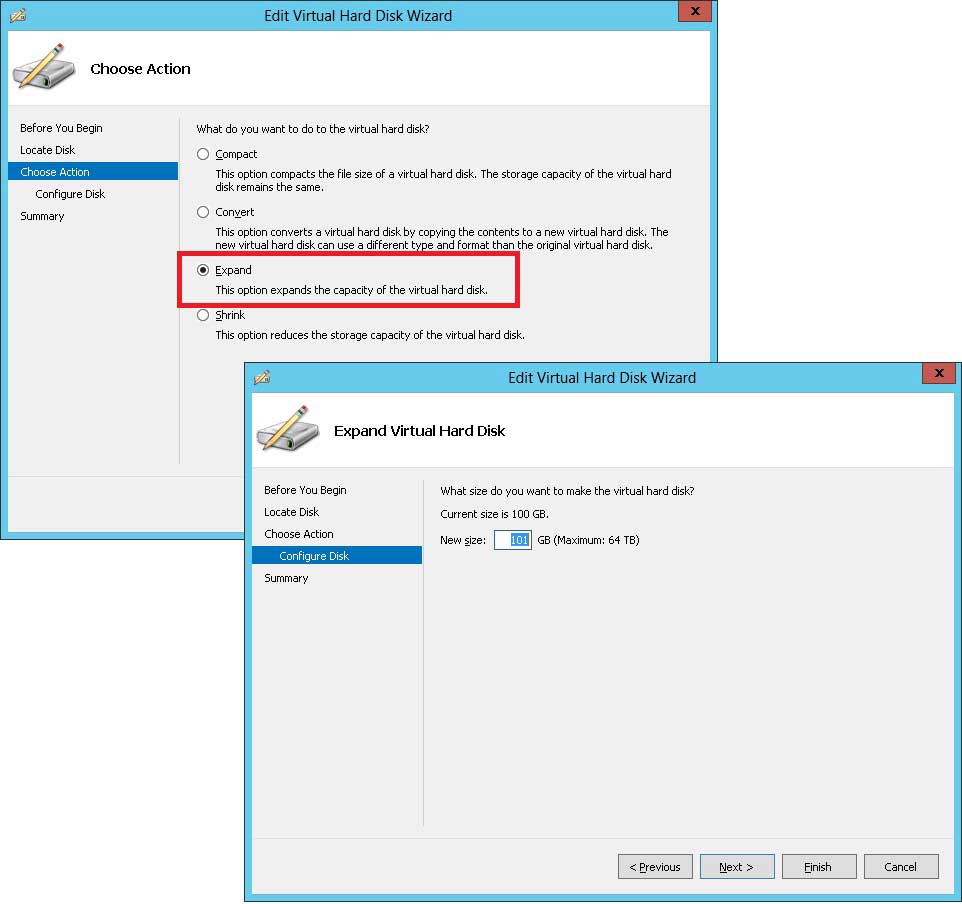

One of my absolute favorite features of Windows Server 2012 with Hyper-V is the VHDX disk file format. This new virtual disk format goes up to 64 TB and is the answer to many storage challenges for VMs when larger workloads end up as a VM.

While my virtualization practice frequently sees me leverage thin-provisioning, I don't always make virtual disk files exactly to the maximum size they can be made. There isn't too much harm in that point, unless the guest operating systems, of course, fill up. Monitoring discussions aside, there are situations where the VM will need its storage allocation increased.

Now, the one bummer here is that, with Hyper-V on Windows Server 2012, the virtual disk cannot grow dynamically while the VM is running. To answer your next question: we can't add a virtual disk to the first IDE controller while the VM is running, either.

But you can add virtual disks to SCSI controllers when the VM is running. The VM does have to be powered off (not paused) to have a VHDX (or VHD) file expanded. Once the VM is shut down, edit the VM settings by right-clicking on the VM in Hyper-V Manager. Once you are in the VM settings, find the virtual disk on the VM (see Fig. 1).

Figure 1. The VHDX can be edited when the VM is powered off to increase its size.

Once the edit option is selected for the VHDX file, four options come up. One of them, surprisingly, is to shrink the VHDX which is quite handy if you need it. Compacting the disk will reclaim space previously used by the guest VM, and the convert option will move it to a new VHD or VHDX with optional format changes. Select the Expand option to increase the size of a VHDX file (see Fig. 2).

Figure 2. Expanding the VHDX file is one of the four options that can be done when the VM is offline.

The 64 TB maximum is a great ceiling for a virtual disk size. Given enough time, this surely will become too small, though I can't imagine it for a while. To increase the disk, simply enter the new number. It is important to note that if the disk is a dynamically expanding disk, it will not write out the new differenced amount, and the expand task will be quick. If the disk is of a fixed size, the expand task will require the I/O to the storage system to write the disk out. If the storage product is supported for Offloaded Data Transfer (ODX), the task may be accelerated by help from the storage array.
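The difference between the two disk types can be sketched as a quick estimate of how much data the expand task has to write. This is only an illustration of the reasoning above, not how Hyper-V implements it; the function name is made up, sizes are in GB with 1 TB taken as 1,024 GB, and any ODX acceleration is ignored.

```python
VHDX_MAX_GB = 64 * 1024  # the 64 TB VHDX ceiling, expressed in GB


def expand_write_gb(current_gb, new_gb, fixed_size):
    """Approximate GB written to storage when expanding a virtual disk.

    A fixed-size disk writes out the added capacity; a dynamically
    expanding disk writes essentially nothing at expand time.
    """
    if new_gb <= current_gb:
        raise ValueError("new size must be larger than the current size")
    if new_gb > VHDX_MAX_GB:
        raise ValueError("VHDX files top out at 64 TB")
    return new_gb - current_gb if fixed_size else 0


print(expand_write_gb(100, 250, fixed_size=True))   # fixed disk: added space is written
print(expand_write_gb(100, 250, fixed_size=False))  # dynamic disk: quick expand
```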

This is an easy task, but it currently has to be done with the VM powered off. How do you manage expanding disk sizes for virtual disks? Further, what is your default allocation? I usually provide VMs 100 GB of space unless I know more going in. Share some of your practice points in the comments.

Posted by Rick Vanover on 12/13/2012 at 12:48 PM (5 comments)

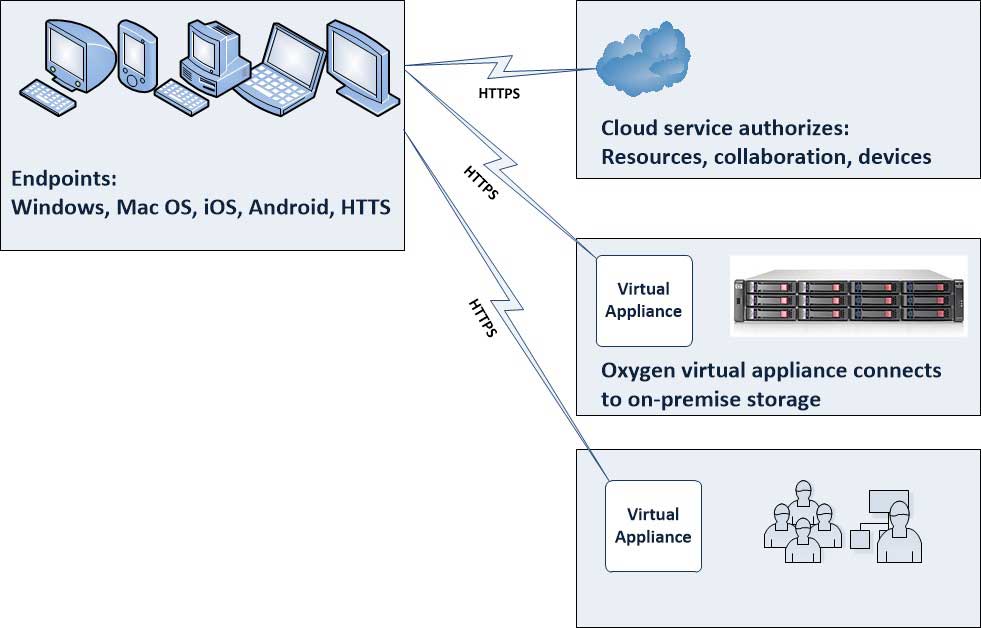

There is no shortage of storage solutions today that position storage around some form of cloud technology. Love or hate the cloud buzzword, here is something different: Oxygen Cloud.

I got to check out the Oxygen Cloud technology and here are my thoughts. First of all, it's not what you think. In fact, I was struggling to say "what" the Oxygen Cloud is. At first it may just look like another file distribution tool, like Dropbox. On a closer look, I found that it's got a lot more going on.

The underlying technology is a virtual file system and that's the key to it all. It is the basis for the synchronization, and then a number of management and policy elements kick in. So, in a way it is the best of both worlds. Let's dig into the details a bit. Fig. 1 shows how the components work.

Figure 1. The Oxygen cloud takes a fresh approach in leveraging on-premise storage and authentication.

The critical element here is that the storage delivered to the endpoints -- Windows, Mac OS, iOS, Android and HTTPS -- is an instance of the virtual file system that originates from on-premise storage. The virtual file system is very intelligent in that it doesn't deliver a "scatter" of all data to all endpoints. Instead, in many situations it retrieves data as needed and only as authorized. And here is where the policy can kick in: Certain endpoints and users can have the full contents of the virtual file system pushed out to avoid interactive retrieval.

In terms of the origination, the on-premise storage resource (accessed over NAS or object-based storage protocols) maintains the full content of the virtual file system and allows each authenticated user and authenticated device to access their permitted view of the virtual file system.

The key here is that there is triple-factor encryption. The virtual file system is encrypted at rest on premise (presumably in your own datacenter), and the sub-instance that would be on an endpoint is encrypted as well. The authentication of a device is encrypted. Lastly, the user authentication process is encrypted from the virtual appliance locally (again, in your own datacenter) to Active Directory.

There are a number of policy-based approaches as well to ensure that the instances of the virtual file system exist in a manner that makes sense for devices that are floating around the Internet and other places unknown. One of those is a cache timeout where, if the device and user don't authenticate back, the instance of the virtual file system will deny further access. For example, if an employee is fired from an organization but the PC never subsequently connects to the Internet, the virtual file system can be set to prohibit the user from subsequent access.
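The cache timeout idea boils down to a lease check: access is allowed only while the last successful authentication is recent enough. The sketch below shows the logic in generic Python; it is not Oxygen Cloud's API, and the function name, the seven-day timeout and the dates are all assumptions for illustration.

```python
from datetime import datetime, timedelta


def access_allowed(last_auth, now, cache_timeout):
    """True while the last successful authentication is within the timeout."""
    return now - last_auth <= cache_timeout


timeout = timedelta(days=7)             # assumed policy value
last_auth = datetime(2012, 9, 1, 9, 0)  # last time device and user checked in

print(access_allowed(last_auth, datetime(2012, 9, 5, 9, 0), timeout))   # still inside the window
print(access_allowed(last_auth, datetime(2012, 9, 12, 9, 0), timeout))  # window has lapsed
```

Because the check depends only on a locally stored timestamp, it still works on a PC that never reconnects, which is exactly the fired-employee case.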

In terms of the endpoints, Windows, Mac OS, iOS and Android are ready to go and for PCs and Mac systems the Oxygen Cloud appears as a local drive. That makes it easy for the user to access the data. Under the hood, it is a virtual file system, yet for mere mortals out in the world we need to make this an easy process.

What do you think of this approach? I like the blend of a smart virtual file system coupled with rich policy offerings. Share your comments on content virtualization here!

Posted by Rick Vanover on 09/12/2012 at 12:48 PM (5 comments)

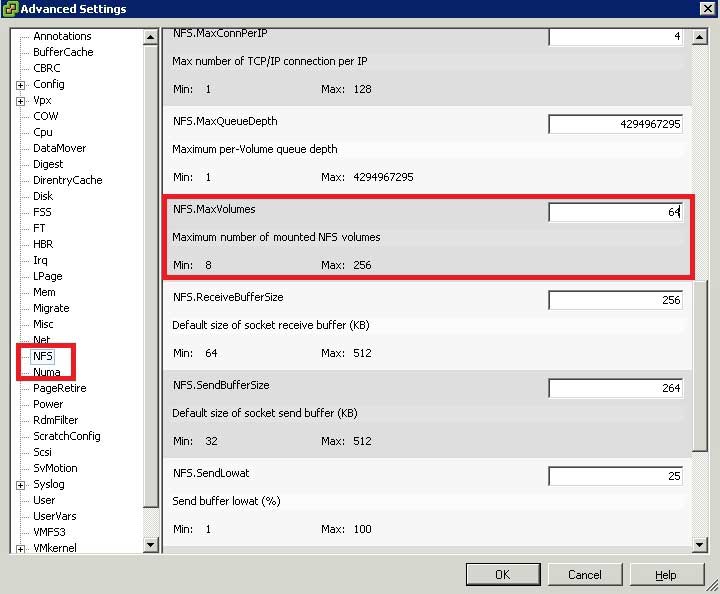

By default, an ESXi host is permitted to have eight NFS datastores. In vSphere environments, you might find it necessary to go over the limit and add more than eight NFS datastores, and it may not be obvious why you can't add another one. Historically, NFS datastores were unique compared to VMFS datastores in that they could scale above 2 TB without using extents. With vSphere 5 and VMFS-5, that limitation is now removed.

It is possible to have a small number of large NFS datastores, but it is also a valid configuration to leverage a larger number of smaller NFS datastores. This may or may not be on multiple storage controllers, as today storage can exist in many forms on vSphere hosts.

The default number of NFS datastores is configured in the Software advanced settings section of the host (see Fig. 1). This setting can be applied to a host profile policy as well.

Figure 1. The advanced software configuration allows the number of NFS datastores to be set.

Changing the default is one of those pre-deployment tweaks I put in before the host is deployed, as you often only find out this is a problem after the host is in use. Setting the option does not require a reboot, but using it does; either way, I don't like doing tasks like this on a host unless it is in maintenance mode. Yes, I'm a bit of a safety freak -- but if the platform has a maintenance-mode capability, why risk it?

Most of my experience with vSphere storage has been block protocols that leverage the VMFS file system. That includes iSCSI, Fibre Channel and SAS protocols.

Using NFS is a different beast and requires a few considerations. First of all, make a careful decision on whether to use DNS names or IP addresses for the storage resources; I can't think of too many reasons why DNS names would be better than IP addresses, as I generally favor granular control of storage resources.

The next decision is to ensure that the mount path is done consistently. Non-Linux people may not pay proper attention to this step, but it's critical for vSphere functions like vMotion to work correctly among hosts with the same datastore.
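One way to catch inconsistent mounts before they bite is to compare the server and export path every host uses for each datastore name. The sketch below works on a plain list of tuples; how you collect that inventory (PowerCLI export, spreadsheet, etc.) is up to you, and the host names, addresses and paths are made up. The comparison is an exact string match on purpose, a deliberately strict assumption so that even a stray trailing slash gets flagged.

```python
from collections import defaultdict


def find_inconsistent_mounts(mounts):
    """Find datastores mounted with more than one server/path combination.

    mounts: iterable of (host, datastore_name, nfs_server, export_path).
    Returns {datastore_name: {(nfs_server, export_path): [hosts]}}.
    """
    by_datastore = defaultdict(lambda: defaultdict(list))
    for host, datastore, server, path in mounts:
        by_datastore[datastore][(server, path)].append(host)
    return {
        ds: dict(targets)
        for ds, targets in by_datastore.items()
        if len(targets) > 1
    }


# Hypothetical inventory, for illustration only.
mounts = [
    ("esxi01", "nfs-ds1", "192.168.10.5", "/vol/ds1"),
    ("esxi02", "nfs-ds1", "192.168.10.5", "/vol/ds1"),
    ("esxi03", "nfs-ds1", "192.168.10.5", "/vol/ds1/"),  # trailing slash differs
    ("esxi01", "nfs-ds2", "192.168.10.5", "/vol/ds2"),
    ("esxi02", "nfs-ds2", "192.168.10.5", "/vol/ds2"),
]

for ds, targets in find_inconsistent_mounts(mounts).items():
    for (server, path), hosts in targets.items():
        print(f"{ds}: {server}:{path} mounted on {', '.join(hosts)}")
```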

Additional information on increasing the maximum number of NFS datastores can be found at VMware KB 2239. VMware KB 1007909 also has some additional advanced NFS tweaks which may be of interest.

Have you had to increase the number of NFS datastores, what number have you set and why? Share your experiences here.

Posted by Rick Vanover on 08/29/2012 at 3:35 PM (2 comments)

If you haven't been following Microsoft's news, messaging and strategies of late, you really should, if only to be aware of the announcements with Hyper-V and the upcoming Windows Server 2012 release. System Center Virtual Machine Manager 2012 (SCVMM) recently went generally available and brings a lot of management options for Hyper-V hosts.

One area that caught my interest with SCVMM was the Fabric Resources section. Storage and network fabric management is one of the most challenging administrative aspects of virtualization. It's even worse for someone like me, who is historically used to other virtualization technologies. Learning this on Hyper-V and SCVMM platforms can be challenging.

The Fabric Resources section of SCVMM is on the toolbar as a primary section that has all hosts' fabric elements inventoried. The dependencies view will quickly show aggregated information so that any configuration elements that differ between hosts can be seen quickly. The figure below shows the fabric dependencies in an SCVMM console:

The SCVMM console allows administrators to quickly see storage and networking configuration for all hosts.

I like this dependency view as a way to check each host's configuration for inconsistencies. I have used names for networks that are formatted like XYZ-XYZ-XYZ, where there are three text-based descriptors (not necessarily three characters long) for each network. I could just name them all "XYZ," where XYZ would just be the VLAN that each network resides on. But you see the issue. There are a number of different ways that you can name a virtual network in Hyper-V and other virtualization platforms, but the dependency view makes it easy to see them all and to spot any that may be configured differently.
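For readers who want to run the same kind of check outside the console, here is a small Python sketch of the idea. It is not SCVMM functionality: the host names, network names, the regular expression for the three-descriptor convention and the `audit_network_names` helper are all assumptions made for illustration.

```python
import re

# Assumed convention: three hyphen-separated alphanumeric descriptors.
NAME_PATTERN = re.compile(r"^[A-Za-z0-9]+-[A-Za-z0-9]+-[A-Za-z0-9]+$")


def audit_network_names(networks_by_host):
    """Return (malformed, missing): names that break the convention,
    and per-host lists of networks defined elsewhere but not there."""
    all_names = set().union(*networks_by_host.values())
    malformed = sorted(n for n in all_names if not NAME_PATTERN.match(n))
    missing = {}
    for host, names in networks_by_host.items():
        gap = sorted(all_names - set(names))
        if gap:
            missing[host] = gap
    return malformed, missing


# Hypothetical inventory of virtual network names per host.
hosts = {
    "hv01": ["PROD-WEB-100", "PROD-DB-200"],
    "hv02": ["PROD-WEB-100", "VLAN200"],
}

malformed, missing = audit_network_names(hosts)
print("Names off convention:", malformed)
print("Networks missing per host:", missing)
```

Here the second host names its database network differently, so the sketch flags both the off-convention name and the resulting gap on each host.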

I find value in tools that can show aggregated configuration like this so minor issues are identified and corrected. Do you find value in tools that can help ensure consistency in configuration? Share your comments below.

Posted by Rick Vanover on 07/11/2012 at 12:48 PM (0 comments)

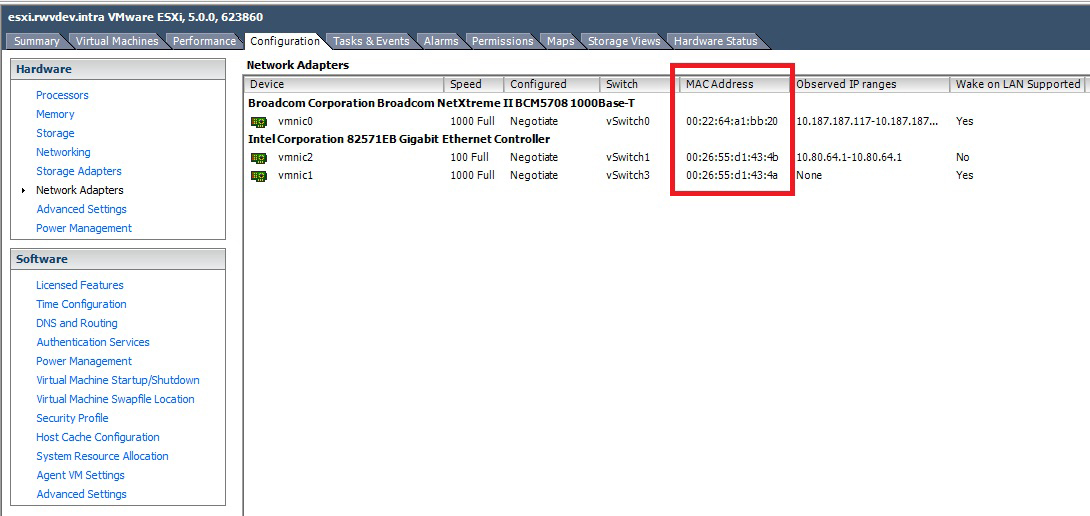

With multiple layers of virtualization, it can become incredibly difficult to know what is what. One particular challenge is determining the MAC addresses of the ESXi components. Specifically, determining which MAC addresses belong to the vmkernel interfaces can be difficult when the host provides virtual switches and runs multiple host-based network services on them.

While this can be done with PowerCLI or SSH, I'm a fan of getting this information directly in the vSphere Client.

The first step is to clearly identify the MAC addresses of the host's network adapters, which is done in the network adapters section of the vSphere Client. Fig. 1 shows the three interfaces on a ProLiant ML350 host in my lab.

Figure 1. The MAC addresses of the host interfaces, shown in the vSphere Client.

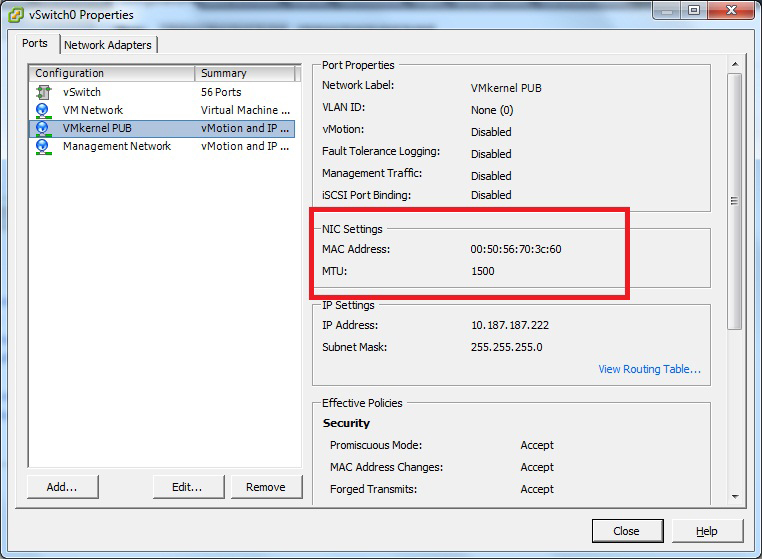

The plot thickens when it comes to the vmkernel interface, the software stack on the ESXi host that interacts with vCenter Server and performs additional duties such as handling vMotion events and, in certain configurations, providing the iSCSI initiator service.

With a default installation of ESXi, the vmkernel interface is a logical node on a default virtual switch, and it takes its IP address using the MAC address of the first interface it can connect to. Should additional vmkernel interfaces be added, ESXi will create a MAC address for each that is not based on the host's hardware interfaces. Fig. 2 shows a secondary vmkernel interface on the same ProLiant ML350 host, yet it uses a different MAC identifier string.

Figure 2. The MAC address of additional vmkernel interfaces won't use the host MAC identifier.
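If you have copied the addresses out of the client, a few lines of Python can sort them into "borrowed from a physical NIC" and "generated by the hypervisor." This is a minimal sketch under two assumptions: that generated addresses carry one of VMware's registered OUI prefixes (such as 00:50:56), and that the MAC values below, which are made up for illustration, stand in for your own. The `classify_vmk_macs` helper is hypothetical.

```python
# OUI prefixes registered to VMware (first three octets of the MAC).
VMWARE_OUIS = {"00:50:56", "00:0c:29", "00:05:69", "00:1c:14"}


def normalize_mac(mac):
    """Lower-case a MAC and rewrite it as colon-separated octets."""
    digits = "".join(c for c in mac.lower() if c in "0123456789abcdef")
    if len(digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def classify_vmk_macs(physical_macs, vmk_macs):
    """Label each vmkernel interface by where its MAC appears to come from."""
    physical = {normalize_mac(m) for m in physical_macs}
    labels = {}
    for name, mac in vmk_macs.items():
        mac = normalize_mac(mac)
        if mac in physical:
            labels[name] = "matches physical NIC"
        elif mac[:8] in VMWARE_OUIS:
            labels[name] = "VMware-generated"
        else:
            labels[name] = "unknown"
    return labels


# Made-up addresses for illustration only.
physical_nics = ["00:1F:29:AA:BB:01", "00-1F-29-AA-BB-02"]
vmkernel_ports = {"vmk0": "00:1f:29:aa:bb:01", "vmk1": "00:50:56:7A:12:34"}

print(classify_vmk_macs(physical_nics, vmkernel_ports))
```

A result of "unknown" simply means the prefix is in neither list; it is a prompt to look closer, not a diagnosis.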

With virtualization come many levels of abstraction, and the vmkernel interface is an important aspect of the vSphere implementation. The MAC address may be needed for QoS priorities on the switching infrastructure or possibly IP reservations, firewall rules and more.

How do you manage the vmkernel interface MAC address? Share your strategies here.

Posted by Rick Vanover on 06/11/2012 at 12:48 PM (0 comments)

Let's face it, VMware is the virtualization platform to beat! The company has so many offerings in the space right now, that one can only take the time to make a wish list. While you might expect me to write a blog like this around the end of the year -- some sort of New Year's Resolution, as it were -- this came to me just today and I had to share it! It's not a rant, but a random collection of thoughts on VMware topics.

The VMTN Revival

This comes up from time to time and I even did an open microphone podcast about it. Basically, I'd love to see VMware offer a program where IT pros could pay for access to VMware licensing for lab use. This could be for learning new products before trying them in production, studying for certification paths, or writing a blog!

What got me thinking about this is that Mike Laverick started a great discussion on the VMware Communities on this topic, one of the most active I have ever seen. I still think that Microsoft has a superior offering here and I pointed this out in my personal blog post. I think the official answer from VMware would be that they're still thinking about the right solution. The time is right and the products are rich, so I'd love to see this happen soon!

VMDK larger than 2 TB

Please. That is all.

KB Application

This has come up a few times from experts in the field like Edward Haletky, who would love to see an application that makes all of the VMware KBs available for download. I think that's a great idea! Edward brings up the fact that in many situations (especially as a consultant), Web access isn't always an option.

I'll take that one step further. How many times has the average IT pro been "too lazy" to go look something up and do it right? If we had all of those KBs on our phone or iPad, we might be more tempted to do things correctly. I'd love to see an application that has all of the VMware KB articles in one place as a download for offline access.

VMworld Ideas

While I personally think VMworld is the premier large IT event, I always have some comments on it. I'd love to see it be hosted in the Eastern part of the United States. Boston, Chicago, New Orleans, Dallas, Orlando ... heck, even New York, these would all be great cities. Meet me halfway, VMware. How about Denver?

One thing I grumbled a bit about this year is the fact that the opening night of the Solutions Exchange is now Sunday night. The schedule was shifted back a bit, as surely this year's event is going to be bigger and badder than ever before, and there simply needs to be more time to squeeze it in.

Truth be told, I'd rather start earlier than cut into the precious holiday weekend. In the U.S., we celebrate Labor Day on the first Monday of September. I can confirm that I am labored after VMworld, and I want that holiday break!

Tired of the Letter V

I admit I'm getting cosmetic here, but in a few years we will be absolutely sick of all of the monikers that leverage the letter V as a first letter. Heck, I don't even like being called "Rick V." I'm more casual; Rickatron suits me best.

VMware isn't the only one that fancies the letter V. EMC seems to have quite a few products starting with V of late, and it seems to fit in with the general technology trends of today. But it feels like that color of paint that you know is OK today but that you may regret looking at in a few years.

Your Wish List?

What would you like to see from VMware in the near term? Share your comments here.

Posted by Rick Vanover on 05/30/2012 at 12:48 PM (33 comments)